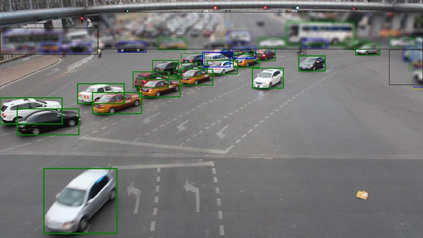

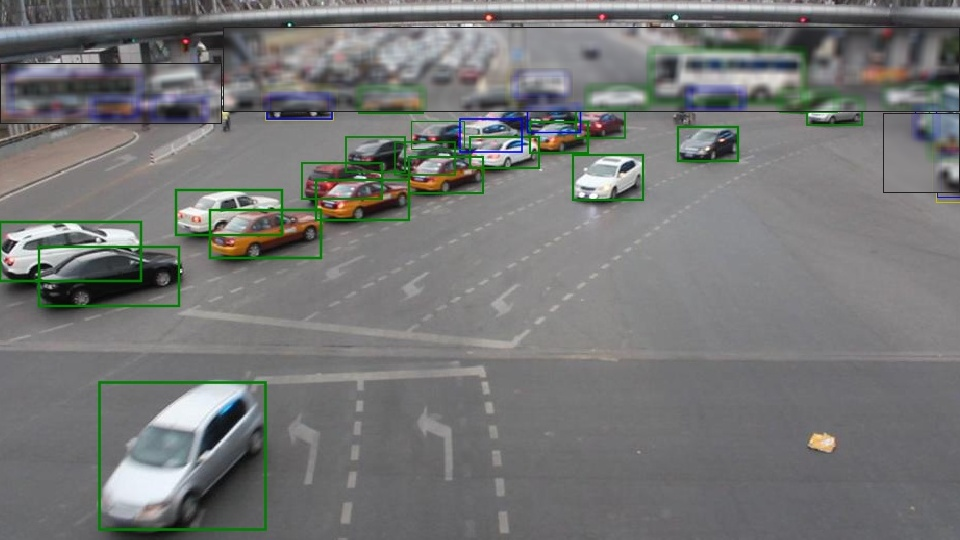

A significant amount of redundancy exists between consecutive frames of a video. Object detectors typically produce detections for one image at a time, without any capabilities for taking advantage of this redundancy. Meanwhile, many applications for object detection work with videos, including intelligent transportation systems, advanced driver assistance systems and video surveillance. Our work aims at taking advantage of the similarity between video frames to produce better detections. We propose FFAVOD, standing for feature fusion architecture for video object detection. We first introduce a novel video object detection architecture that allows a network to share feature maps between nearby frames. Second, we propose a feature fusion module that learns to merge feature maps to enhance them. We show that using the proposed architecture and the fusion module can improve the performance of three base object detectors on two object detection benchmarks containing sequences of moving road users. Additionally, to further increase performance, we propose an improvement to the SpotNet attention module. Using our architecture on the improved SpotNet detector, we obtain the state-of-the-art performance on the UA-DETRAC public benchmark as well as on the UAVDT dataset. Code is available at https://github.com/hu64/FFAVOD.

翻译:在连续的视频框架之间存在大量冗余。 物体探测器通常一次生成一个图像的探测,而没有利用这种冗余的任何能力。 同时,许多应用视频的物体探测工作应用,包括智能运输系统、先进的驱动器协助系统和视频监视。 我们的工作旨在利用视频框架之间的相似性来产生更好的检测。 我们提议FFAVOD, 站立于视频物体探测的特征聚合结构。 我们首先引入一个新的视频物体探测结构, 使网络能够共享附近框架之间的特征地图。 第二, 我们提出一个功能聚合模块, 学习合并特征地图来增强这些特征。 我们表明,使用拟议的架构和聚合模块可以改进包含移动道路使用者序列的两个目标探测器的性能。 此外,为了进一步提高性能,我们提议改进PotNet关注模块。 我们使用改进的SpotNet探测器的架构, 我们获得了UA-DETRAC公共基准以及UAVDD数据集的状态-艺术性能表现。 代码可在 https://github.com/huFF64/OD上查阅。