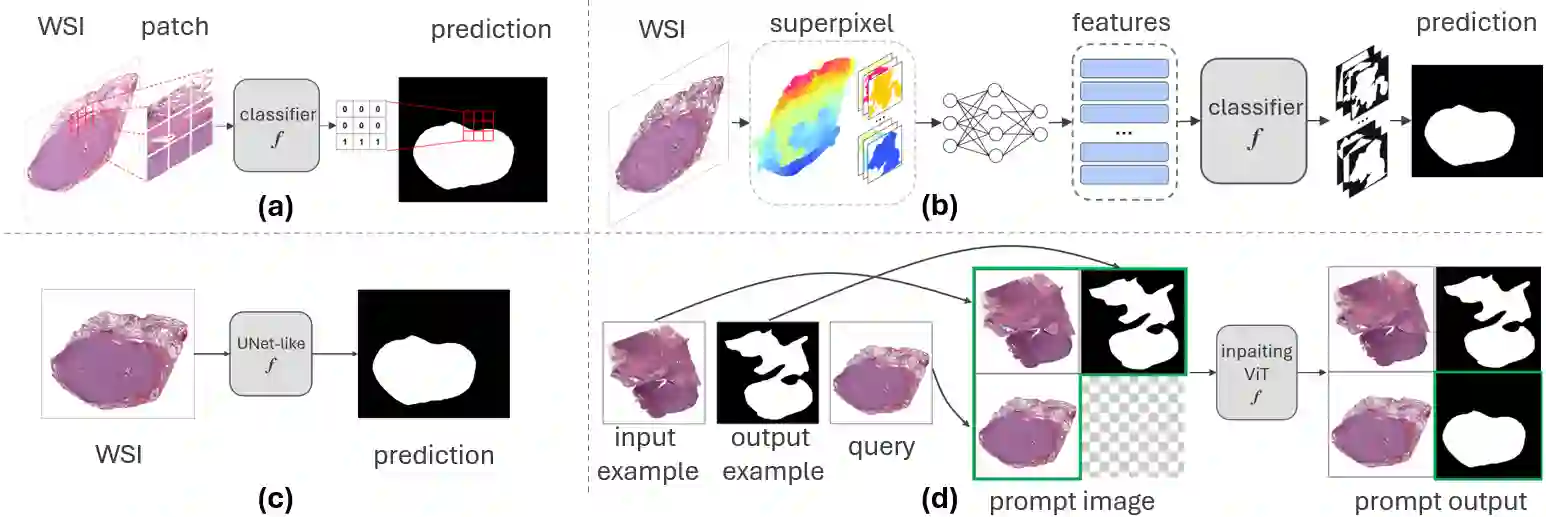

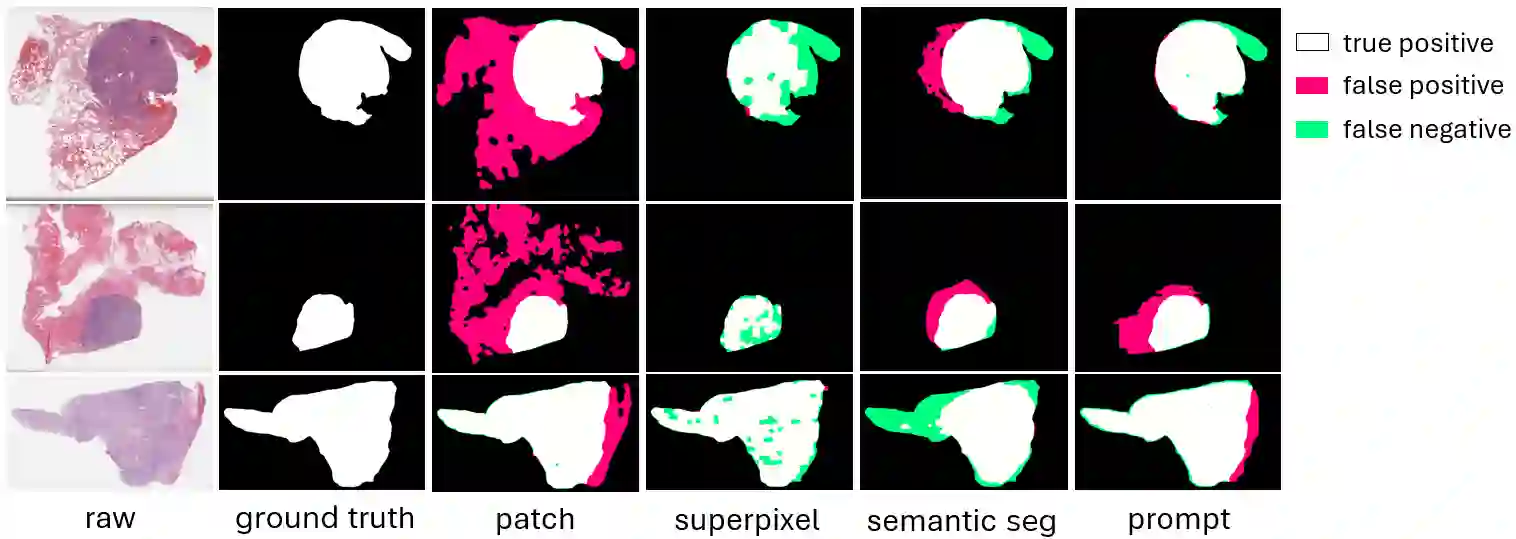

Tumor segmentation is a pivotal task in cancer diagnosis. Given the immense dimensions of whole slide images (WSIs) in histology, deep learning approaches for WSI classification mainly operate at the patch or superpixel level. However, these solutions often struggle to capture global WSI information and cannot directly generate a binary mask. Downsampling the WSI and performing semantic segmentation is another possible approach. While this method is computationally efficient, it requires a large amount of annotated data, since reducing the resolution may lead to information loss. Visual prompting is a novel paradigm that allows a model to perform new tasks through subtle modifications to the input space rather than by adapting the model itself. Such an approach has demonstrated promising results on many computer vision tasks. In this paper, we show the efficacy of visual prompting for tumor segmentation in three distinct organs. Compared with classical methods trained specifically for this task, our findings reveal that, with appropriate prompt examples, visual prompting can achieve comparable or better performance without extensive fine-tuning.
