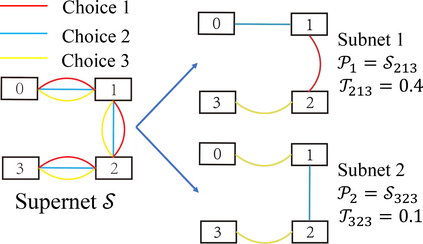

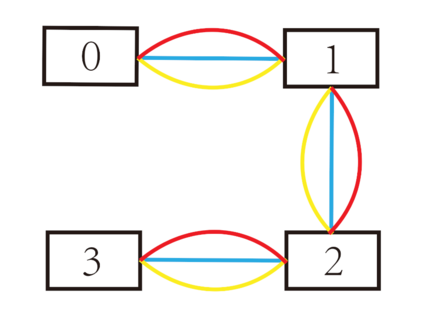

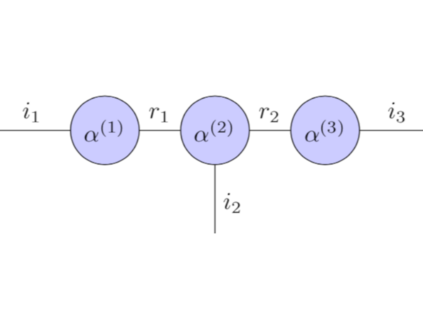

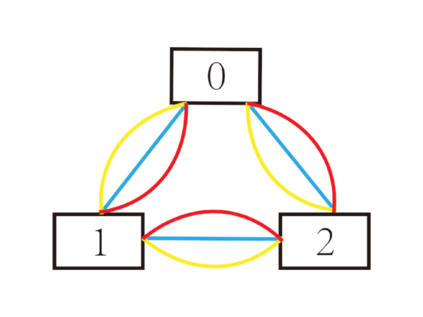

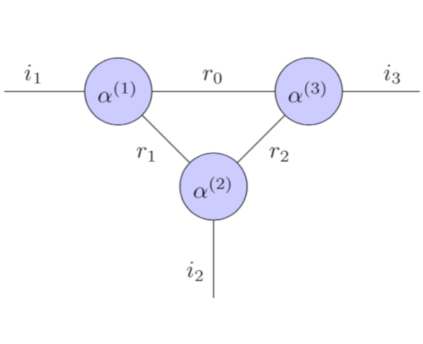

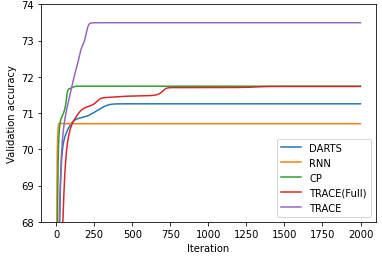

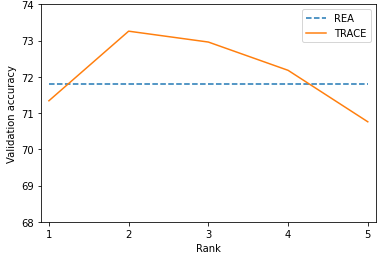

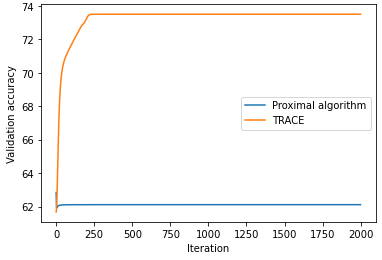

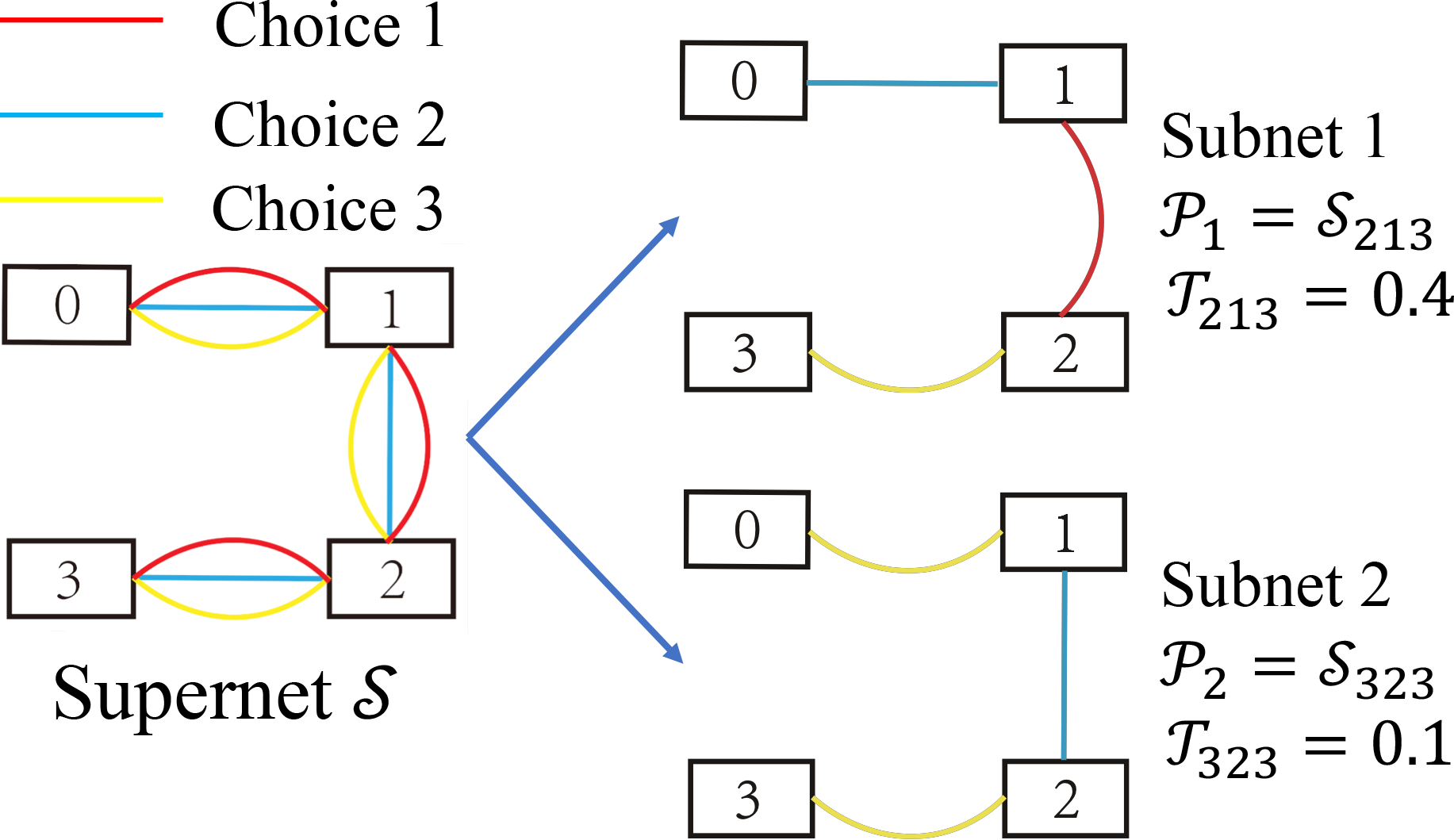

Recently, a special kind of graph, the supernet, which allows two nodes to be connected by multi-choice edges, has exhibited its power in neural architecture search (NAS) by finding better architectures for computer vision (CV) and natural language processing (NLP) tasks. In this paper, we discover that the design of such discrete architectures also appears in many other important learning tasks, e.g., logical chain inference in knowledge graphs (KGs) and meta-path discovery in heterogeneous information networks (HINs). Thus, we are motivated to generalize the supernet search problem to a broader horizon. However, none of the existing works are effective in this general setting, since the supernet topology is highly task-dependent and diverse. To address this issue, we propose to tensorize the supernet, i.e., unify the subgraph search problems by a tensor formulation and encode the topology inside the supernet by a tensor network. We further propose an efficient algorithm that admits both stochastic and deterministic objectives to solve the search problem. Finally, we perform extensive experiments on diverse learning tasks, i.e., architecture design for CV, logic inference for KG, and meta-path discovery for HIN. Empirical results demonstrate that our method leads to better performance and architectures.
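To make the tensor view of a supernet concrete, the following is a minimal sketch and not the method proposed in the paper. It assumes a toy supernet with three edges, treats each subgraph as one index tuple (one choice per edge), and regards the scores of all subgraphs as the entries of an order-3 tensor. The operation names, the `toy_score` function, and its numbers are hypothetical placeholders for a real validation metric. The per-edge distributions are assumed independent, which is the simplest factorization one could use; the paper's tensor network is meant to encode richer topology than this.

```python
from itertools import product
from math import prod

# Hypothetical toy supernet: 3 edges, each with its own candidate operations.
EDGE_CHOICES = [
    ["conv3x3", "conv5x5", "skip"],
    ["conv3x3", "skip"],
    ["maxpool", "avgpool", "skip"],
]

# Made-up per-operation scores standing in for measured performance.
OP_SCORE = {"conv3x3": 0.3, "conv5x5": 0.25, "maxpool": 0.2,
            "avgpool": 0.15, "skip": 0.05}


def toy_score(subgraph):
    """Placeholder for the validation performance of one subgraph."""
    return sum(OP_SCORE[op] for op in subgraph)


def all_index_tuples(edge_choices):
    """Enumerate every tensor index (i_1, ..., i_T), one choice per edge."""
    return product(*(range(len(choices)) for choices in edge_choices))


def decode(index_tuple, edge_choices):
    """Map a tensor index to the subgraph (tuple of operations) it selects."""
    return tuple(choices[i] for choices, i in zip(edge_choices, index_tuple))


def expected_score(thetas, edge_choices):
    """Stochastic view: expected score when edge t picks choice i
    with probability thetas[t][i], independently of the other edges."""
    total = 0.0
    for idx in all_index_tuples(edge_choices):
        probability = prod(theta[i] for theta, i in zip(thetas, idx))
        total += probability * toy_score(decode(idx, edge_choices))
    return total


def best_subgraph(edge_choices):
    """Deterministic view: the single tensor entry with the highest score."""
    best = max(all_index_tuples(edge_choices),
               key=lambda idx: toy_score(decode(idx, edge_choices)))
    return decode(best, edge_choices)


# Uniform distribution over the choices of each edge.
uniform = [[1.0 / len(c)] * len(c) for c in EDGE_CHOICES]
print(expected_score(uniform, EDGE_CHOICES))
print(best_subgraph(EDGE_CHOICES))
```

Exhaustive enumeration is only feasible here because the toy tensor has 3 × 2 × 3 = 18 entries. The number of entries grows exponentially with the number of edges, which is why a compact encoding of the tensor and an efficient search algorithm are needed in practice.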