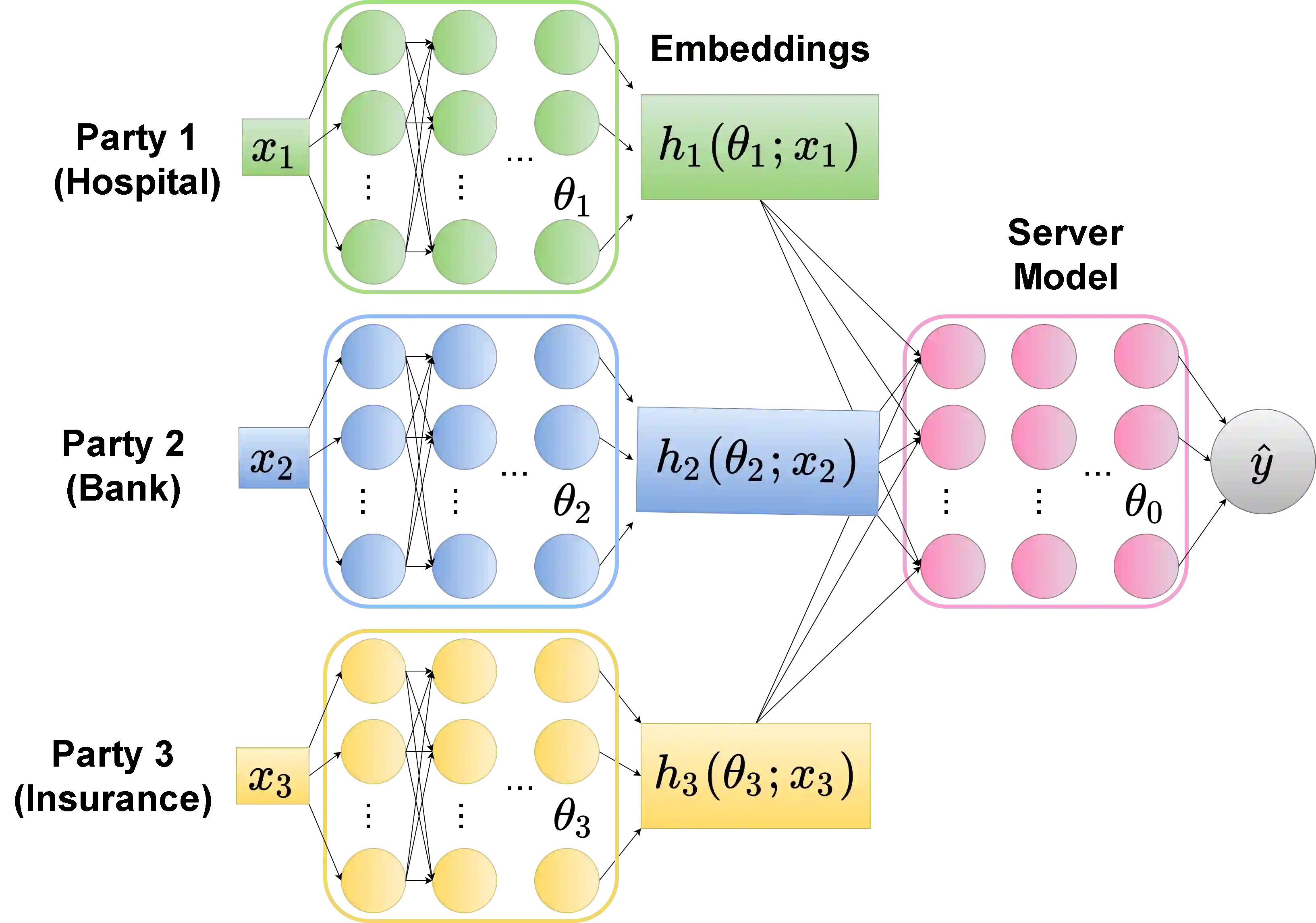

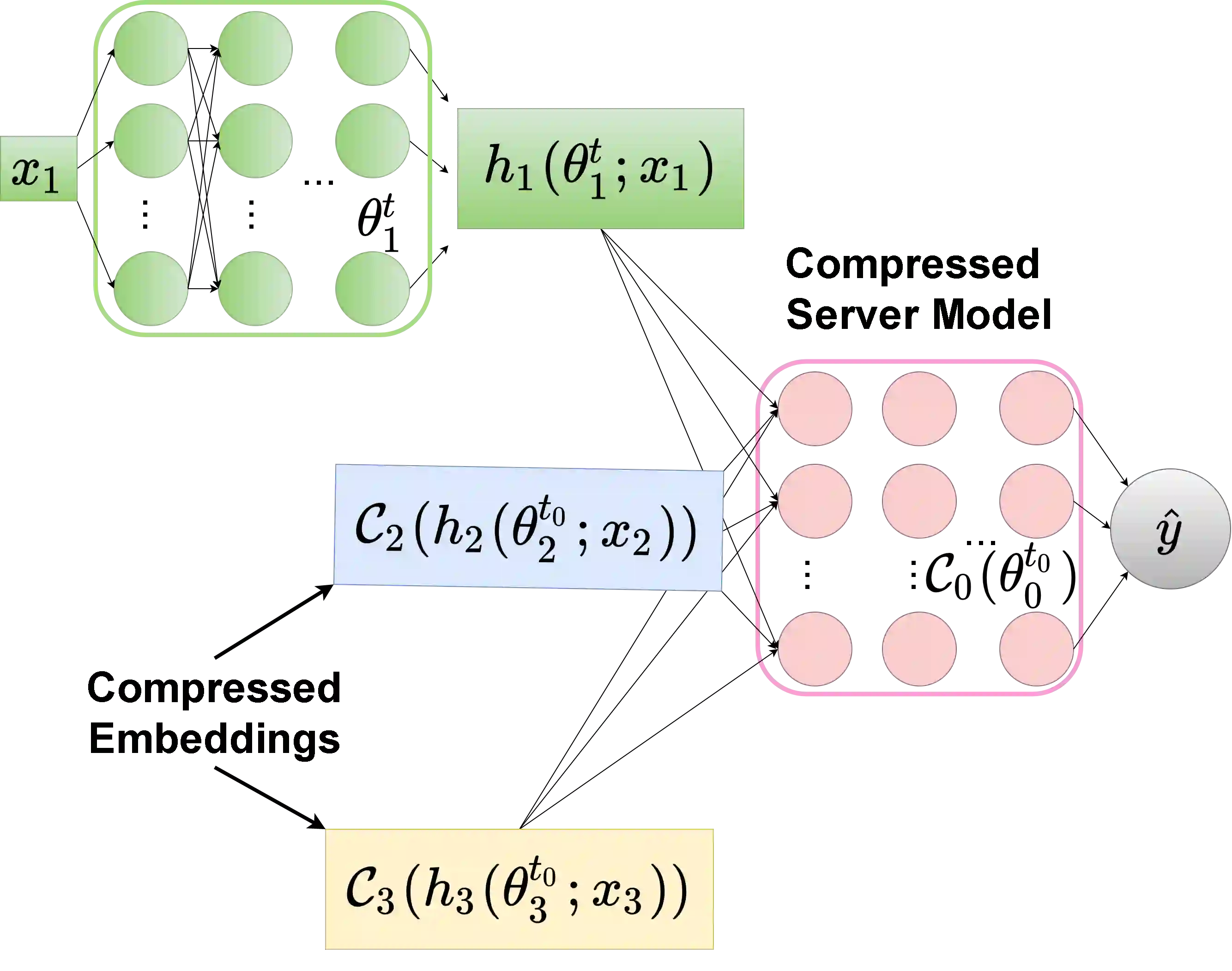

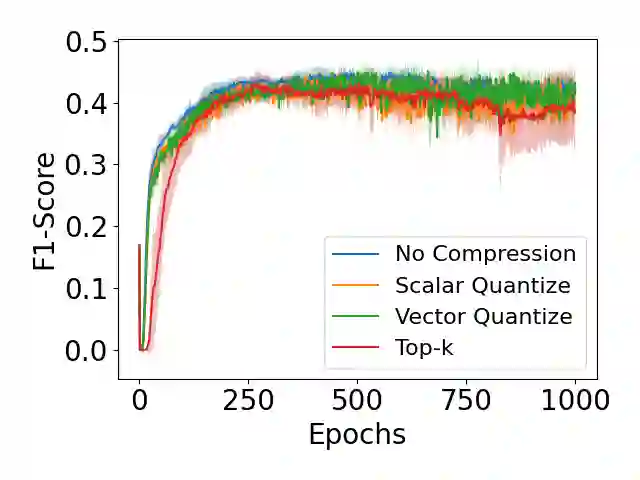

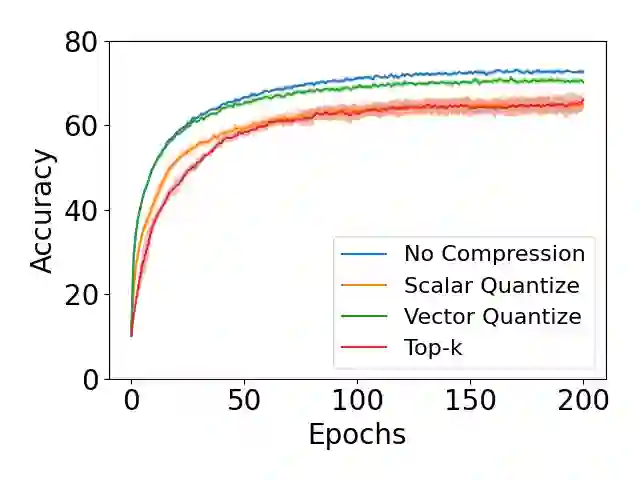

We propose Compressed Vertical Federated Learning (C-VFL) for communication-efficient training on vertically partitioned data. In C-VFL, a server and multiple parties collaboratively train a model on their respective features, running several local iterations and periodically sharing compressed intermediate results. Our work provides the first theoretical analysis of the effect of message compression on distributed training over vertically partitioned data. We prove convergence of non-convex objectives at a rate of $O(\frac{1}{\sqrt{T}})$ when the compression error is bounded over the course of training. We provide specific requirements for convergence with common compression techniques, such as quantization and top-$k$ sparsification. Finally, we show experimentally that compression can reduce communication by over $90\%$ without a significant decrease in accuracy relative to VFL without compression.
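To make the two compression techniques named above concrete, the following is a minimal NumPy sketch of top-$k$ sparsification and uniform quantization applied to an intermediate result (for instance, an embedding a party would send). The function names, the min-max quantization grid, and the squared-norm definition of compression error are illustrative assumptions, not the exact compressors or error measure used in C-VFL.

```python
import numpy as np


def top_k_sparsify(x: np.ndarray, k: int) -> np.ndarray:
    """Keep the k largest-magnitude entries of x and zero out the rest."""
    flat = x.ravel()
    if k >= flat.size:
        return x.copy()
    out = np.zeros_like(flat)
    if k <= 0:
        return out.reshape(x.shape)
    # argpartition places the indices of the k largest magnitudes last.
    idx = np.argpartition(np.abs(flat), -k)[-k:]
    out[idx] = flat[idx]
    return out.reshape(x.shape)


def uniform_quantize(x: np.ndarray, bits: int) -> np.ndarray:
    """Round each entry to the nearest of 2**bits evenly spaced levels
    between min(x) and max(x) (an assumed, simple quantization grid)."""
    lo, hi = float(x.min()), float(x.max())
    if hi == lo:
        return x.copy()
    step = (hi - lo) / (2 ** bits - 1)
    return lo + np.round((x - lo) / step) * step


def compression_error(x: np.ndarray, compressed: np.ndarray) -> float:
    """Squared Euclidean distance between a message and its compressed form
    (assumed here as the measure of compression error)."""
    return float(np.sum((x - compressed) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    embedding = rng.standard_normal((8, 16))  # hypothetical party embedding
    for k in (16, 64, 128):
        err = compression_error(embedding, top_k_sparsify(embedding, k))
        print(f"top-{k}: error = {err:.4f}")
    for bits in (2, 4, 8):
        err = compression_error(embedding, uniform_quantize(embedding, bits))
        print(f"{bits}-bit quantization: error = {err:.4f}")
```

In this sketch, a larger `k` or more `bits` lowers the error at the cost of a larger message, which is the trade-off that the bounded-compression-error condition constrains.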
