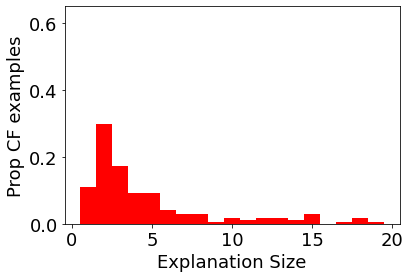

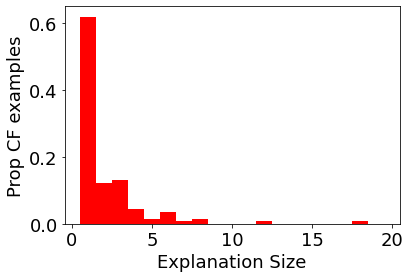

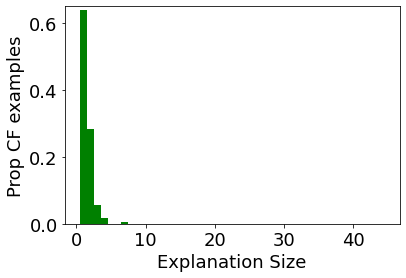

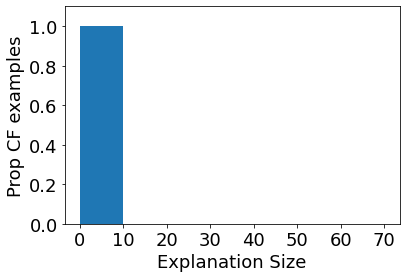

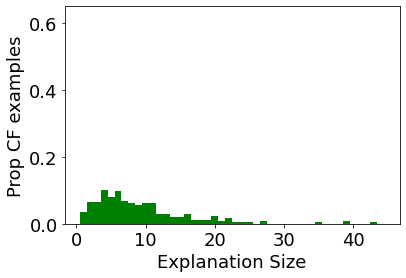

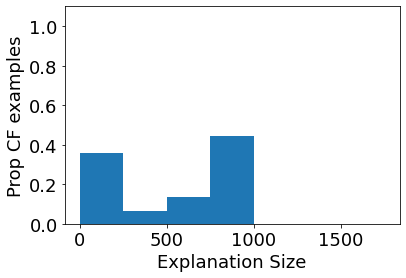

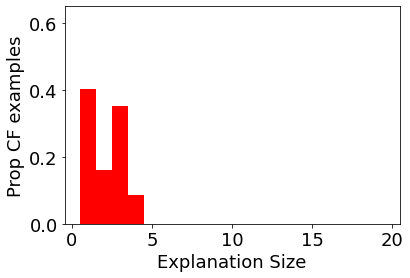

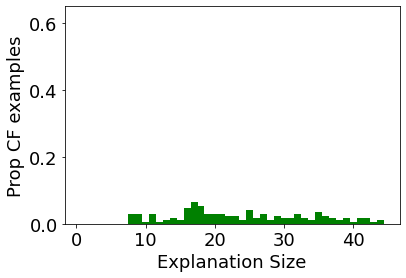

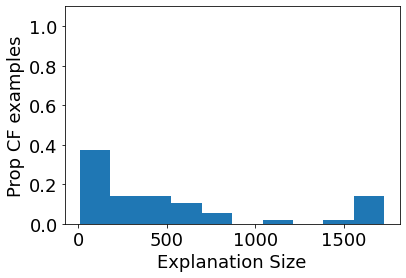

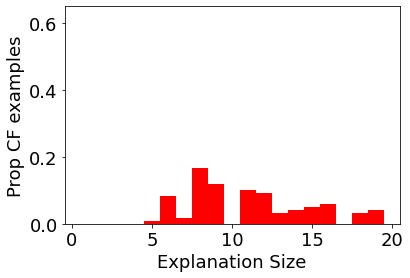

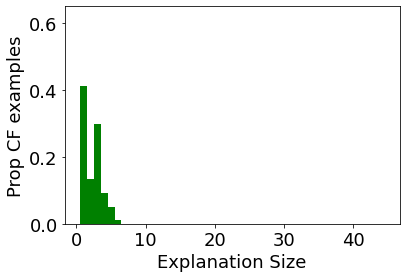

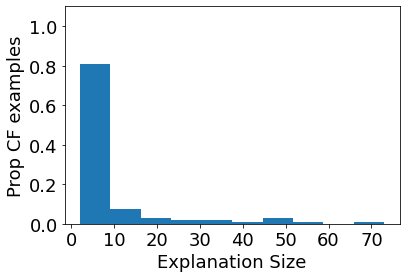

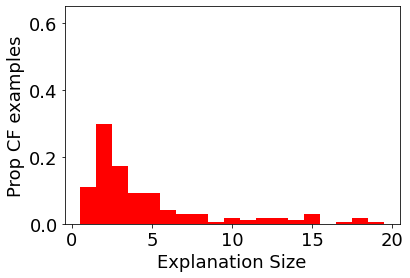

Graph neural networks (GNNs) have shown increasing promise in real-world applications, which has increased interest in understanding their predictions. However, existing methods for explaining predictions from GNNs do not provide an opportunity for recourse: given a prediction for a particular instance, we want to understand how the prediction can be changed. We propose CF-GNNExplainer: the first method for generating counterfactual explanations for GNNs, i.e., the minimal perturbations to the input graph data such that the prediction changes. Using only edge deletions, we find that we are able to generate counterfactual examples for the majority of instances across three widely used datasets for GNN explanations, while removing fewer than 3 edges on average, with at least 94% accuracy. This indicates that CF-GNNExplainer primarily removes edges that are crucial for the original predictions, resulting in minimal counterfactual examples.

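To make the idea of a counterfactual by edge deletion concrete, here is a minimal sketch in Python. It is not the CF-GNNExplainer algorithm itself: it replaces the method with a brute-force search over small sets of edges. The one-layer GCN-style classifier, the toy graph, the identity weight matrix, and the function names `gcn_predict` and `minimal_edge_deletion_cf` are all assumptions made for illustration.

```python
from itertools import combinations

import numpy as np


def gcn_predict(adj, feats, weight, node):
    """Predicted class of `node` under a one-layer GCN-style model (assumed for illustration).

    Logits are D^-1/2 (A + I) D^-1/2 X W, with self-loops added to the adjacency.
    """
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    logits = norm @ feats @ weight
    return int(np.argmax(logits[node]))


def minimal_edge_deletion_cf(adj, feats, weight, node, max_deletions=3):
    """Brute-force stand-in: find the smallest edge set whose removal flips the prediction."""
    n = adj.shape[0]
    original = gcn_predict(adj, feats, weight, node)
    edges = [(i, j) for i in range(n) for j in range(i + 1, n) if adj[i, j]]
    # Try deletion sets in order of increasing size, so the first hit is minimal.
    for k in range(1, max_deletions + 1):
        for subset in combinations(edges, k):
            perturbed = adj.copy()
            for i, j in subset:
                perturbed[i, j] = perturbed[j, i] = 0.0
            new = gcn_predict(perturbed, feats, weight, node)
            if new != original:
                return list(subset), original, new
    return None  # no counterfactual within the deletion budget


# Toy star graph: node 0 is linked to node 1 (class-0 features)
# and to nodes 2, 3, 4 (class-1 features).
adj = np.zeros((5, 5))
for i, j in [(0, 1), (0, 2), (0, 3), (0, 4)]:
    adj[i, j] = adj[j, i] = 1.0
feats = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0]])
weight = np.eye(2)  # hypothetical fixed weights, not a trained model

deleted, before, after = minimal_edge_deletion_cf(adj, feats, weight, node=0)
print(deleted, before, after)
```

In this toy graph, no single deletion changes the prediction for node 0, but removing two of its three class-1 neighbours does, so the search returns a two-edge counterfactual. Exhaustive search grows combinatorially with the number of edges; it is used here only because the graph is tiny.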