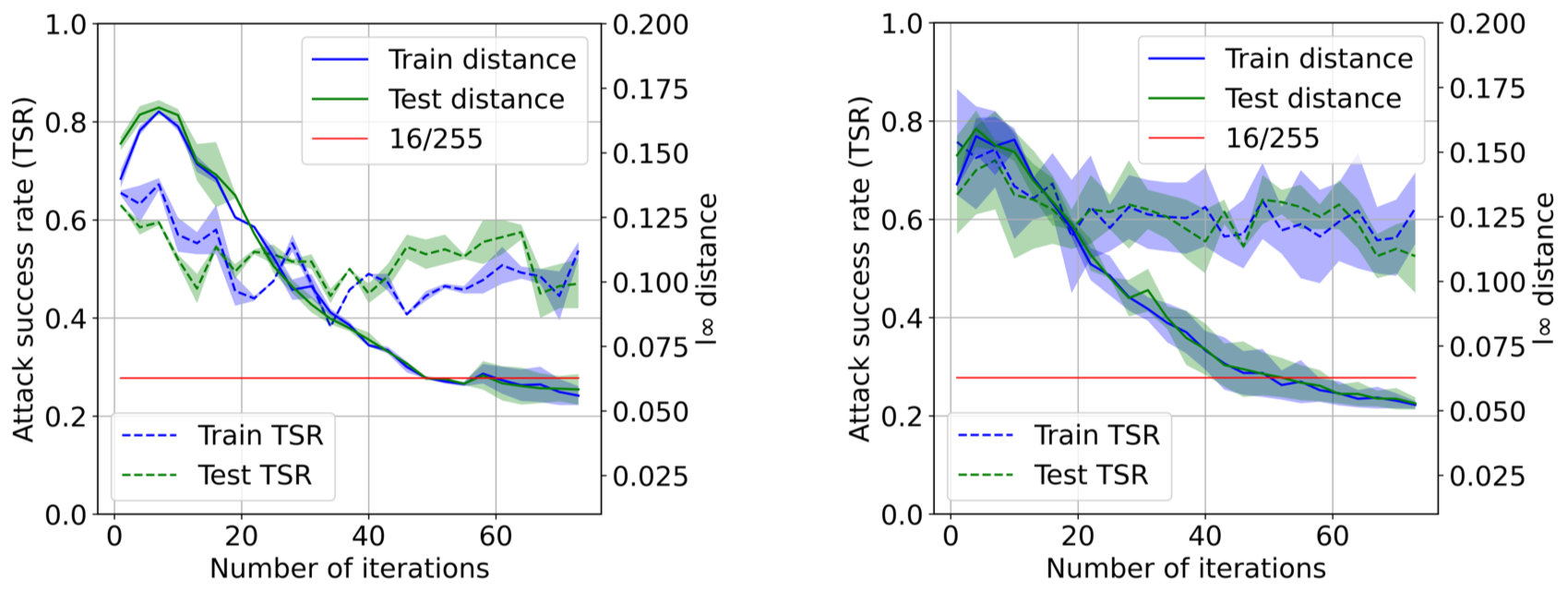

The vulnerability of high-performance machine learning models poses a security risk in applications with real-world consequences. Research on adversarial attacks helps both to guide the development of machine learning models and to find targeted defenses. However, most adversarial attacks today rely on gradient or logit information from the model to generate adversarial perturbations. Work in the more realistic setting of decision-based attacks, which generate adversarial perturbations solely by observing the output label of the targeted model, is still relatively rare and mostly uses gradient-estimation strategies. In this work, we propose a pixel-wise decision-based attack algorithm that uses reinforcement learning to find a distribution of adversarial perturbations. We call this method Decision-based Black-box Attack with Reinforcement learning (DBAR). Experiments show that the proposed approach outperforms state-of-the-art decision-based attacks, achieving a higher attack success rate and greater transferability.
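The abstract does not specify DBAR's policy, reward, or update rule, so the following is only a generic sketch of the idea it names: learning a per-pixel perturbation distribution from hard-label feedback alone. It assumes a Gaussian policy with a learned mean per pixel and a plain REINFORCE update. The toy classifier `black_box_label`, the function `reinforce_attack`, and every hyperparameter are hypothetical and are not taken from the paper.

```python
import random


def black_box_label(x):
    """Hypothetical target model: exposes only a hard label, never scores."""
    return 1 if sum(x) > 0.0 else 0


def reinforce_attack(x, true_label, query, iters=200, samples=20,
                     sigma=0.5, lr=0.05, norm_weight=0.05, seed=0):
    """Learn the mean of a per-pixel Gaussian perturbation distribution.

    Only `query(x_adv)`, which returns a label, is used as feedback.
    This is an assumed REINFORCE setup, not the DBAR algorithm itself.
    """
    rng = random.Random(seed)
    mu = [0.0] * len(x)      # one learned mean per pixel
    queries = 0
    for _ in range(iters):
        batch = []
        for _ in range(samples):
            # Sample a perturbation from the current distribution.
            delta = [rng.gauss(m, sigma) for m in mu]
            label = query([xi + di for xi, di in zip(x, delta)])
            queries += 1
            # Reward misclassification, discounted by perturbation size.
            l2 = sum(d * d for d in delta) ** 0.5
            reward = (1.0 - norm_weight * l2) if label != true_label else 0.0
            batch.append((delta, reward))
        # Mean reward of the batch serves as a variance-reducing baseline.
        baseline = sum(r for _, r in batch) / samples
        for i in range(len(mu)):
            # Score-function gradient of the Gaussian log-density w.r.t. mu[i].
            grad = sum((r - baseline) * (d[i] - mu[i]) / sigma ** 2
                       for d, r in batch) / samples
            mu[i] += lr * grad
    return mu, queries
```

A call such as `reinforce_attack([0.5] * 4, 1, black_box_label)` returns the learned per-pixel means together with the number of label queries spent, which is the usual cost measure for decision-based attacks.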
