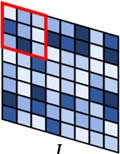

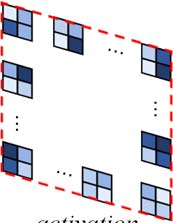

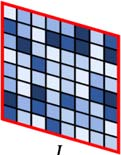

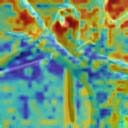

Efficient custom pooling techniques that aggressively trim the spatial dimensions of feature maps, and thereby reduce inference compute and memory footprint, have recently gained significant traction for resource-constrained computer vision applications. However, prior pooling methods extract only the local context of the activation maps, which limits their effectiveness. In contrast, we propose a novel non-local self-attentive pooling method that can be used as a drop-in replacement for standard pooling layers such as max/average pooling or strided convolution. The proposed self-attention module uses patch embedding, multi-head self-attention, and spatial-channel restoration, followed by sigmoid activation and exponential soft-max. This mechanism efficiently aggregates dependencies between non-local activation patches during down-sampling. Extensive experiments on standard object classification and detection tasks with various convolutional neural network (CNN) architectures demonstrate the superiority of our method over state-of-the-art (SOTA) pooling techniques. In particular, we surpass the test accuracy of existing pooling techniques on different variants of MobileNet-V2 on ImageNet by an average of 1.2%. With aggressive down-sampling of the activation maps in the initial layers (providing up to 22x reduction in memory consumption), our approach achieves 1.43% higher test accuracy than SOTA techniques with iso-memory footprints. Because the initial activation maps consume a significant amount of on-chip memory for the high-resolution images required by complex vision tasks, this enables deployment of our models on memory-constrained devices such as micro-controllers without a significant loss of accuracy. Our proposed pooling method also leverages the idea of channel pruning to further reduce memory footprints.
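As a rough illustration of the pipeline named above, the following NumPy sketch chains the five stages on a single (C, H, W) feature map. It is not the authors' implementation and rests on stated assumptions: random projection matrices stand in for learned weights, "spatial-channel restoration" is approximated by simply inverting the patch embedding, and the function `self_attentive_pool` and its parameters are hypothetical. The last stage treats the sigmoid outputs as logits of a soft-max over each stride x stride pooling window, so every output is a convex combination of the activations in its window.

```python
import numpy as np


def softmax(z, axis=-1):
    # Numerically stable soft-max along one axis.
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attentive_pool(x, patch=2, heads=2, stride=2, rng=None):
    """Hypothetical sketch: x has shape (C, H, W); returns (C, H//stride, W//stride)."""
    C, H, W = x.shape
    assert H % patch == 0 and W % patch == 0, "H and W must be divisible by patch"
    rng = np.random.default_rng(0) if rng is None else rng

    # 1. Patch embedding: flatten non-overlapping patch x patch blocks into tokens.
    ph, pw = H // patch, W // patch
    tokens = (x.reshape(C, ph, patch, pw, patch)
                .transpose(1, 3, 0, 2, 4)
                .reshape(ph * pw, C * patch * patch))
    D = tokens.shape[1]
    assert D % heads == 0, "token width must be divisible by heads"

    # Assumption: random matrices stand in for learned Q/K/V projections.
    Wq, Wk, Wv = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(3))
    q, k, v = tokens @ Wq, tokens @ Wk, tokens @ Wv

    # 2. Multi-head self-attention over all patches (non-local context).
    dh = D // heads
    attended = np.empty_like(v)
    for h in range(heads):
        s = slice(h * dh, (h + 1) * dh)
        attn = softmax(q[:, s] @ k[:, s].T / np.sqrt(dh), axis=-1)
        attended[:, s] = attn @ v[:, s]

    # 3. Simplified restoration: invert the patch embedding back to (C, H, W).
    restored = (attended.reshape(ph, pw, C, patch, patch)
                        .transpose(2, 0, 3, 1, 4)
                        .reshape(C, H, W))

    # 4. Sigmoid (tanh form avoids overflow), then exponentiate the result.
    weights = np.exp(0.5 * (1.0 + np.tanh(0.5 * restored)))

    # 5. Exponential soft-max pooling: weighted average inside each window.
    oh, ow = H // stride, W // stride

    def windows(a):
        return a[:, :oh * stride, :ow * stride].reshape(C, oh, stride, ow, stride)

    num = windows(weights * x).sum(axis=(2, 4))
    den = windows(weights).sum(axis=(2, 4))
    return num / den


x = np.random.default_rng(1).standard_normal((4, 8, 8))
print(self_attentive_pool(x).shape)  # (4, 4, 4)
```

Because the weights are strictly positive, the pooled value always lies between the minimum and maximum of its window, so the layer behaves like a learned interpolation between average and max pooling whose weights depend on context from the whole feature map rather than the window alone.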

翻译:能够大幅缩小地貌图尺寸从而降低资源受限制的计算机视觉应用程序的计算率和存储率的测算率和记忆足迹的自定义自定义共享技术最近获得显著的牵引力。然而,先前的自控工作只提取启动地图的本地背景,限制了其有效性。相反,我们提议了一种新的非本地自控集合方法,可以用来作为标准集合层的低位替换,例如最大/平均集合或摇晃。拟议的自控模块使用补丁嵌入、多头自控和空间通道修复技术,随后是模拟存储启动和快速软体。这种自控机制在下取样期间有效地综合了非本地启动地图的补补补补。在各种进式神经网络(CNN)结构方面进行的广泛实验表明,我们拟议的机制优于最新(SOATA)集装集成技术,特别是,我们超额测试了现有在移动式网络-存储图像的更高变异端存储技术的精度,在初始图像网络上实现了大幅升级的缩缩缩缩缩缩缩缩缩略图,因为我们在初始的缩略微缩缩缩缩缩缩缩缩图中要达到了比例。