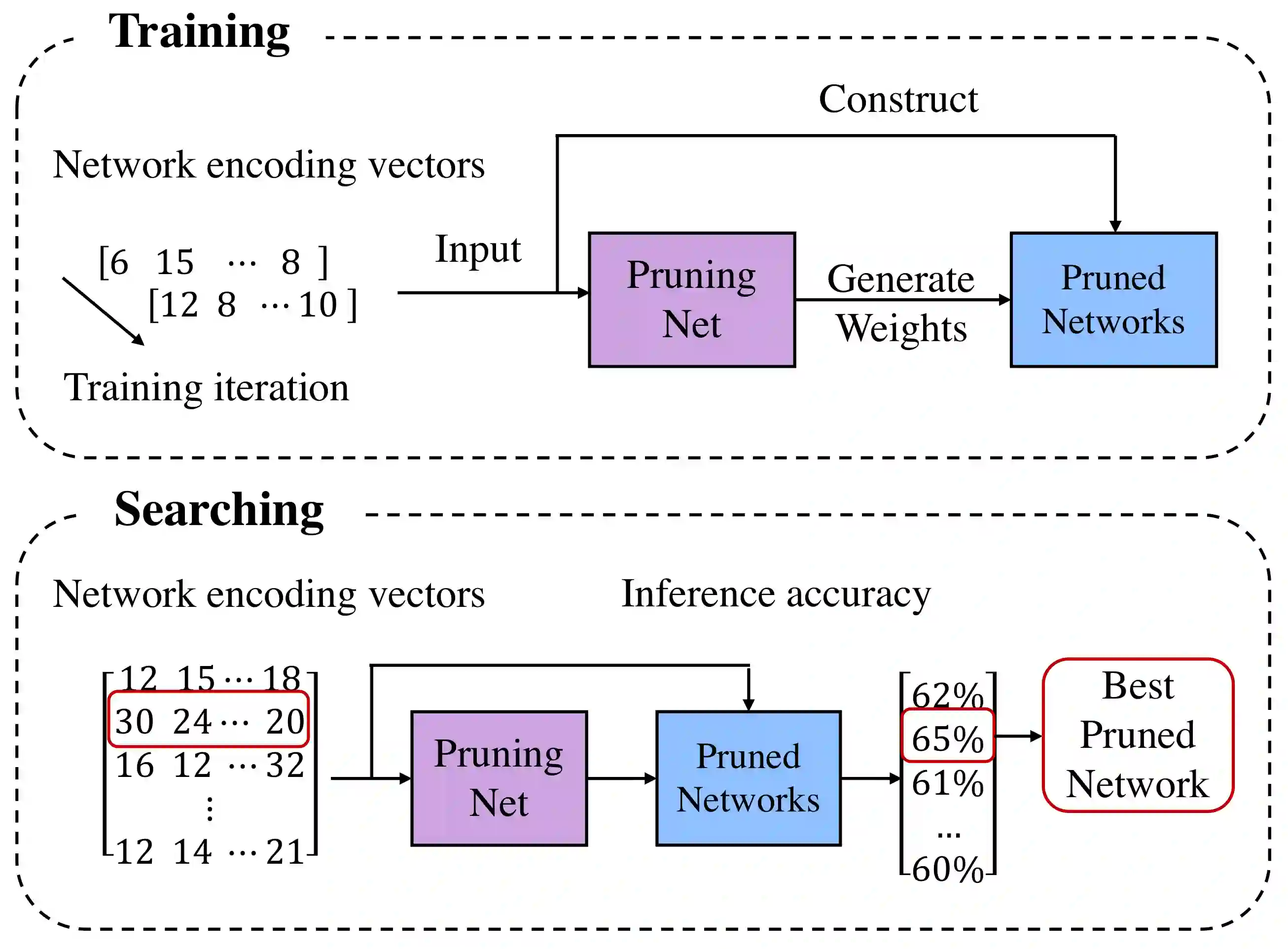

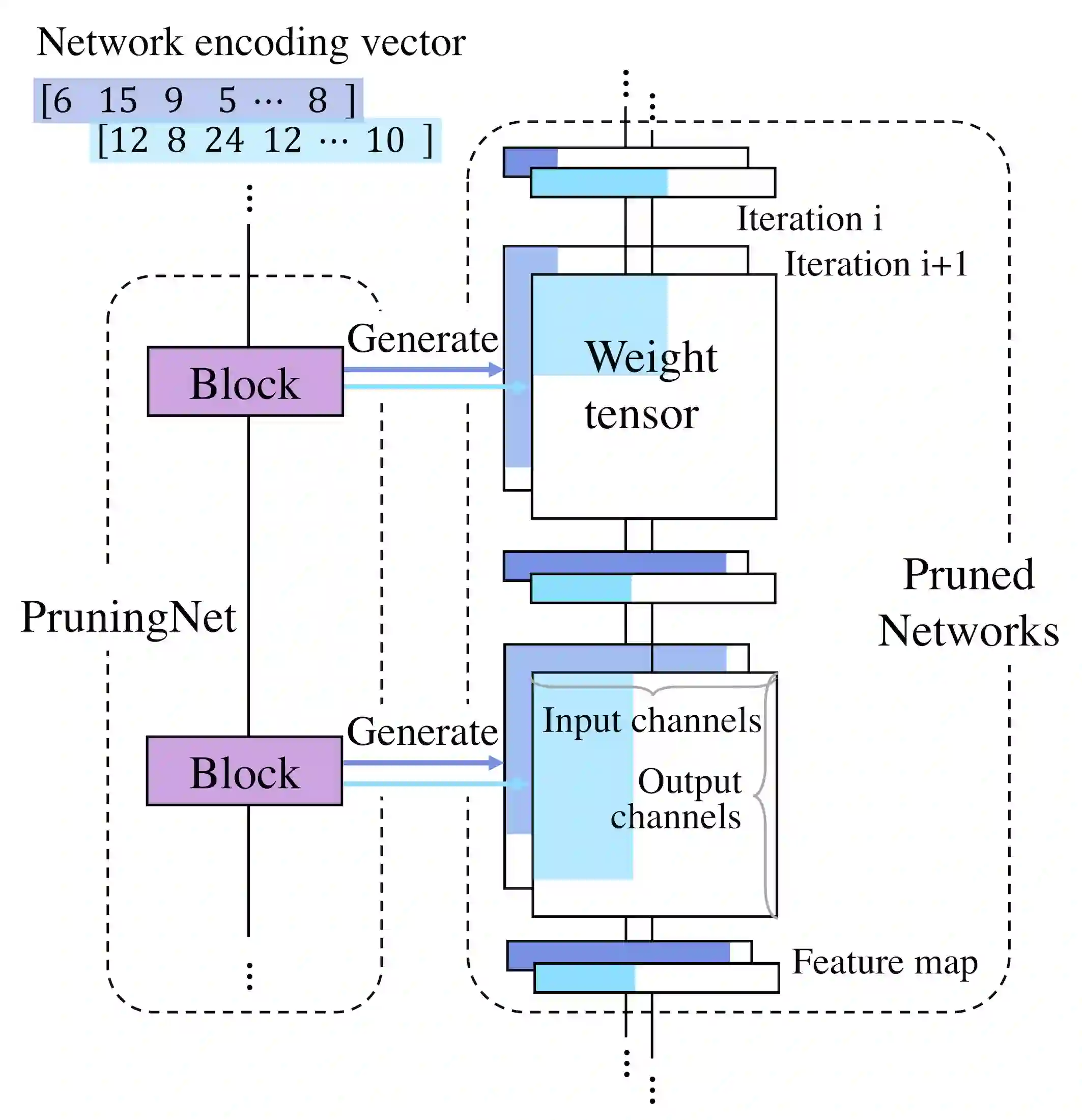

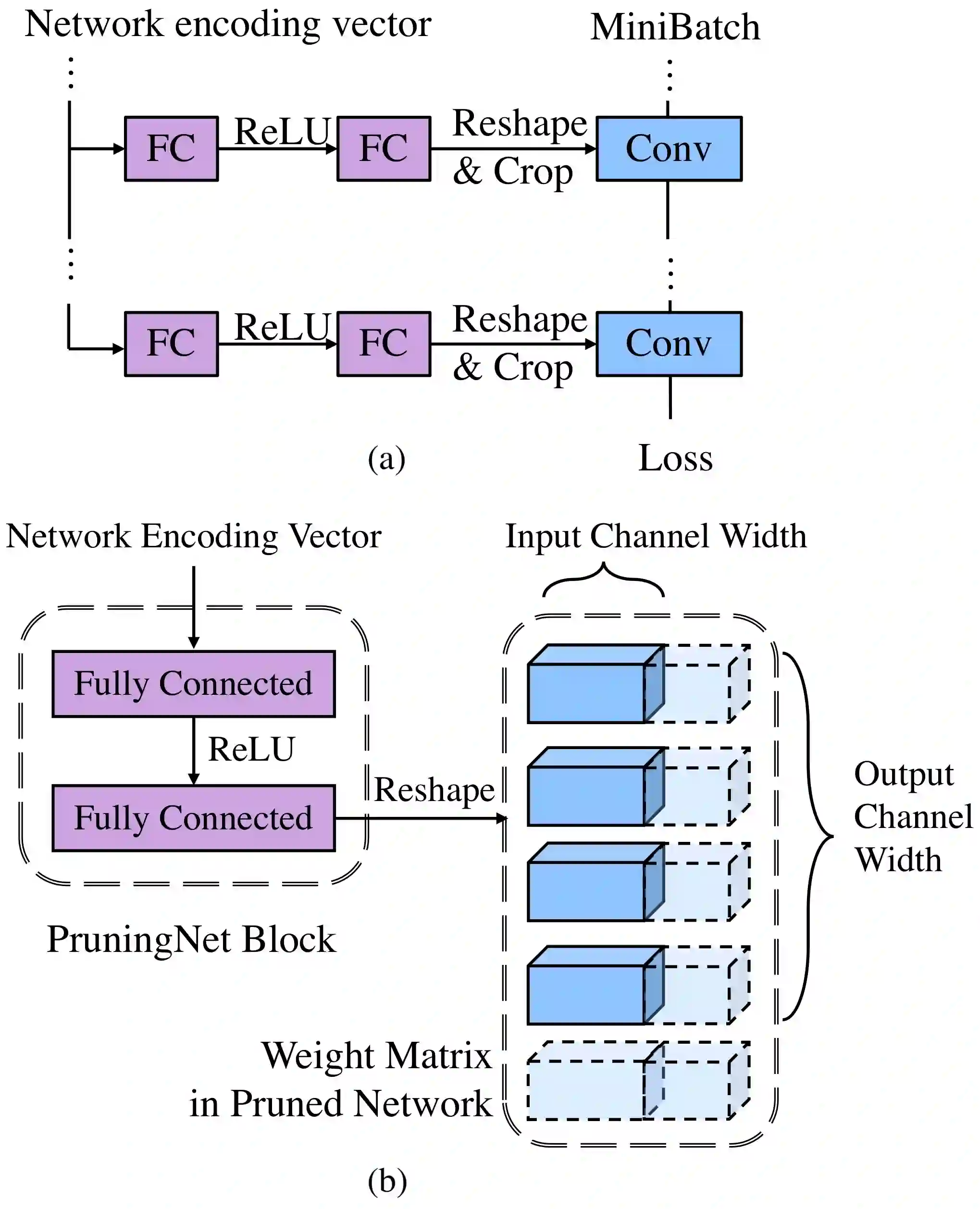

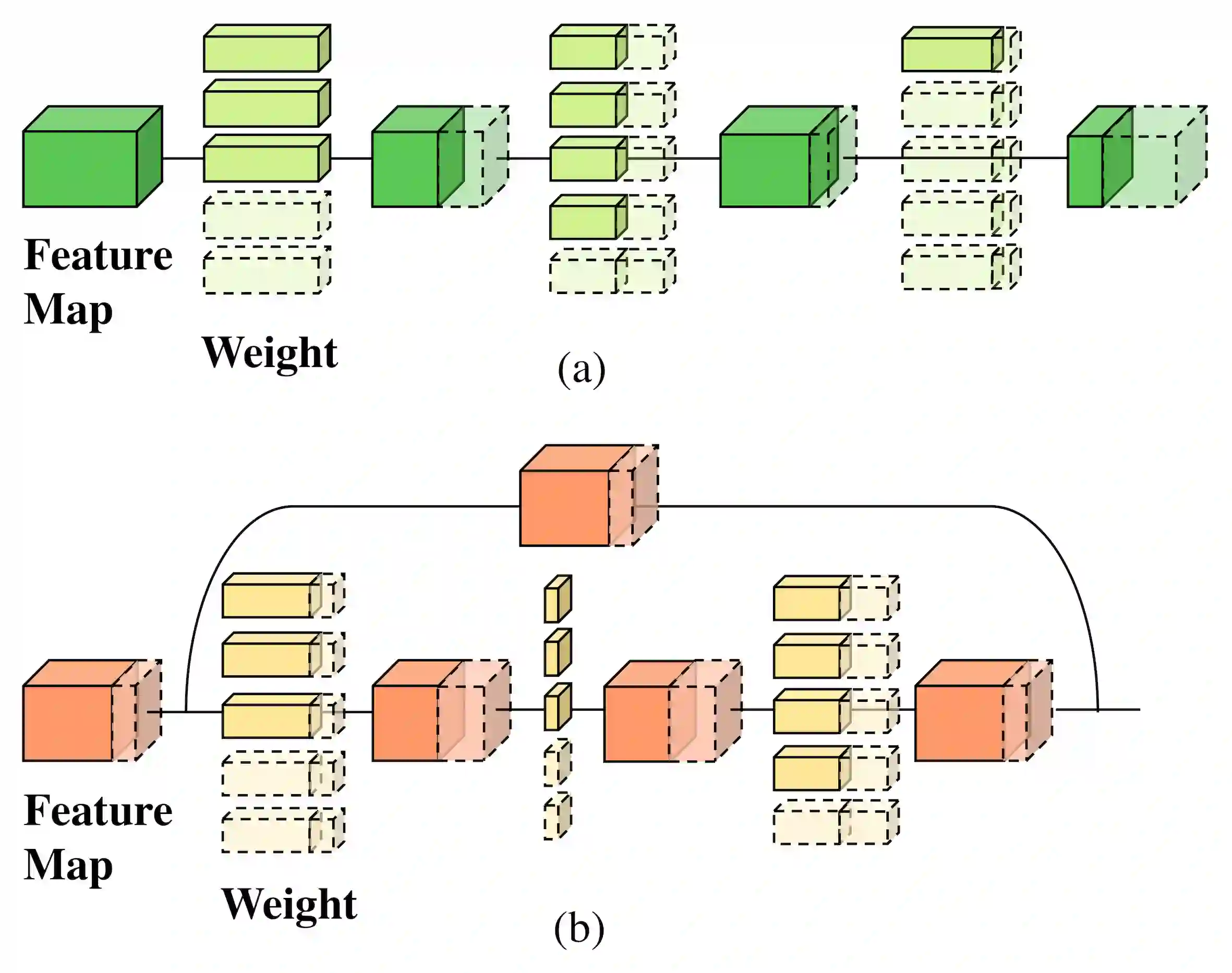

In this paper, we propose a novel meta-learning approach for automatic channel pruning of very deep neural networks. We first train a PruningNet, a kind of meta network, which is able to generate weight parameters for any pruned structure given the target network. We use a simple stochastic structure sampling method for training the PruningNet. Then, we apply an evolutionary procedure to search for well-performing pruned networks. The search is highly efficient because the weights are directly generated by the trained PruningNet and we do not need any fine-tuning at search time. With a single PruningNet trained for the target network, we can search for various pruned networks under different constraints with little human participation. Compared with state-of-the-art pruning methods, we demonstrate superior performance on MobileNet V1/V2 and ResNet. Code is available at https://github.com/liuzechun/MetaPruning.
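The search stage described above can be illustrated with a minimal sketch. This is not the authors' implementation: the layer widths, the allowed width ratios, the cost proxy `flops_proxy`, and the scoring function `proxy_accuracy` are all hypothetical stand-ins. In particular, `proxy_accuracy` takes the place of evaluating a candidate structure with weights generated by the trained PruningNet, which is what makes the real search cheap. The sketch only shows the general shape of an evolutionary search over per-layer channel encodings under a hard cost constraint.

```python
import random

# Hypothetical toy target network: full channel count of each layer.
FULL_CHANNELS = [32, 64, 128, 256]
# Assumption: each layer may keep one of these fractions of its channels.
RATIOS = [0.25, 0.5, 0.75, 1.0]


def flops_proxy(encoding):
    """Toy cost model: sum of in_channels * out_channels over the layers."""
    widths = [int(c * r) for c, r in zip(FULL_CHANNELS, encoding)]
    return sum(a * b for a, b in zip([3] + widths[:-1], widths))


def proxy_accuracy(encoding):
    """Stand-in score (NOT a real evaluation).

    In the approach described above, this step would instead evaluate the
    pruned network using weights generated by the trained PruningNet.
    """
    return sum(r ** 0.5 for r in encoding) / len(encoding)


def random_encoding(rng):
    return tuple(rng.choice(RATIOS) for _ in FULL_CHANNELS)


def mutate(encoding, rng, prob=0.25):
    """Resample each layer's ratio independently with probability `prob`."""
    return tuple(rng.choice(RATIOS) if rng.random() < prob else r
                 for r in encoding)


def crossover(a, b, rng):
    """Pick each layer's ratio from one of the two parents."""
    return tuple(rng.choice(pair) for pair in zip(a, b))


def sample_feasible(make, budget, max_tries=1000):
    """Call `make` until it yields a candidate within the cost budget."""
    for _ in range(max_tries):
        candidate = make()
        if flops_proxy(candidate) <= budget:
            return candidate
    raise RuntimeError("no feasible candidate found; budget may be too small")


def evolutionary_search(budget, generations=20, population=20, top_k=5, seed=0):
    rng = random.Random(seed)
    pop = [sample_feasible(lambda: random_encoding(rng), budget)
           for _ in range(population)]
    for _ in range(generations):
        # Keep the top-k candidates and refill the rest from them.
        pop.sort(key=proxy_accuracy, reverse=True)
        parents = pop[:top_k]
        children = []
        while len(children) < population - top_k:
            if rng.random() < 0.5:
                make = lambda: mutate(rng.choice(parents), rng)
            else:
                make = lambda: crossover(rng.choice(parents),
                                         rng.choice(parents), rng)
            children.append(sample_feasible(make, budget))
        pop = parents + children
    return max(pop, key=proxy_accuracy)


if __name__ == "__main__":
    full_cost = flops_proxy((1.0, 1.0, 1.0, 1.0))
    best = evolutionary_search(budget=0.5 * full_cost)
    print("best encoding:", best, "cost:", flops_proxy(best))
```

Candidates that exceed the budget are rejected before scoring, so the constraint is enforced exactly rather than traded off through a penalty term. Changing `budget` and rerunning the search corresponds to looking for a different pruned network without retraining anything, which mirrors the "single PruningNet, many constraints" point made in the abstract.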
