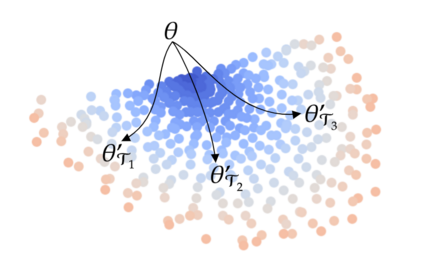

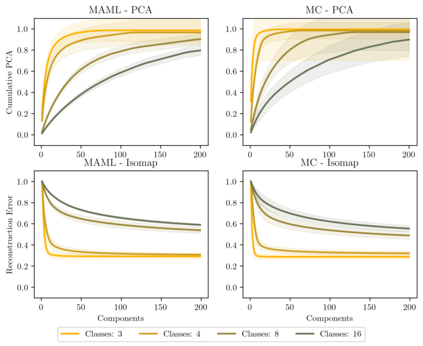

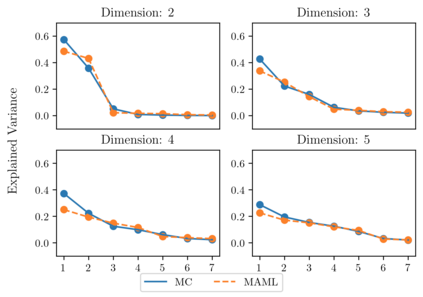

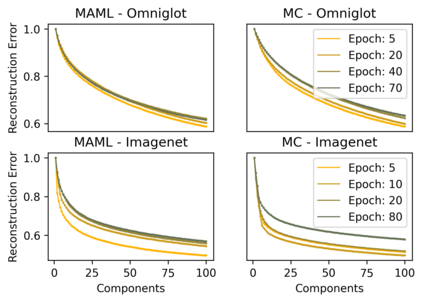

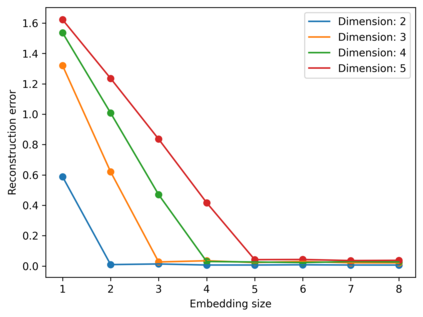

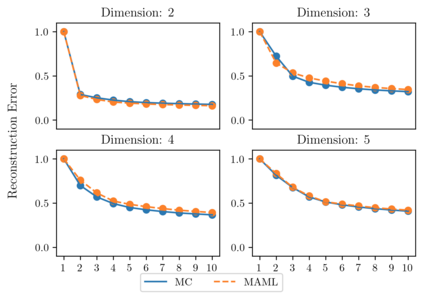

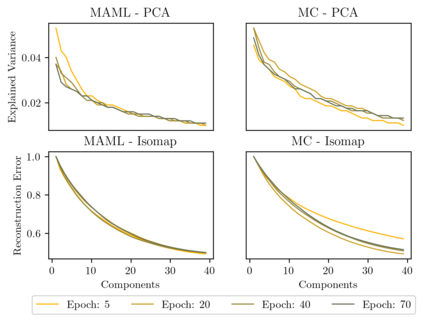

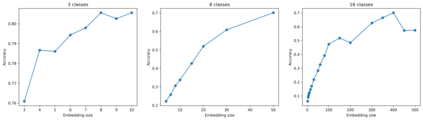

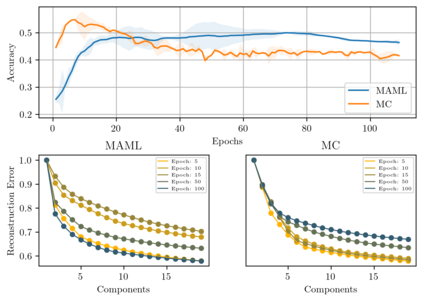

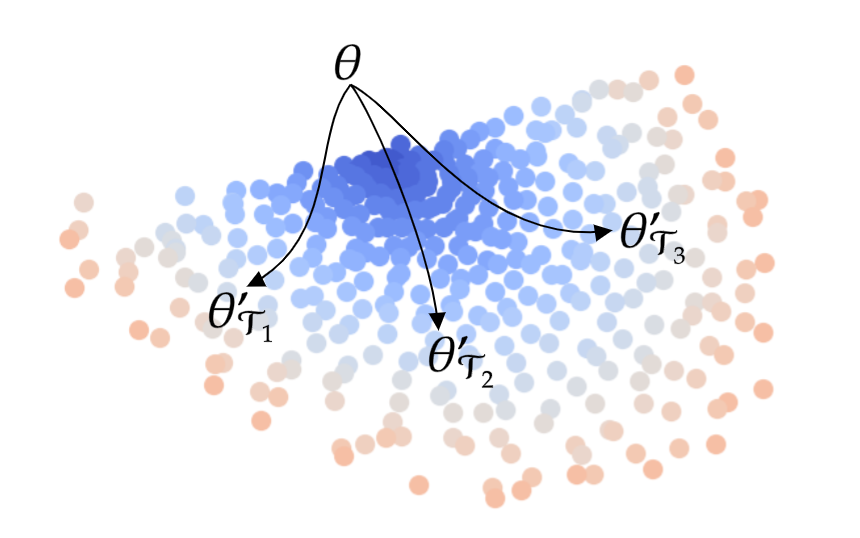

In this work we analyze the distribution of the post-adaptation parameters of Gradient-Based Meta-Learning (GBML) methods. Previous work has observed that, in image classification, this adaptation takes place only in the last layers of the network. We propose the more general notion that parameters are updated over a low-dimensional *subspace* whose dimensionality matches that of the task space, and we show that this also holds for regression. Furthermore, the induced subspace structure provides a method to estimate the intrinsic dimension of the task space of common few-shot learning datasets.
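As a toy illustration of the subspace claim, the sketch below works under assumptions that are ours rather than the paper's. It uses a linear model on fixed random cosine features, a single inner-loop gradient step from a shared initialization, and sinusoid regression tasks y = a·sin(x + b), whose task space is two-dimensional (amplitude a, phase b). It collects the adapted parameters for many tasks and counts the non-negligible singular values of the centered parameter matrix. In this linear setting the one-step update is affine in the targets, so the count equals the task-space dimension exactly. With a deep network one would instead inspect how many singular values carry most of the variance. The names `adapt` and `estimate_dim`, the feature map, the step size `alpha`, and the tolerance are all hypothetical choices for this sketch.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy setup (an assumption, not the paper's experiment):
# a linear model on fixed random cosine features, one inner-loop step.
n_points, n_features, n_tasks = 50, 40, 200
x = np.linspace(-np.pi, np.pi, n_points)
W = rng.normal(size=(1, n_features))
c = rng.uniform(0, 2 * np.pi, size=n_features)
X = np.cos(x[:, None] @ W + c)  # feature matrix, shape (n_points, n_features)

theta0 = rng.normal(size=n_features)  # shared meta-initialization
alpha = 0.1                           # inner-loop step size


def adapt(y):
    """One gradient step on mean squared error, starting from theta0."""
    grad = X.T @ (X @ theta0 - y) / n_points
    return theta0 - alpha * grad


# Sinusoid tasks y = a * sin(x + b): a two-dimensional task space (a, b).
amps = rng.uniform(0.1, 5.0, size=n_tasks)
phases = rng.uniform(0, np.pi, size=n_tasks)
adapted = np.stack([adapt(a * np.sin(x + b)) for a, b in zip(amps, phases)])


def estimate_dim(params, tol=1e-8):
    """Count singular values of the centered parameters above a relative tolerance."""
    centered = params - params.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)
    return int(np.sum(s > tol * s[0]))


print(estimate_dim(adapted))
```

The centering step removes the task-independent part of the update (the term driven by `theta0` alone), which is why only the target-dependent directions remain.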

翻译:在这项工作中,我们分析了基于梯度的元数据学习方法(GBML)的适应后参数的分布情况。先前的工作注意到,就图像分类而言,这种调整只发生在网络的最后一层。我们提出了更为普遍的概念,即参数是在与任务-空间相同的一个低维/emph{subspace上更新的,并表明这也具有回归性。此外,诱导的子空间结构提供了一种方法,用以估计共同的微小学习数据集任务空间的内在层面。