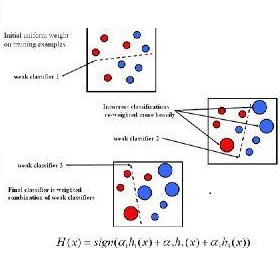

The classic algorithm AdaBoost allows to convert a weak learner, that is an algorithm that produces a hypothesis which is slightly better than chance, into a strong learner, achieving arbitrarily high accuracy when given enough training data. We present a new algorithm that constructs a strong learner from a weak learner but uses less training data than AdaBoost and all other weak to strong learners to achieve the same generalization bounds. A sample complexity lower bound shows that our new algorithm uses the minimum possible amount of training data and is thus optimal. Hence, this work settles the sample complexity of the classic problem of constructing a strong learner from a weak learner.

翻译:经典算法AdaBoost允许将学习能力弱的学习者,即产生比机会略好一点的假设的算法转换成一个强的学习者,在获得足够的培训数据时达到任意高精度。我们提出了一个新的算法,从学习能力弱的学习者中构建一个强健的学习者,但使用比AdaBoost和其他所有弱者更少的培训数据,使学习能力强的学习者达到相同的概括界限。抽样复杂性较低,表明我们的新算法使用最低可能的培训数据量,因此是最佳的。因此,这项工作解决了从学习能力弱的学习者中构建一个强健的学习者这一典型问题的样本复杂性。