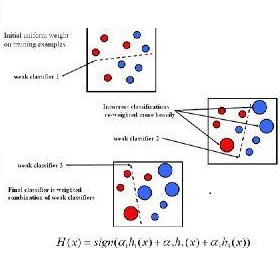

AdaBoost is a classic boosting algorithm for combining multiple inaccurate classifiers produced by a weak learner, to produce a strong learner with arbitrarily high accuracy when given enough training data. Determining the optimal number of samples necessary to obtain a given accuracy of the strong learner, is a basic learning theoretic question. Larsen and Ritzert (NeurIPS'22) recently presented the first provably optimal weak-to-strong learner. However, their algorithm is somewhat complicated and it remains an intriguing question whether the prototypical boosting algorithm AdaBoost also makes optimal use of training samples. In this work, we answer this question in the negative. Concretely, we show that the sample complexity of AdaBoost, and other classic variations thereof, are sub-optimal by at least one logarithmic factor in the desired accuracy of the strong learner.

翻译:AdaBoost是将学习能力薄弱者生产的多种不准确分类方法结合起来的典型推算法,目的是在获得足够的培训数据时产生一个高度精准的强健学习者。确定获得强健学习者特定准确性所需的最佳样本数量是一个基本的学习理论问题。Larsen和Ritzert(NeurIPS'22)最近提出了第一个最优、最优的弱至强学习者。然而,他们的算法有些复杂,仍然是一个令人费解的问题,即原型推算法AdaBoost是否也最佳地利用了培训样本。在这项工作中,我们否定了这个问题。具体地说,我们表明,AdaBoost的样本复杂性和其他典型的变异性在强学习者预期精准性中至少是一个对数因素的亚优。