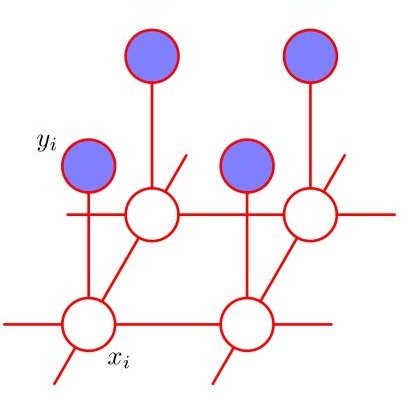

Non-stationarity is a thorny issue in cooperative multi-agent reinforcement learning (MARL). One cause is that agents' policies change during the learning process. Existing works have discussed various consequences of non-stationarity using several different measurement indicators, which makes the objectives of existing algorithms inevitably inconsistent and disparate. In this paper, we introduce a novel notion, the $\delta$-measurement, to explicitly measure the non-stationarity of a policy sequence, which can be further shown to be bounded by the KL-divergence of consecutive joint policies. A straightforward but highly non-trivial approach is to control the joint policies' divergence by imposing a trust-region constraint on the joint policy, but this divergence is difficult to estimate accurately. Although decomposing the joint policy and imposing trust-region constraints on the factorized policies has lower computational complexity, simple policy factorization such as the mean-field approximation leads to larger policy divergence, which we refer to as the trust-region decomposition dilemma. We model the joint policy as a pairwise Markov random field and propose a trust-region decomposition network (TRD-Net) based on message passing to estimate the joint policy divergence more accurately. The Multi-Agent Mirror descent policy algorithm with Trust region decomposition (MAMT) is then established by adaptively adjusting the trust regions of the local policies in an end-to-end manner. MAMT can approximately constrain the divergence of consecutive joint policies to satisfy $\delta$-stationarity and thereby alleviate the non-stationarity problem. Compared with baselines, our method brings noticeable and stable performance improvements in cooperative tasks of different complexity.
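The gap between joint and local divergences can be illustrated with a small numerical sketch. This is not the paper's TRD-Net or MAMT; it only checks a standard identity on hypothetical two-agent, two-action policies at a single state. All variable names and probabilities below are assumptions chosen for illustration. When both joint policies are fully factorized, the joint KL-divergence equals the sum of the local KL-divergences; when the new joint policy is correlated but has the same marginals, the joint KL-divergence is strictly larger than that sum, so constraining only the local policies can underestimate the joint divergence.

```python
import numpy as np

def kl(p, q):
    """KL(p || q) for discrete distributions with full support."""
    p = np.asarray(p, dtype=float).ravel()
    q = np.asarray(q, dtype=float).ravel()
    return float(np.sum(p * (np.log(p) - np.log(q))))

# Hypothetical local policies of two agents at one state (illustrative values).
old_1, new_1 = np.array([0.5, 0.5]), np.array([0.6, 0.4])
old_2, new_2 = np.array([0.5, 0.5]), np.array([0.7, 0.3])

# Fully factorized (mean-field style) joint policies: outer product of locals.
joint_old = np.outer(old_1, old_2)
joint_new = np.outer(new_1, new_2)

kl_joint = kl(joint_new, joint_old)
kl_local_sum = kl(new_1, old_1) + kl(new_2, old_2)

# A correlated joint policy with the same marginals as joint_new
# (rows sum to new_1, columns sum to new_2), also a made-up example.
corr_new = np.array([[0.5, 0.1],
                     [0.2, 0.2]])
kl_corr = kl(corr_new, joint_old)

print("factorized joint KL :", kl_joint)
print("sum of local KLs    :", kl_local_sum)
print("correlated joint KL :", kl_corr)
```

In the correlated case the excess over the local sum is the mutual information between the two agents' actions under the new joint policy, which is the kind of dependence a purely factorized trust region cannot see.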
