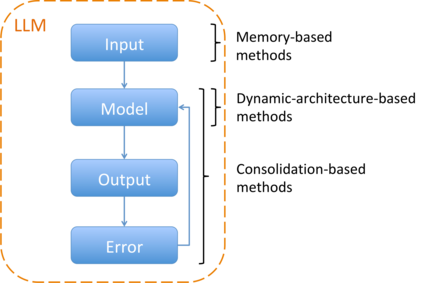

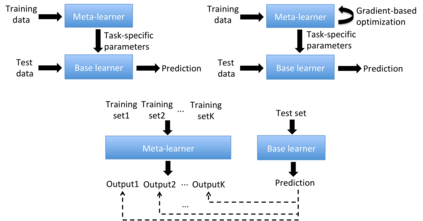

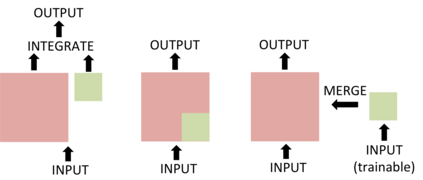

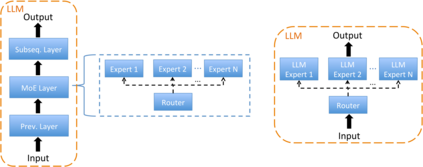

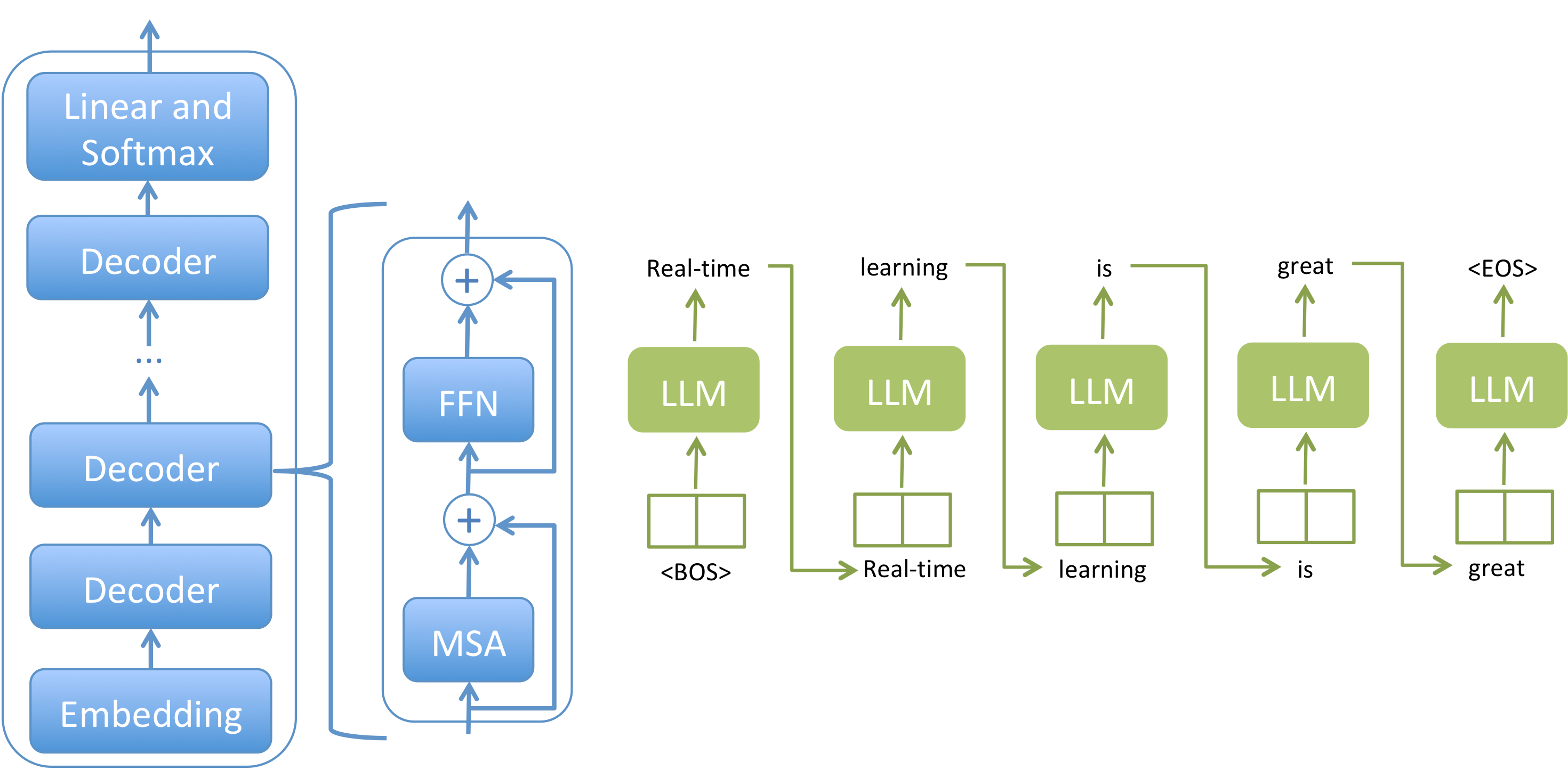

Incremental learning is the ability of a system to acquire knowledge over time, enabling it to adapt and generalize to novel tasks. It is a critical capability for intelligent, real-world systems, especially when data changes frequently or is limited. This review provides a comprehensive analysis of incremental learning in large language models (LLMs). It synthesizes state-of-the-art incremental learning paradigms, including continual learning, meta-learning, parameter-efficient learning, and mixture-of-experts learning. We demonstrate their utility for incremental learning by describing specific achievements in each of these areas and the factors critical to them. An important finding is that many of these approaches do not update the core model, and none of them updates incrementally in real time. The paper also highlights current problems and challenges for future research. By consolidating the latest relevant research, this review offers a comprehensive understanding of incremental learning and its implications for designing and developing LLM-based learning systems.
