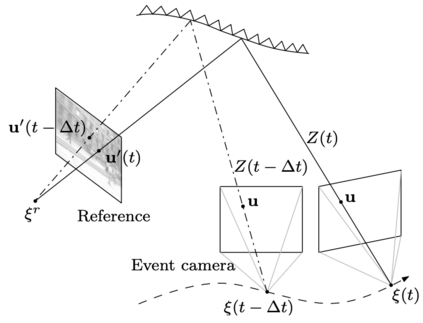

In recent decades, visual simultaneous localization and mapping (vSLAM) has attracted significant interest in both academia and industry. It estimates camera motion and reconstructs the environment concurrently using visual sensors mounted on a moving robot. However, conventional cameras are limited by their hardware, suffering from motion blur and low dynamic range, which can degrade performance in challenging scenarios such as high-speed motion and high-dynamic-range illumination. Recent studies have demonstrated that event cameras, a new type of bio-inspired visual sensor, offer advantages such as high temporal resolution, high dynamic range, low power consumption, and low latency. This paper presents a timely and comprehensive review of event-based vSLAM algorithms that exploit the benefits of asynchronous and irregular event streams for localization and mapping tasks. The review covers the working principle of event cameras and the various event representations used to preprocess event data. It also categorizes event-based vSLAM methods into four main categories: feature-based, direct, motion-compensation, and deep learning methods, with detailed discussions and practical guidance for each approach. Furthermore, the paper evaluates state-of-the-art methods on various benchmarks, highlighting current challenges and future opportunities in this emerging research area. A public repository will be maintained to keep track of the rapid developments in this field at https://github.com/kun150kun/ESLAM-survey.
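The working principle and the preprocessing step mentioned above can be illustrated with a minimal sketch. It assumes an idealized pixel that fires an event whenever its log intensity changes by at least a contrast threshold `C`, ignoring sensor noise and refractory periods. It also shows two simple, commonly used representations: a signed-polarity event frame and an exponentially decayed time surface. The names `Event`, `generate_events`, `event_frame`, `time_surface`, `C`, `t_ref`, and `tau` are hypothetical choices for illustration, not code from the survey or its repository.

```python
import math
from dataclasses import dataclass


@dataclass
class Event:
    x: int      # pixel column
    y: int      # pixel row
    t: float    # timestamp in seconds
    p: int      # polarity: +1 brightness increase, -1 decrease


def generate_events(x, y, samples, C=0.2):
    """Idealized (assumed) event generation for a single pixel.

    `samples` is a list of (t, intensity) pairs with intensity > 0. An event
    fires each time the log intensity moves by at least the contrast
    threshold C away from the reference level set at the last event.
    Noise and refractory period are deliberately ignored.
    """
    events = []
    ref = math.log(samples[0][1])
    for t, intensity in samples[1:]:
        log_i = math.log(intensity)
        while log_i - ref >= C:      # brightness went up by >= C
            ref += C
            events.append(Event(x, y, t, +1))
        while ref - log_i >= C:      # brightness went down by >= C
            ref -= C
            events.append(Event(x, y, t, -1))
    return events


def event_frame(events, width, height):
    """Accumulate signed polarities per pixel into a 2D 'event frame'."""
    frame = [[0] * width for _ in range(height)]
    for e in events:
        frame[e.y][e.x] += e.p
    return frame


def time_surface(events, width, height, t_ref, tau):
    """Exponentially decayed map of the latest event time at each pixel.

    Pixels with a recent event (close to t_ref) get values near 1; pixels
    with no event up to t_ref stay at 0.
    """
    latest = {}
    for e in events:
        if e.t <= t_ref:
            key = (e.x, e.y)
            latest[key] = max(latest.get(key, e.t), e.t)
    surface = [[0.0] * width for _ in range(height)]
    for (x, y), t in latest.items():
        surface[y][x] = math.exp(-(t_ref - t) / tau)
    return surface
```

For example, a pixel whose brightness rises to `math.e` and then returns to 1.0 with `C=0.5` produces two positive events followed by two negative ones, so its event-frame value sums to 0. A time surface, by contrast, retains *when* each pixel last fired. Surveyed methods use richer variants (e.g. voxel grids or learned representations), so treat these two as the simplest baselines only.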
