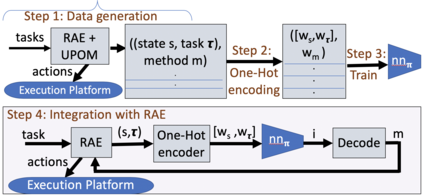

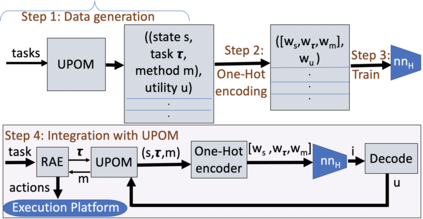

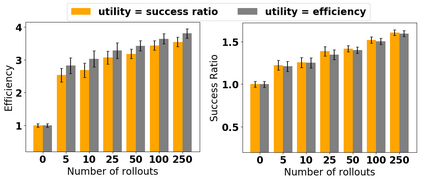

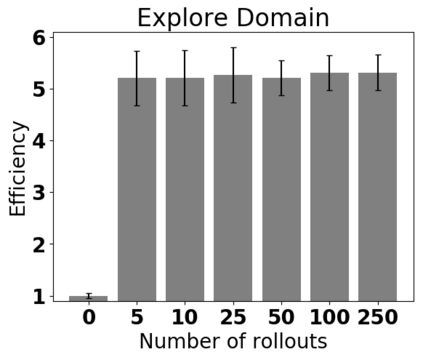

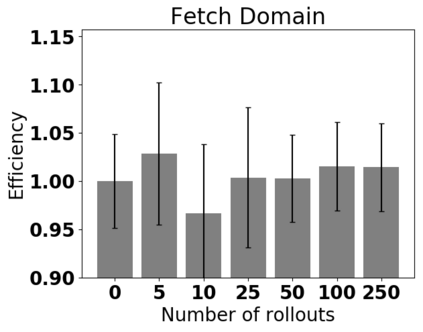

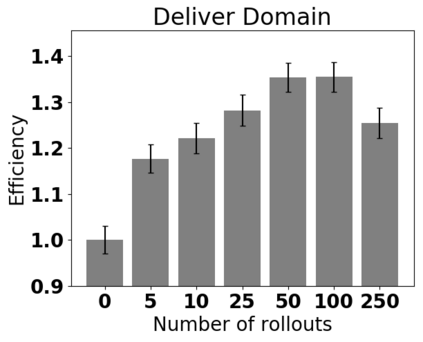

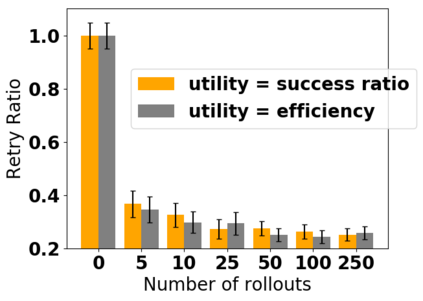

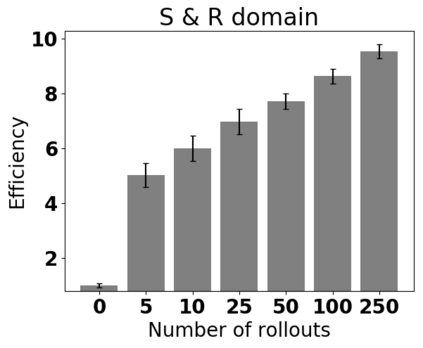

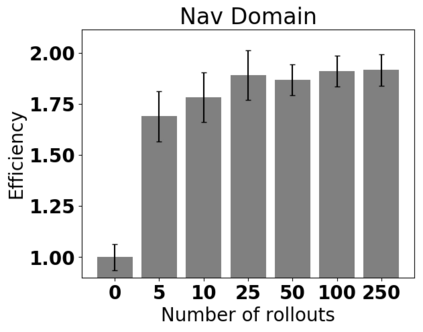

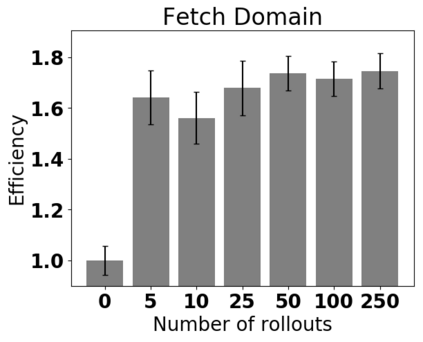

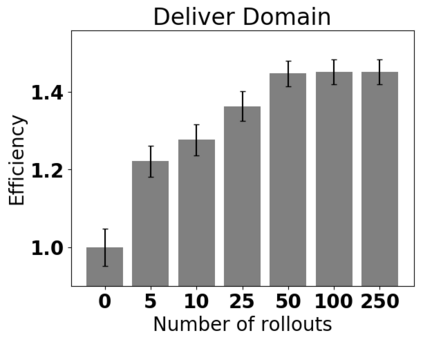

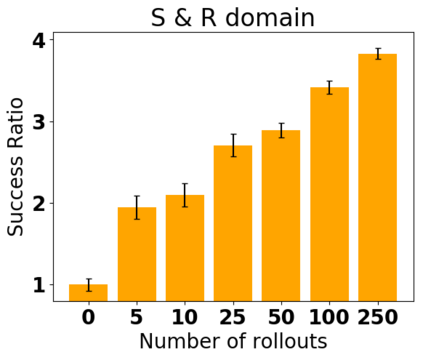

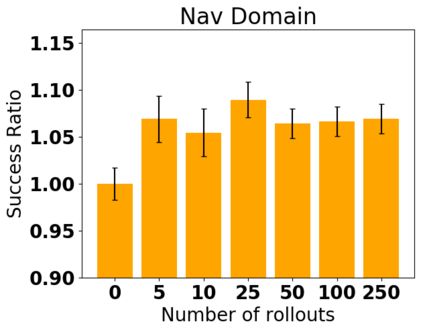

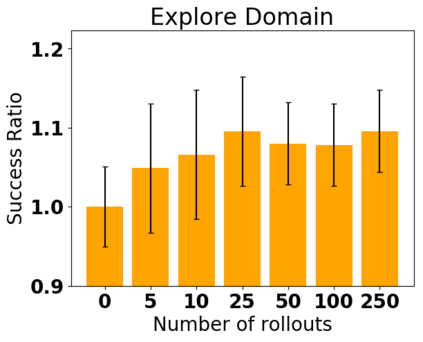

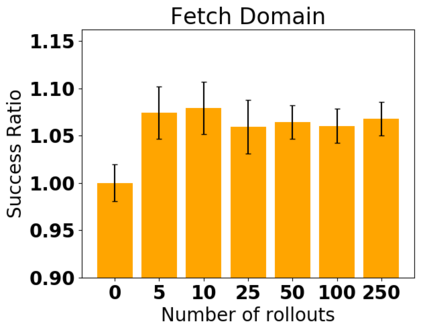

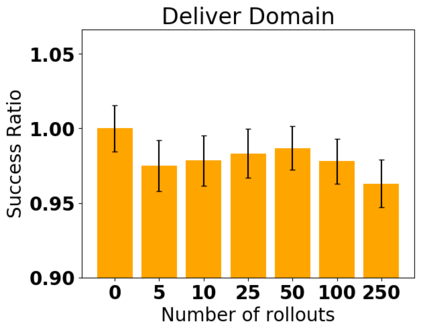

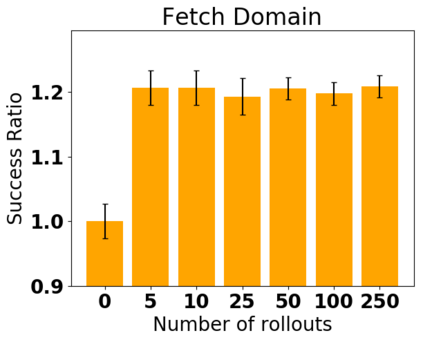

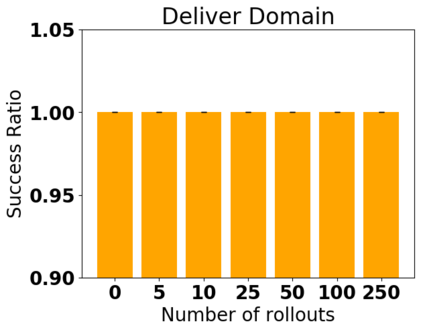

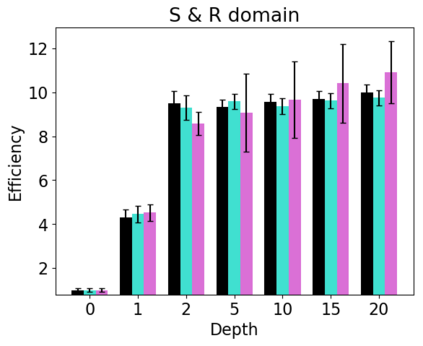

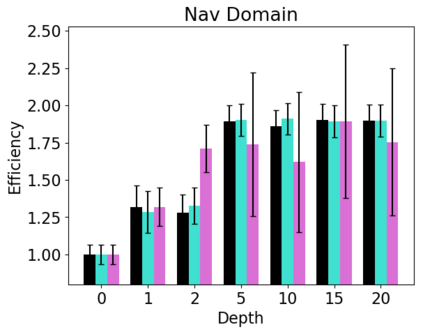

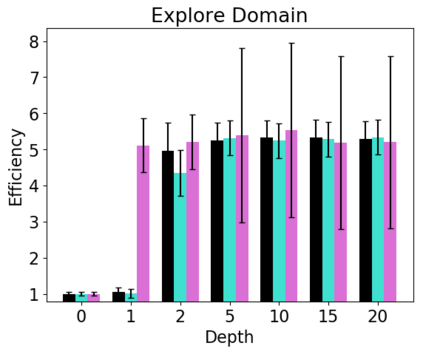

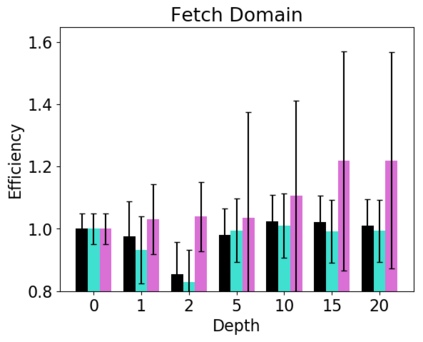

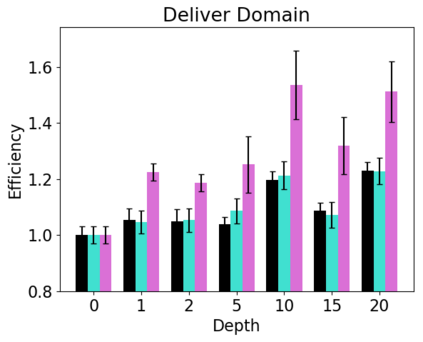

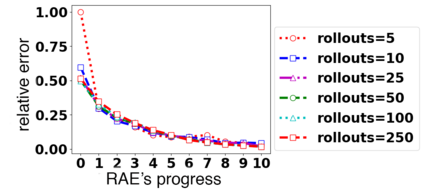

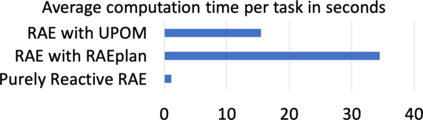

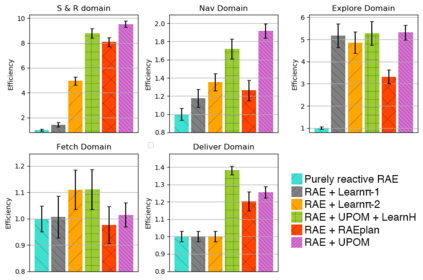

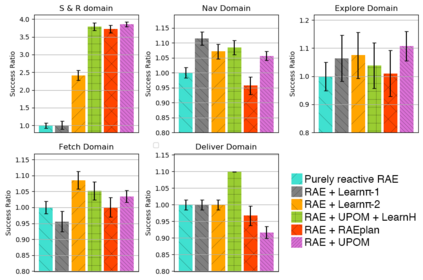

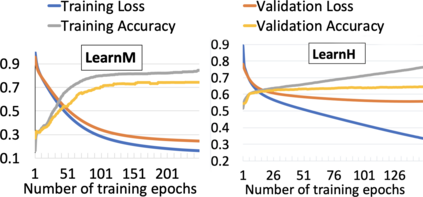

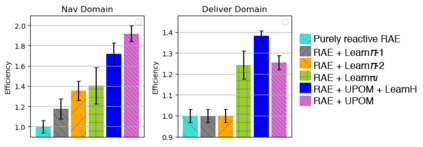

In AI research, synthesizing a plan of action has typically relied on descriptive models of actions, which abstractly specify what might happen as a result of an action and are tailored for efficiently computing state transitions. However, executing the planned actions has required operational models, in which rich computational control structures and closed-loop online decision-making specify how to perform an action in a complex execution context, react to events, and adapt to an unfolding situation. Deliberative actors, which integrate acting and planning, have typically needed to use both kinds of models together. This causes problems when developing the different models, verifying their consistency, and smoothly interleaving acting and planning. As an alternative, we define and implement an integrated acting-and-planning system in which both planning and acting use the same operational models. These rely on hierarchical task-oriented refinement methods offering rich control structures. The acting component, called the Reactive Acting Engine (RAE), is inspired by the well-known PRS system. At each decision step, RAE can get advice from a planner for a near-optimal choice with respect to a utility function. The anytime planner uses a UCT-like Monte Carlo Tree Search procedure called UPOM (UCT Procedure for Operational Models), whose rollouts are simulations of the actor's operational models. We also present learning strategies for use with RAE and UPOM that acquire, from online acting experiences and/or simulated planning results, a mapping from decision contexts to method instances as well as a heuristic function to guide UPOM. We demonstrate the asymptotic convergence of UPOM towards optimal methods in static domains, and show experimentally that UPOM and the learning strategies significantly improve acting efficiency and robustness.
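
In its simplest form, RAE asking the planner which refinement method to use for a task can be pictured as a bandit problem over the candidate methods, where each rollout simulates one method and reports its utility. The following is a minimal sketch of plain UCB1 at a single decision node, assuming a hypothetical `simulate(m)` callback; it is not UPOM itself, which applies UCT recursively through the refinement tree as the simulated method unfolds. The method names, utility values, and exploration constant `c` are illustrative assumptions.

```python
import math
import random
from typing import Callable, Dict, List


def ucb1_pick(counts: Dict[str, int], totals: Dict[str, float], c: float) -> str:
    """Return the method with the highest UCB1 score (mean utility + exploration bonus)."""
    # Simulate every candidate method once before trusting any average.
    for m, n in counts.items():
        if n == 0:
            return m
    rollouts_so_far = sum(counts.values())
    return max(
        counts,
        key=lambda m: totals[m] / counts[m]
        + c * math.sqrt(math.log(rollouts_so_far) / counts[m]),
    )


def choose_method(
    methods: List[str],
    simulate: Callable[[str], float],
    rollouts: int = 1000,
    c: float = 1.4,  # assumed exploration constant (roughly sqrt(2))
) -> str:
    """Spend `rollouts` simulations on the candidates, then return the best mean.

    `simulate(m)` is a hypothetical stand-in for one simulated execution of
    refinement method `m`; it returns the utility observed in that rollout.
    """
    counts = {m: 0 for m in methods}
    totals = {m: 0.0 for m in methods}
    for _ in range(rollouts):
        m = ucb1_pick(counts, totals, c)
        totals[m] += simulate(m)
        counts[m] += 1
    # Only methods that were actually simulated have a mean utility.
    tried = [m for m in methods if counts[m] > 0]
    return max(tried, key=lambda m: totals[m] / counts[m])


if __name__ == "__main__":
    # Hypothetical task with two candidate refinement methods and noisy utilities.
    random.seed(0)
    expected_utility = {"m_direct": 0.4, "m_via_dock": 0.7}
    best = choose_method(
        list(expected_utility),
        lambda m: expected_utility[m] + random.gauss(0.0, 0.1),
        rollouts=500,
    )
    print("recommended method:", best)
```

Because the loop can stop after any number of rollouts, the sketch is anytime in the same spirit as the planner described above: if RAE must act early, it receives the best mean found so far.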
