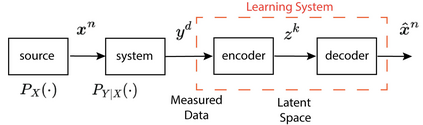

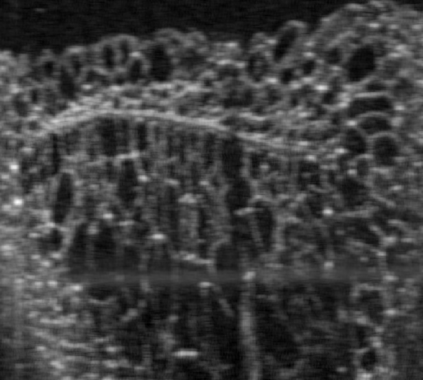

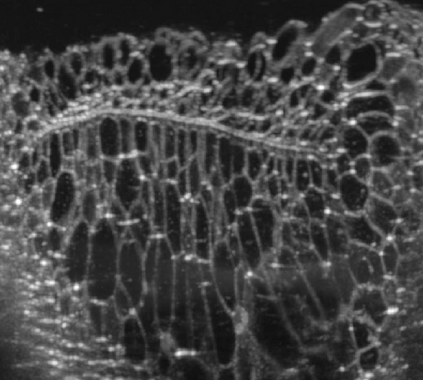

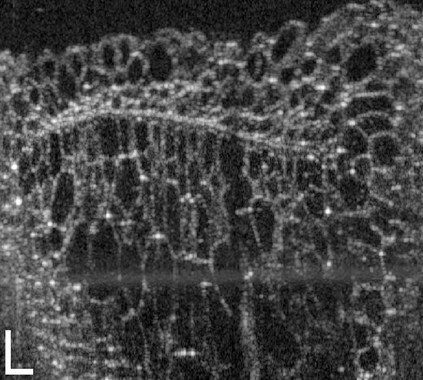

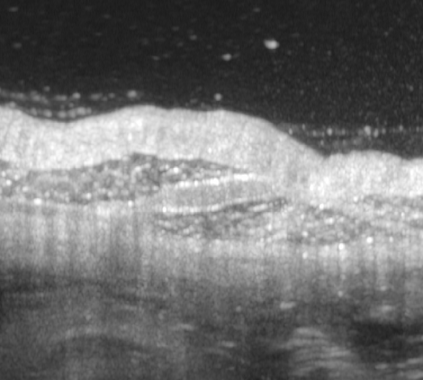

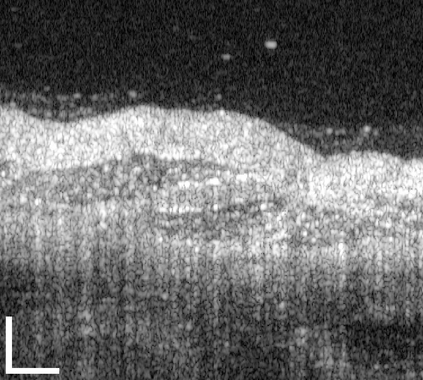

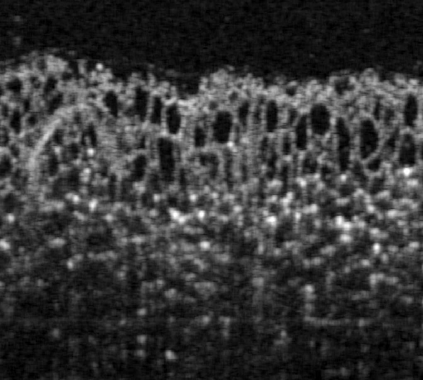

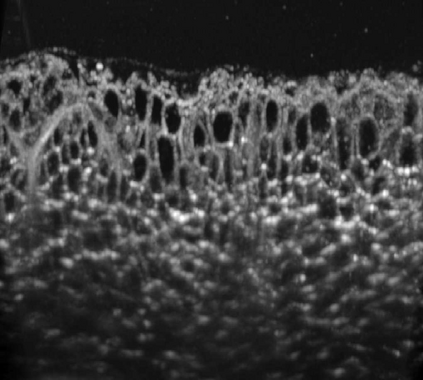

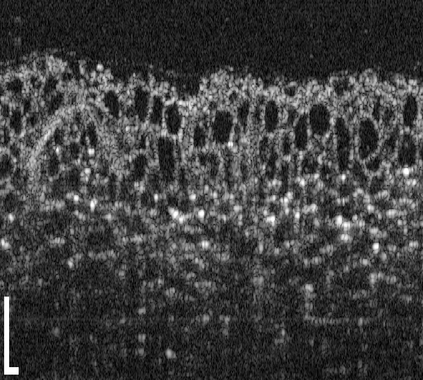

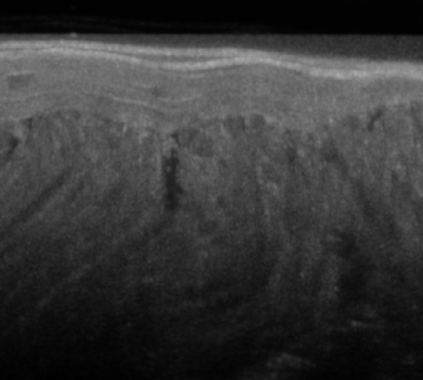

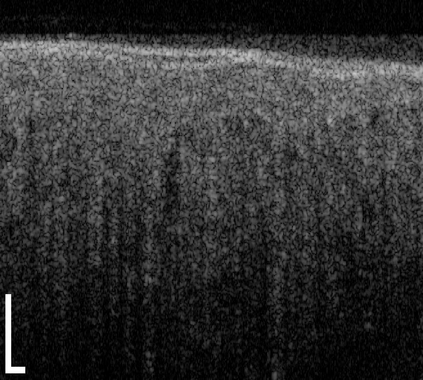

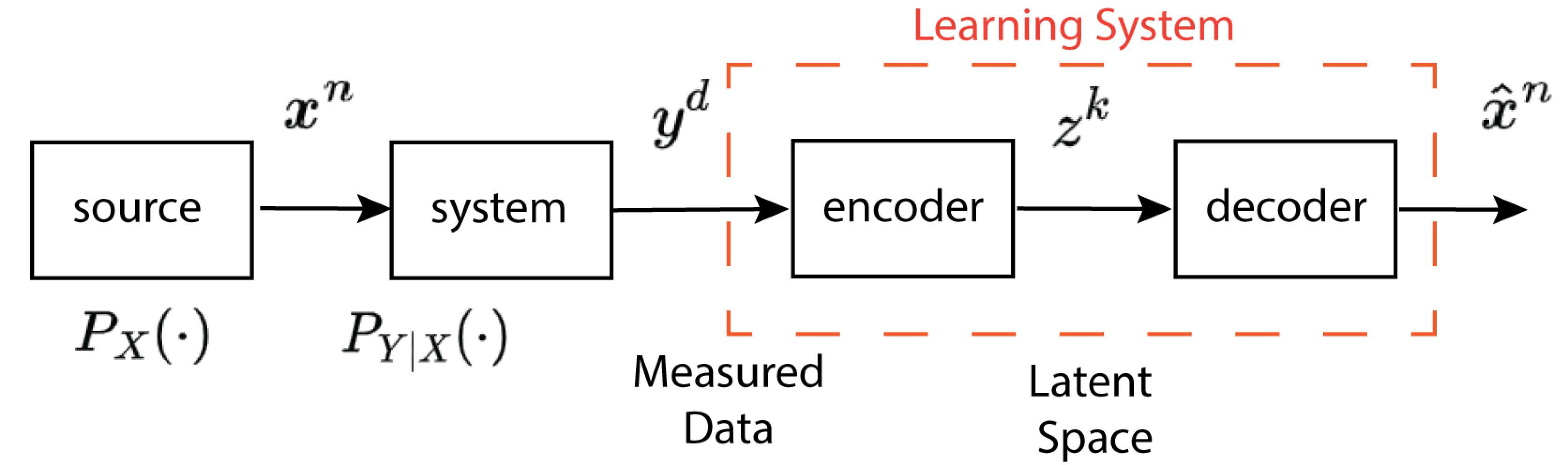

The statistical supervised learning framework assumes an input-output set with a joint probability distribution that is reliably represented by the training dataset. The learner is then required to output a prediction rule learned from the training dataset's input-output pairs. In this work, we provide insights into the asymptotic equipartition property (AEP) \citep{Shannon:1948} in the context of machine learning, and illuminate some of its potential ramifications for few-shot learning. We provide theoretical guarantees for reliable learning under the information-theoretic AEP, and for the generalization error with respect to the sample size. We then focus on a highly efficient recurrent neural network (RNN) framework and propose a reduced-entropy algorithm for few-shot learning. We also offer a mathematical intuition for the RNN as an approximation of a sparse coding solver. We verify the applicability, robustness, and computational efficiency of the proposed approach on image deblurring and optical coherence tomography (OCT) speckle suppression. Our experimental results demonstrate significant potential for improving the sample efficiency, generalization, and time complexity of learning models, which can therefore be leveraged in practical real-time applications.
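For readers unfamiliar with the AEP, the sketch below illustrates the textbook statement for an i.i.d. discrete source. For $X_1,\dots,X_n \sim p$ i.i.d., $-\frac{1}{n}\log_2 p(X_1,\dots,X_n) \to H(X)$ in probability. As a consequence, the typical set $A_\epsilon^{(n)}$ (sequences whose per-symbol log-probability is within $\epsilon$ of $H(X)$) captures almost all of the probability mass as $n$ grows. This is only a minimal illustration under stated assumptions. The three-symbol distribution, the tolerance `eps`, and the helper names (`entropy_bits`, `sample_log_prob_rate`, `typical_fraction`) are hypothetical choices made for this example and are not the paper's setup or its reduced-entropy algorithm.

```python
import math
import random


def entropy_bits(probs):
    """Shannon entropy H(X) in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def sample_log_prob_rate(probs, n, rng):
    """Draw X_1..X_n i.i.d. from probs and return -(1/n) log2 p(X_1..X_n)."""
    symbols = list(range(len(probs)))
    seq = rng.choices(symbols, weights=probs, k=n)
    # For an i.i.d. source the joint probability factorizes,
    # so the joint log-probability is a sum of per-symbol terms.
    log_p = sum(math.log2(probs[s]) for s in seq)
    return -log_p / n


def typical_fraction(probs, n, eps, trials, rng):
    """Estimate P(X^n in A_eps^(n)) by Monte Carlo over `trials` sequences."""
    h = entropy_bits(probs)
    hits = sum(
        abs(sample_log_prob_rate(probs, n, rng) - h) <= eps for _ in range(trials)
    )
    return hits / trials


if __name__ == "__main__":
    rng = random.Random(0)
    probs = [0.7, 0.2, 0.1]  # hypothetical source distribution
    h = entropy_bits(probs)
    print(f"H(X) = {h:.4f} bits")
    for n in (10, 100, 1000, 5000):
        rate = sample_log_prob_rate(probs, n, rng)
        frac = typical_fraction(probs, n, eps=0.05, trials=200, rng=rng)
        print(f"n={n:>5}  -(1/n)log2 p = {rate:.4f}  P(typical) ~ {frac:.2f}")
```

As $n$ increases, the printed per-symbol log-probability concentrates around $H(X)$ and the estimated probability of the typical set approaches 1, even though that set contains only about $2^{nH(X)}$ of the possible sequences.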

翻译:由统计监督的学习框架假定了一个具有共同概率分布的输入-输出数据集,其共同概率分布由培训数据集可靠地体现。 然后,学习者必须提出从培训数据集的输入-输出对配对中学习的预测规则。 在这项工作中,我们从机器学习的角度对无症状设备属性(AEP)\citep{Shannon:1948}提供了有意义的洞察力。我们核查了拟议方法的实用性、稳健性和计算效率,并用图像分解和光学一致性进行摄影(OCT)分解。我们的实验结果显示,在提高学习模型的实际效率、一般化和复杂性方面,我们具有巨大的潜力。