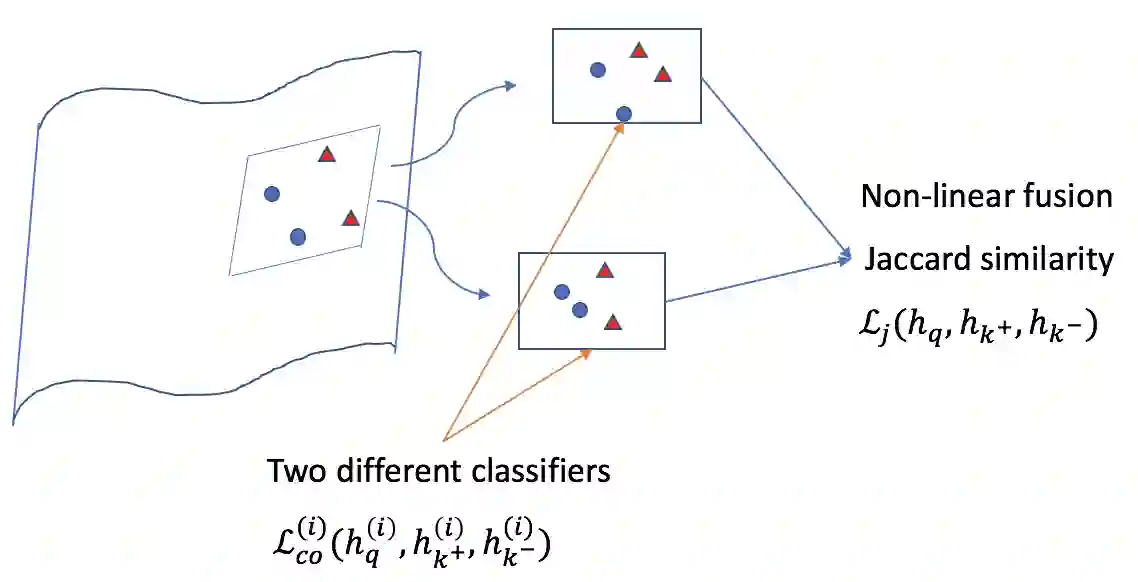

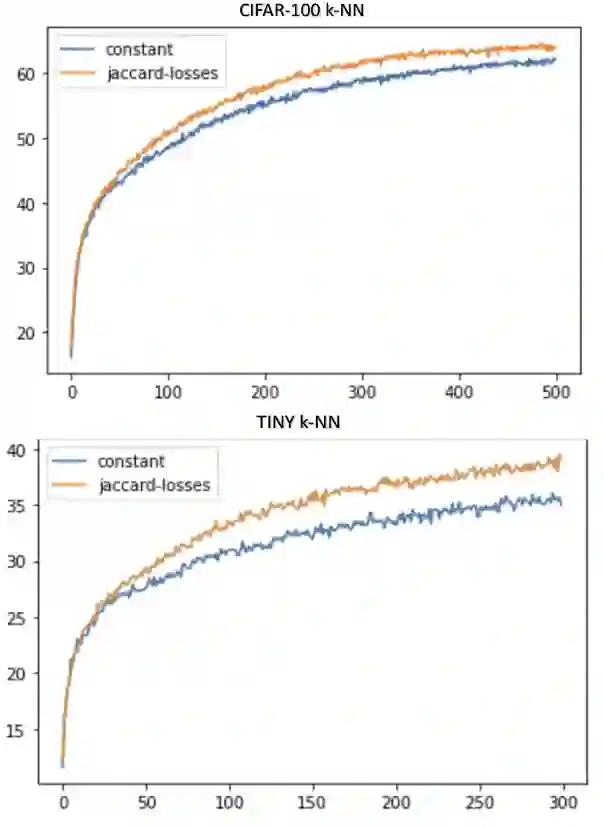

This paper introduces a new statistical perspective on self-supervised contrastive learning: we use the Jaccard similarity as a measure-based metric that brings non-linear features into the contrastive loss. Specifically, the proposed metric may be interpreted as a dependence measure between two adapted projections learned from the latent representations. This contrasts with the cosine similarity measure used in the conventional contrastive learning model, which accounts for correlation information. To the best of our knowledge, the non-linearly fused information embedded in the Jaccard similarity is novel to self-supervised learning, and it yields promising results. We compare the proposed approach with two state-of-the-art self-supervised contrastive learning methods on three image datasets. The results demonstrate not only its applicability to current ML problems, but also its improved performance and training efficiency.
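To make the contrast between the two similarity measures concrete, the sketch below compares cosine similarity with one common generalization of the Jaccard index to non-negative real vectors (the weighted, or Ruzicka, form: sum of element-wise minima over sum of element-wise maxima). The abstract does not state the exact formulation used in the paper, so this particular form, the function names, and the toy vectors are all assumptions chosen for illustration only.

```python
import numpy as np


def cosine_similarity(u, v, eps=1e-12):
    """Cosine of the angle between u and v (invariant to rescaling either vector)."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v) + eps))


def weighted_jaccard_similarity(p, q, eps=1e-12):
    """Weighted (Ruzicka) Jaccard: sum(min(p, q)) / sum(max(p, q)).

    Assumption: p and q are non-negative, e.g. projections passed through
    a softmax or ReLU. This is an illustrative stand-in, not necessarily
    the metric defined in the paper.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    if np.any(p < 0) or np.any(q < 0):
        raise ValueError("weighted Jaccard requires non-negative inputs")
    return float(np.minimum(p, q).sum() / (np.maximum(p, q).sum() + eps))


# Hypothetical toy projections: q is p scaled by 2.
p = np.array([1.0, 2.0, 3.0])
q = 2.0 * p

cos_pq = cosine_similarity(p, q)            # close to 1: same direction
jac_pq = weighted_jaccard_similarity(p, q)  # close to 0.5: overlap is half of the union
```

In this toy case the cosine similarity cannot distinguish `p` from `2 * p`, while the min/max ratio can, which gives a small illustration of how an overlap-based measure responds to the inputs non-linearly.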
