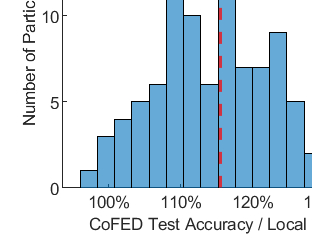

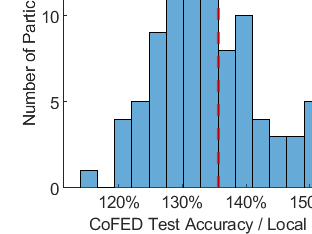

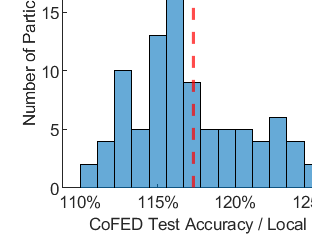

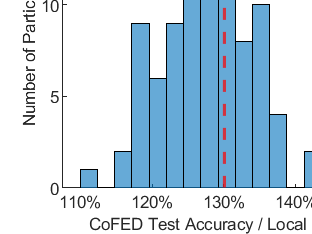

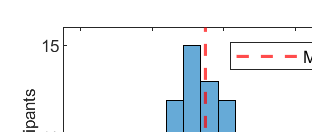

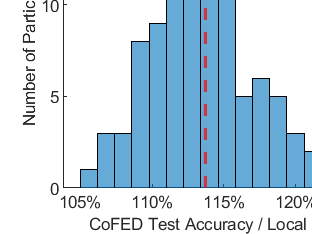

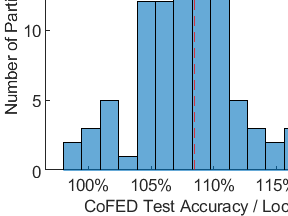

Federated Learning (FL) is a machine learning technique that enables participants to collaboratively train high-quality models without exchanging their private data. In cross-silo FL settings, the participants are independent organizations with different task requirements. They are concerned not only with data privacy but also, for intellectual-property reasons, with independently training their own unique models. Most existing FL schemes cannot handle such scenarios. In this paper, we propose CoFED, a communication-efficient FL scheme based on pseudo-labeling unlabeled data in a manner similar to co-training. To the best of our knowledge, it is the first FL scheme that is simultaneously compatible with heterogeneous tasks, heterogeneous models, and heterogeneous training algorithms. Experimental results show that CoFED achieves better performance at a lower communication cost. In particular, in non-IID settings with heterogeneous models, the proposed method improves performance by 35%.
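The abstract names the mechanism but does not spell it out. The following Python sketch illustrates, under stated assumptions, why exchanging pseudo-labels on shared unlabeled data can leave each participant free to choose its own model and training algorithm: only predicted labels cross organizational boundaries, never weights or gradients, which also keeps messages small. It assumes that every participant uploads one predicted label (or `None` to abstain) per sample of a common unlabeled dataset and that the labels are combined by majority vote. The functions `aggregate_pseudo_labels` and `select_for_participant`, the `min_votes` threshold, and the tie-breaking rule are all hypothetical and are not taken from the CoFED paper.

```python
from collections import Counter
from typing import List, Optional, Set, Tuple

# Hypothetical format: one participant's predictions on the shared unlabeled
# set, one class label per sample, or None if the participant abstains.
Predictions = List[Optional[str]]


def aggregate_pseudo_labels(
    all_predictions: List[Predictions], min_votes: int = 2
) -> List[Optional[str]]:
    """Majority-vote each sample's label across participants (assumed rule).

    A sample gets None if no label reaches min_votes or if the top labels tie.
    """
    num_samples = len(all_predictions[0])
    pseudo_labels: List[Optional[str]] = []
    for i in range(num_samples):
        # Count only non-abstaining votes for sample i.
        votes = Counter(p[i] for p in all_predictions if p[i] is not None)
        if not votes:
            pseudo_labels.append(None)
            continue
        top = votes.most_common(2)
        label, count = top[0]
        tied = len(top) > 1 and top[1][1] == count
        pseudo_labels.append(label if count >= min_votes and not tied else None)
    return pseudo_labels


def select_for_participant(
    pseudo_labels: List[Optional[str]], task_classes: Set[str]
) -> List[Tuple[int, str]]:
    """Keep (index, label) pairs whose label belongs to this participant's task."""
    return [
        (i, lbl)
        for i, lbl in enumerate(pseudo_labels)
        if lbl is not None and lbl in task_classes
    ]


# Usage: three participants with private (possibly different) models
# label four shared unlabeled samples.
preds = [
    ["cat", "dog", "car", None],
    ["cat", "dog", "truck", "ship"],
    ["cat", "cat", "car", None],
]
labels = aggregate_pseudo_labels(preds, min_votes=2)
print(labels)  # ['cat', 'dog', 'car', None]
print(select_for_participant(labels, {"cat", "car"}))  # [(0, 'cat'), (2, 'car')]
```

Each participant would then add its selected pseudo-labeled samples to its local training data and retrain with whatever model and algorithm it prefers; how CoFED actually filters and uses the pseudo-labels should be checked against the paper itself.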

翻译:联邦学习(FL)是一种机器学习技术,使参与者能够在不交换私人数据的情况下合作培训高质量的模型。跨筒仓FL环境的参与者是独立组织,具有不同的任务需求,他们不仅关心数据隐私,而且关心由于知识产权而独立培训他们独特的模型。大多数现有的FL计划无法应对上述情景。在本文中,我们提议了一个通信高效FL计划,即CoFED,基于假标签的无标签数据,如联合培训。根据我们的知识,这是第一个与多种任务、多种模型和多种培训算法同时兼容的FL计划。实验结果表明,FED在通信成本较低的情况下取得了更好的业绩。特别是对于非IID环境和多种模式,拟议方法提高了35%的绩效。