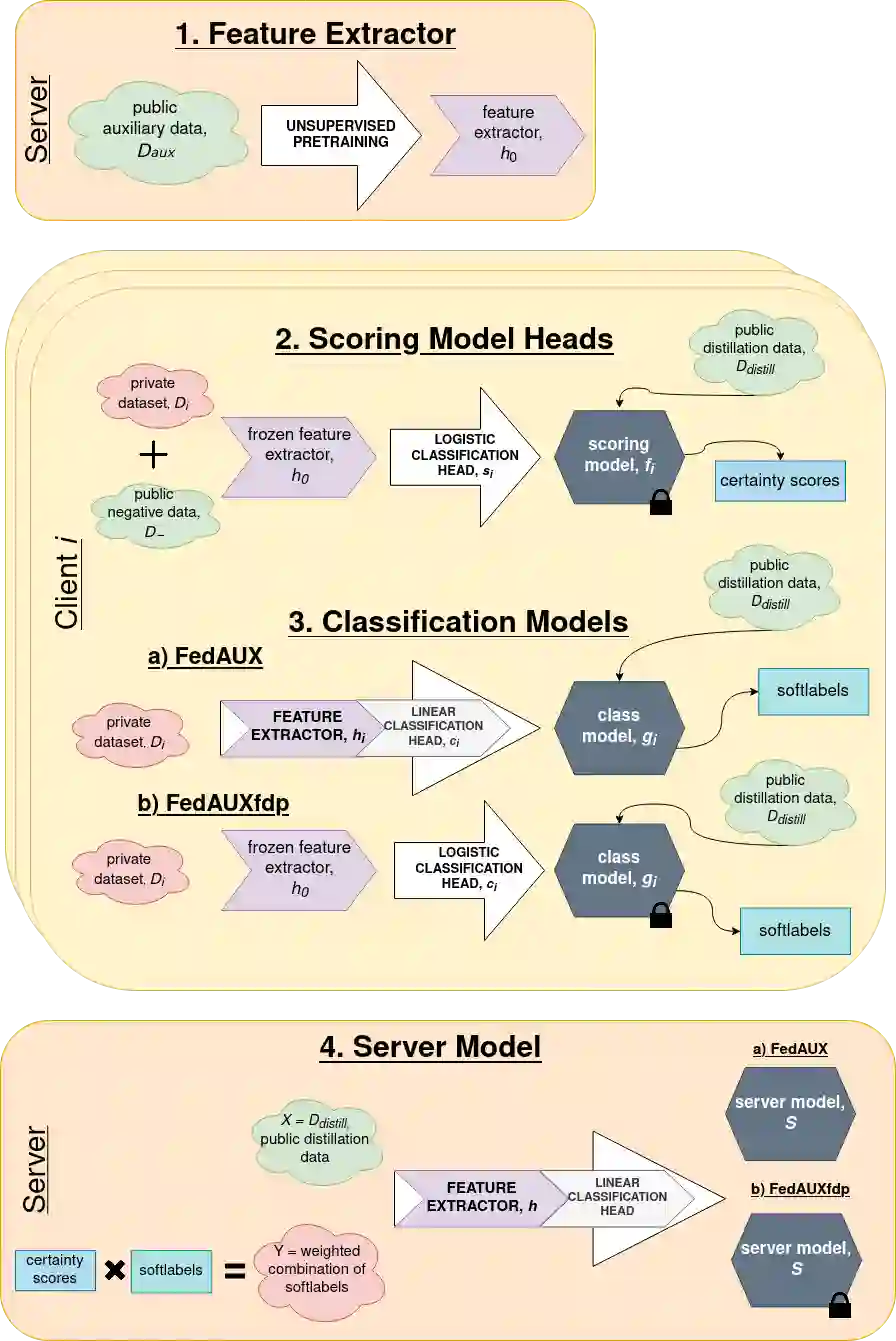

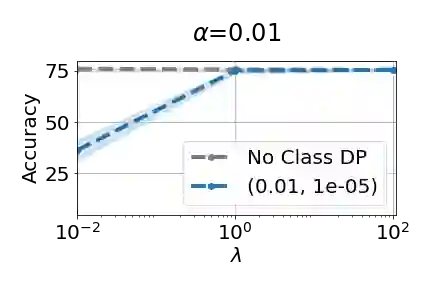

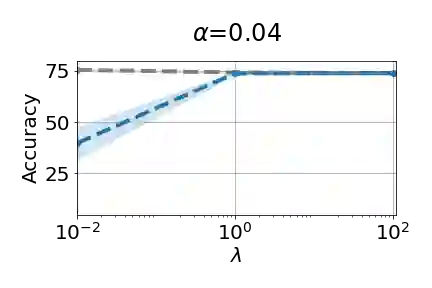

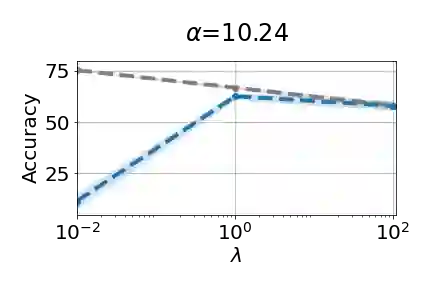

Federated learning degrades when local datasets are non-iid, i.e., when the clients' data distributions are heterogeneous. One promising approach to this challenge is the recently proposed FedAUX, an augmentation of federated distillation that yields robust results even on highly heterogeneous client data. FedAUX is only partially $(\epsilon, \delta)$-differentially private: the clients' private data is protected in only part of the training it takes part in. This work contributes a fully differentially private modification, termed FedAUXfdp. We further contribute an upper bound on the $\ell_2$-sensitivity of regularized multinomial logistic regression. In experiments with deep networks on large-scale image datasets, FedAUXfdp with strong differential privacy guarantees performs significantly better than other equally privatized state-of-the-art (SOTA) baselines on non-iid client data in just a single communication round. Full privatization of the modified method results in a negligible reduction in accuracy at all levels of data heterogeneity.
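
To illustrate why an $l_2$-sensitivity bound matters for $(\epsilon, \delta)$-differential privacy, the following is a minimal sketch of the classic Gaussian mechanism, which adds noise proportional to the sensitivity of the released quantity. It is not the FedAUXfdp procedure itself: the function names `gaussian_sigma` and `privatize` are hypothetical, the sensitivity is assumed to be supplied by the caller (for example, from a bound such as the one contributed here), and the noise calibration used is the standard one that holds for $0 < \epsilon < 1$.

```python
import math
import random


def gaussian_sigma(l2_sensitivity, epsilon, delta):
    """Noise scale of the classic Gaussian mechanism.

    Uses sigma = sensitivity * sqrt(2 * ln(1.25 / delta)) / epsilon,
    a calibration that is valid for 0 < epsilon < 1.
    """
    if not 0.0 < epsilon < 1.0:
        raise ValueError("this calibration assumes 0 < epsilon < 1")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    if l2_sensitivity < 0.0:
        raise ValueError("sensitivity must be non-negative")
    return l2_sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def privatize(weights, l2_sensitivity, epsilon, delta, rng=None):
    """Return a noisy copy of `weights` (a flat list of floats).

    `l2_sensitivity` is an assumption supplied by the caller: an upper
    bound on how far the weight vector can move in l2 norm when a single
    training example changes.
    """
    rng = rng or random.Random()
    sigma = gaussian_sigma(l2_sensitivity, epsilon, delta)
    # Independent Gaussian noise is added to every coordinate.
    return [w + rng.gauss(0.0, sigma) for w in weights]


if __name__ == "__main__":
    # Hypothetical numbers, chosen only for illustration.
    model_weights = [0.2, -1.3, 0.7]
    noisy = privatize(model_weights, l2_sensitivity=0.01,
                      epsilon=0.5, delta=1e-5, rng=random.Random(0))
    print(noisy)
```

The noise scale grows linearly with the sensitivity, so a tighter sensitivity bound translates directly into less noise, and therefore less accuracy loss, at the same privacy level.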
