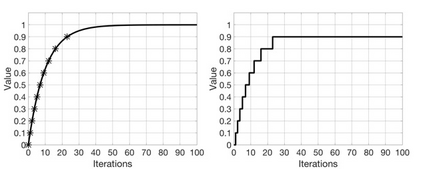

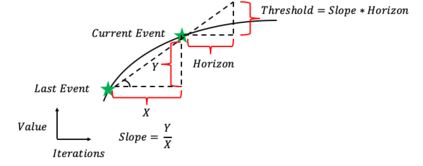

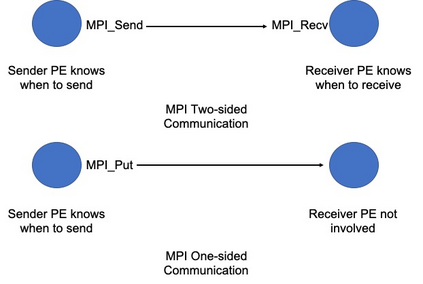

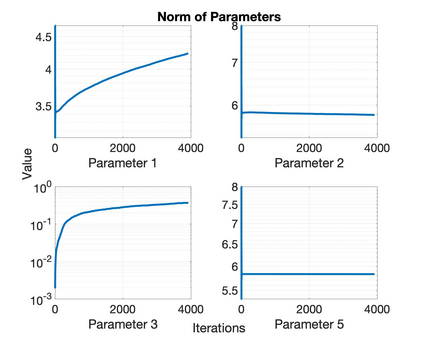

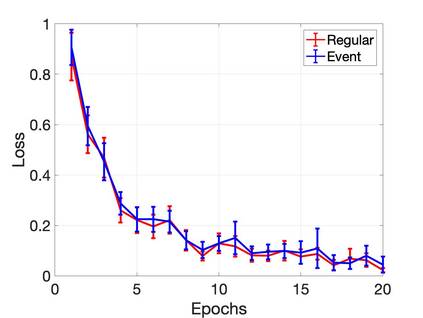

Communication in parallel systems imposes significant overhead, which is often a bottleneck in parallel machine learning. To relieve some of this overhead, we present EventGraD, an algorithm with event-triggered communication for stochastic gradient descent in parallel machine learning. The main idea is to relax the requirement, common to standard parallel implementations of stochastic gradient descent, of communicating at every iteration, and instead to communicate only when necessary at certain iterations. We provide a theoretical analysis of the convergence of the proposed algorithm. We also implement the algorithm for data-parallel training of a popular residual neural network on the CIFAR-10 dataset and show that EventGraD can reduce the communication load by up to 60% while retaining the same level of accuracy.
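To make the idea of event-triggered communication concrete, the following is a minimal toy sketch, not the EventGraD implementation. It assumes a ring of workers, a scalar parameter, a quadratic local loss per worker, and a fixed trigger threshold on parameter drift; the function `run`, its arguments, and the triggering rule are hypothetical choices made only for illustration. Setting `threshold=0.0` recovers communication at every iteration.

```python
import random


def run(num_workers=4, steps=300, lr=0.05, threshold=0.0, seed=0):
    """Simulate decentralized SGD on a ring; return (final params, messages sent).

    Assumption: each worker i minimizes the toy loss 0.5 * (x - target_i)**2,
    so the consensus optimum is the mean of the targets.
    """
    rng = random.Random(seed)
    targets = [float(i) for i in range(num_workers)]  # hypothetical local data
    params = [0.0] * num_workers   # current local parameter of each worker
    last_sent = list(params)       # value each worker most recently broadcast
    messages = 0

    for _ in range(steps):
        # Event trigger (assumed rule): broadcast only when the parameter has
        # drifted at least `threshold` away from the last broadcast value.
        for i in range(num_workers):
            if abs(params[i] - last_sent[i]) >= threshold:
                last_sent[i] = params[i]
                messages += 1

        new_params = []
        for i in range(num_workers):
            left = last_sent[(i - 1) % num_workers]
            right = last_sent[(i + 1) % num_workers]
            # Mix the local value with the (possibly stale) neighbor values.
            mixed = (params[i] + left + right) / 3.0
            # Noisy gradient of the local quadratic loss.
            grad = (params[i] - targets[i]) + rng.gauss(0.0, 0.1)
            new_params.append(mixed - lr * grad)
        params = new_params

    return params, messages
```

Calling `run(threshold=0.0)` and `run(threshold=0.01)` and comparing the returned message counts shows the trade-off in this toy setting: a positive threshold sends fewer messages, at the cost of neighbors mixing with slightly stale values. The savings observed here say nothing about the 60% figure above, which refers to the CIFAR-10 experiments.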
