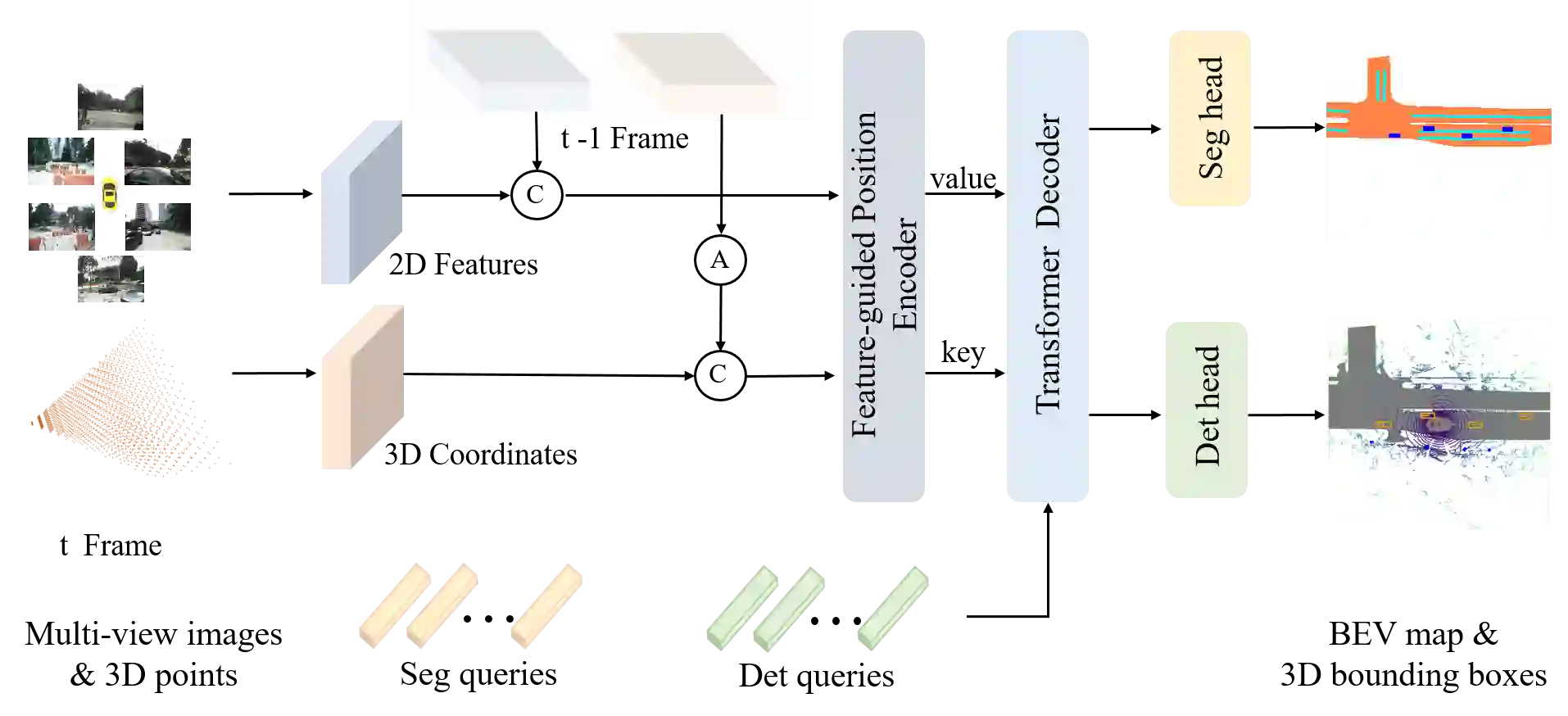

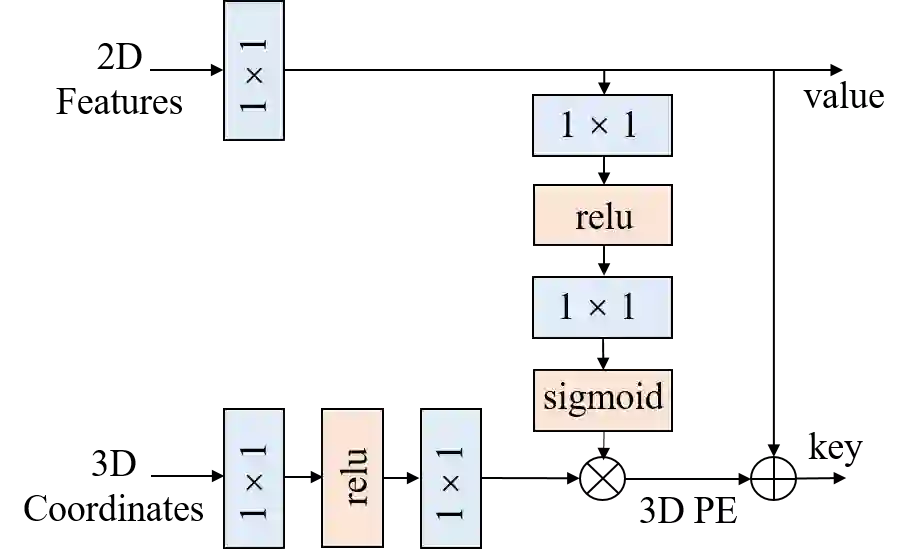

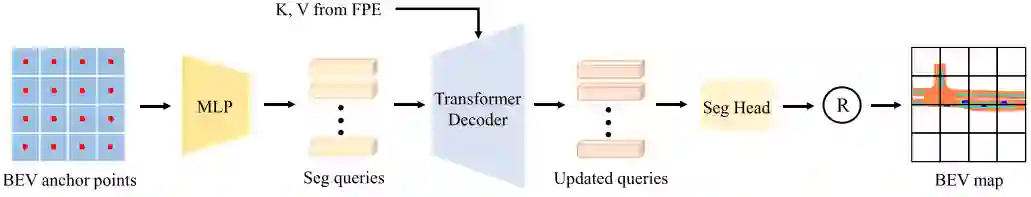

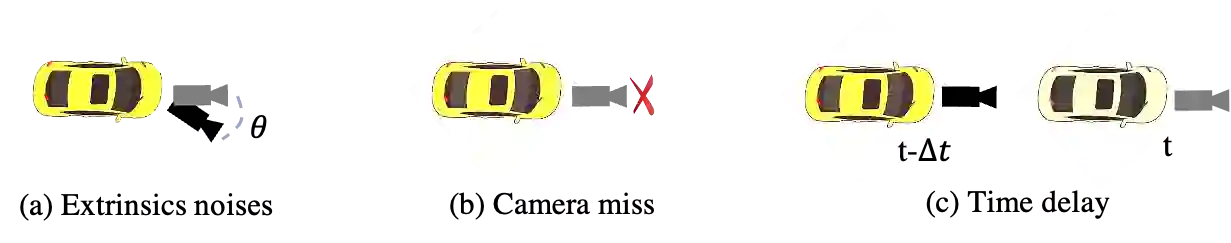

In this paper, we propose PETRv2, a unified framework for 3D perception from multi-view images. Building on PETR, PETRv2 explores the effectiveness of temporal modeling, which uses the temporal information of previous frames to boost 3D object detection. More specifically, we extend the 3D position embedding (3D PE) in PETR for temporal modeling. The 3D PE achieves temporal alignment of object positions across different frames. A feature-guided position encoder is further introduced to improve the data adaptability of the 3D PE. To support high-quality BEV segmentation, PETRv2 provides a simple yet effective solution by adding a set of segmentation queries. Each segmentation query is responsible for segmenting one specific patch of the BEV map. PETRv2 achieves state-of-the-art performance on 3D object detection and BEV segmentation. A detailed robustness analysis is also conducted on the PETR framework. We hope PETRv2 can serve as a strong baseline for 3D perception. Code is available at <https://github.com/megvii-research/PETR>.
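
The abstract does not spell out how the temporal alignment is computed. One plausible reading is that the 3D points used to build the position embedding for a previous frame are re-expressed in the current frame's coordinate system through a rigid transform derived from ego motion. The sketch below illustrates only that coordinate change, in plain Python; the names `make_pose` and `align_points` and the example ego motion are hypothetical and are not taken from the PETR code base.

```python
import math


def make_pose(yaw, tx, ty, tz):
    """Build a 4x4 rigid transform: rotation about the z-axis by `yaw`, then translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def align_points(points_prev, prev_to_curr):
    """Map 3D points from the previous frame's coordinates into the current frame's."""
    aligned = []
    for x, y, z in points_prev:
        h = (x, y, z, 1.0)  # homogeneous coordinates
        row = [sum(prev_to_curr[i][j] * h[j] for j in range(4)) for i in range(3)]
        aligned.append(tuple(row))
    return aligned


# Assumed ego motion: the vehicle moved 2 m forward (+x) between frames, no rotation.
# A static point 10 m ahead in the previous frame is then 8 m ahead in the current frame.
prev_to_curr = make_pose(yaw=0.0, tx=-2.0, ty=0.0, tz=0.0)
points_prev = [(10.0, 0.0, 0.0), (5.0, 1.0, 0.5)]
points_aligned = align_points(points_prev, prev_to_curr)
print(points_aligned)
```

Under this assumption, position embeddings for both frames would be generated from points that live in one shared coordinate system, so the same physical location receives a consistent encoding regardless of which frame observed it.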
