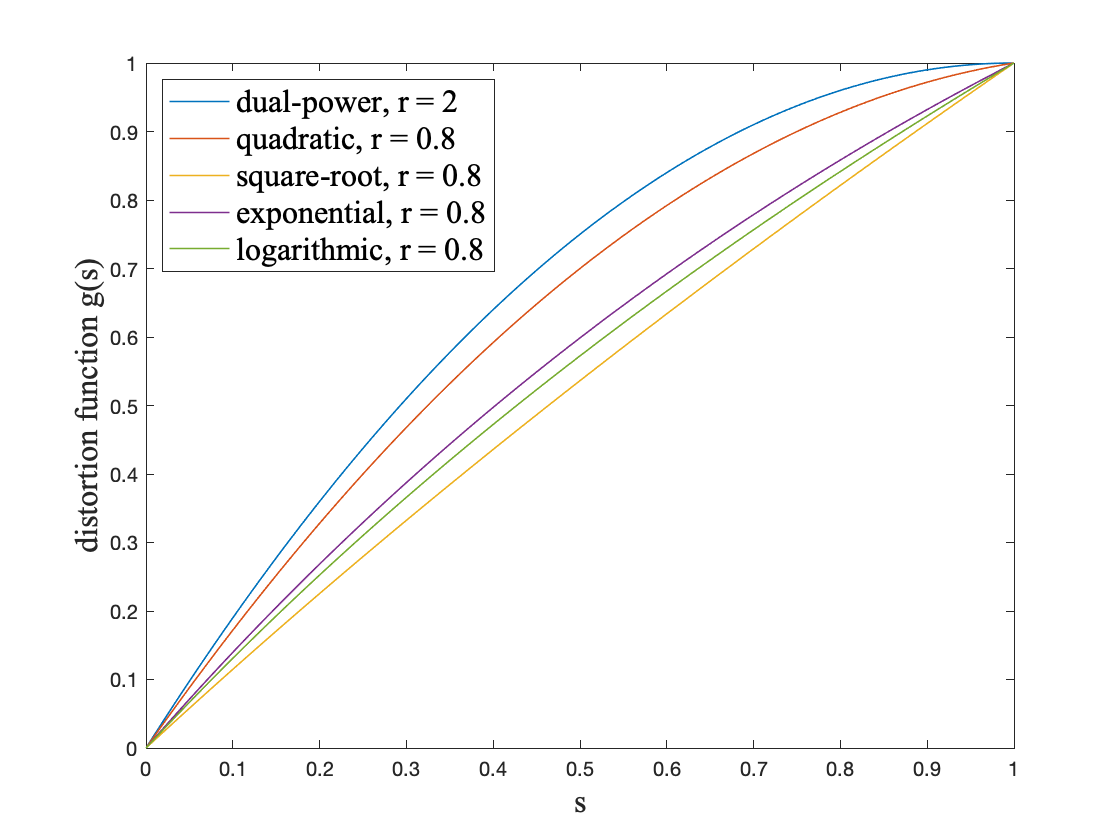

We propose approximate gradient ascent algorithms for the risk-sensitive reinforcement learning control problem in both on-policy and off-policy settings. We consider episodic Markov decision processes and model the risk using a distortion risk measure (DRM) of the cumulative discounted reward. Our algorithms estimate the DRM using order statistics of the cumulative rewards and compute approximate gradients from these DRM estimates using a smoothed functional-based gradient estimation scheme. We derive non-asymptotic bounds that establish the convergence of the proposed algorithms to an approximate stationary point of the DRM objective.
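
The two estimation steps can be illustrated with a minimal Python sketch. It assumes the common DRM definition $\rho_g(X) = \int_0^\infty g(P(X > x))\,dx + \int_{-\infty}^0 [g(P(X > x)) - 1]\,dx$ with a non-decreasing distortion $g$ satisfying $g(0) = 0$ and $g(1) = 1$. On the empirical distribution of $m$ sampled returns this reduces to a weighted sum of order statistics, and gradients are then estimated with a two-sided Gaussian smoothed-functional (SF) estimator. The environment is replaced by a hypothetical `simulate_returns` function, and every name here (`drm_estimate`, `lower_tail_cvar_distortion`, `sf_gradient`, `drm_gradient_ascent`) is illustrative. The paper's exact estimators, perturbation distribution, and step sizes may differ, and its off-policy (importance-sampling) variant is not shown.

```python
import random
from typing import Callable, List, Sequence

Distortion = Callable[[float], float]


def drm_estimate(returns: Sequence[float], g: Distortion) -> float:
    """DRM of the empirical distribution of the sampled returns.

    With order statistics X_(1) <= ... <= X_(m), X_(i) gets weight
    g((m-i+1)/m) - g((m-i)/m); the weights telescope to g(1) - g(0) = 1.
    """
    xs = sorted(returns)  # order statistics
    m = len(xs)
    return sum((g((m - i + 1) / m) - g((m - i) / m)) * x
               for i, x in enumerate(xs, start=1))


def lower_tail_cvar_distortion(alpha: float) -> Distortion:
    """Piecewise-linear g whose DRM is the mean of the worst alpha-fraction of returns."""
    return lambda s: min(1.0, max(0.0, (s - (1.0 - alpha)) / alpha))


def simulate_returns(theta: List[float], m: int, rng: random.Random) -> List[float]:
    """HYPOTHETICAL stand-in for running m episodes of a policy with parameter
    theta and recording each episode's cumulative discounted reward."""
    base = -(theta[0] - 1.0) ** 2 - (theta[1] + 0.5) ** 2
    return [base + 0.1 * rng.gauss(0.0, 1.0) for _ in range(m)]


def sf_gradient(objective: Callable[[List[float]], float], theta: List[float],
                mu: float, num_dirs: int, rng: random.Random) -> List[float]:
    """Two-sided Gaussian SF estimate: average over u ~ N(0, I) of
    (f(theta + mu*u) - f(theta - mu*u)) / (2*mu) * u."""
    d = len(theta)
    grad = [0.0] * d
    for _ in range(num_dirs):
        u = [rng.gauss(0.0, 1.0) for _ in range(d)]
        plus = [t + mu * ui for t, ui in zip(theta, u)]
        minus = [t - mu * ui for t, ui in zip(theta, u)]
        scale = (objective(plus) - objective(minus)) / (2.0 * mu)
        for j in range(d):
            grad[j] += scale * u[j] / num_dirs
    return grad


def drm_gradient_ascent(theta0: List[float], g: Distortion, iters: int = 200,
                        step: float = 0.05, mu: float = 0.1, num_dirs: int = 10,
                        m: int = 100, seed: int = 0) -> List[float]:
    """On-policy-style ascent: each DRM evaluation uses m fresh episodes at the
    perturbed parameter; theta then moves along the SF gradient estimate."""
    rng = random.Random(seed)

    def objective(th: List[float]) -> float:
        return drm_estimate(simulate_returns(th, m, rng), g)

    theta = list(theta0)
    for _ in range(iters):
        grad = sf_gradient(objective, theta, mu, num_dirs, rng)
        theta = [t + step * gj for t, gj in zip(theta, grad)]
    return theta
```

With `lower_tail_cvar_distortion(0.25)` the objective is the mean of the worst quarter of the sampled returns, while `lambda s: s` recovers the ordinary expected return. In this toy the reward noise does not depend on `theta`, so both choices share the same maximizer; the example only shows how the pieces fit together.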
