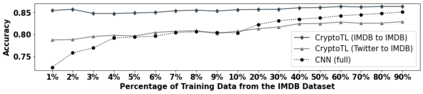

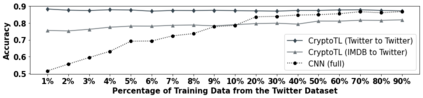

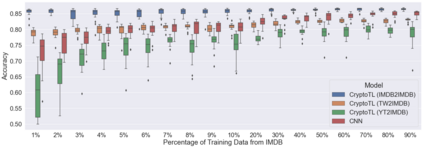

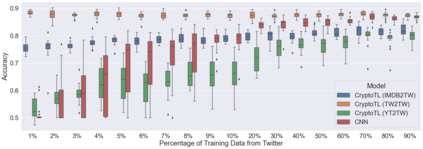

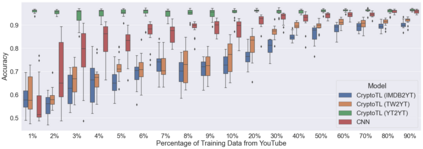

Big data has been a pervasive catchphrase in recent years, but dealing with data scarcity has become a crucial question for many real-world deep learning (DL) applications. Transfer learning (TL) is a popular methodology for efficiently training DL models on tasks where little data is available. TL transfers knowledge from a general domain to a specific target domain. However, such a knowledge transfer may put privacy at risk when sensitive or private data is involved. With CryptoTL we introduce a solution to this problem and show, for the first time, a cryptographic privacy-preserving TL approach based on homomorphic encryption (HE) that is efficient and feasible for real-world use cases. We achieve this by carefully designing the framework such that training is always done in plaintext while still profiting from the privacy gained by homomorphic encryption. To demonstrate the efficiency of our framework, we instantiate it with the popular CKKS HE scheme and apply CryptoTL to classification tasks with small datasets, showing the applicability of our approach to sentiment analysis and spam detection. Additionally, we highlight how our approach can be combined with differential privacy to further increase the security guarantees. Our extensive benchmarks show that CryptoTL achieves high accuracy while keeping fine-tuning and classification runtimes practical despite the use of homomorphic encryption. Concretely, one forward pass through the encrypted layers of our setup takes roughly 1 s on a notebook CPU.
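The data flow described in the abstract can be illustrated with a minimal sketch. It is not the CryptoTL implementation, and it rests on several assumptions that the abstract does not spell out: a server holds the pretrained lower layers, a client encrypts its inputs, the server evaluates those layers on ciphertexts, and the client decrypts the resulting features and trains a small classifier head in plaintext. `MockCiphertext`, `encrypt`, `decrypt`, `lower_layers`, and `train_head` are hypothetical names, and `MockCiphertext` performs no encryption at all; it only mimics the restriction that HE schemes such as CKKS natively support additions and multiplications, which is why the sketch uses a square activation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MockCiphertext:
    """Toy stand-in for a CKKS ciphertext. NOT encryption: it only mimics
    the restricted interface (addition, multiplication) that HE exposes."""
    _value: float

    def __add__(self, other):
        o = other._value if isinstance(other, MockCiphertext) else other
        return MockCiphertext(self._value + o)

    __radd__ = __add__

    def __mul__(self, other):
        o = other._value if isinstance(other, MockCiphertext) else other
        return MockCiphertext(self._value * o)

    __rmul__ = __mul__


def encrypt(xs):
    """Hypothetical client-side encryption (here: plain wrapping)."""
    return [MockCiphertext(x) for x in xs]


def decrypt(cs):
    """Hypothetical client-side decryption (here: plain unwrapping)."""
    return [c._value for c in cs]


def lower_layers(x, weights, bias):
    """Assumed pretrained layer on the server: affine map followed by a
    square activation. Uses only + and *, so it accepts floats or
    MockCiphertext values alike."""
    out = []
    for row, b in zip(weights, bias):
        acc = b
        for w, xi in zip(row, x):
            acc = xi * w + acc
        out.append(acc * acc)
    return out


def train_head(features, labels, epochs=200, lr=0.5):
    """Plaintext logistic-regression head, trained by the client with SGD."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            g = 1.0 / (1.0 + math.exp(-z)) - y  # gradient of the log-loss
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b


# Made-up weights and data, for illustration only.
W, B = [[1.0, -1.0], [0.5, 0.5]], [0.0, 0.1]
inputs = [[0.9, 0.1], [0.8, 0.2], [0.5, 0.5], [0.45, 0.55]]
labels = [1, 1, 0, 0]

# Client encrypts, server evaluates on ciphertexts, client decrypts.
features = [decrypt(lower_layers(encrypt(x), W, B)) for x in inputs]
# Fine-tuning happens entirely on decrypted features, i.e. in plaintext.
head_w, head_b = train_head(features, labels)
```

In a real deployment the mock class would be replaced by a CKKS implementation, and the decrypted features would carry small approximation errors, since CKKS is an approximate scheme.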
