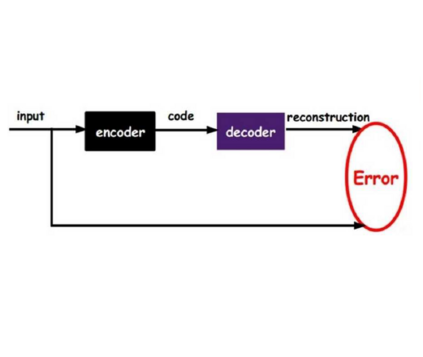

Attracted by the scalability of Turbo-autoencoders to practical codeword lengths, we revisit their use for end-to-end learning of PHY-layer communications. To this end, we study the existing Turbo-autoencoder concepts from the literature and compare them with state-of-the-art classical coding schemes. We propose a new component-wise training algorithm, based on the idea of Gaussian a priori distributions, that reduces the overall training time by almost an order of magnitude. Further, we propose a new serial architecture inspired by classical serially concatenated Turbo code structures and show that a carefully optimized interface between the two component autoencoders is required. To the best of our knowledge, these serial Turbo-autoencoder structures are the best-performing known neural-network-based learned sequences that can be trained from scratch without any expert knowledge in the domain of channel codes.
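The idea of Gaussian a priori distributions can be illustrated with the consistent-Gaussian LLR model familiar from EXIT-chart analysis. In that model, a priori information for a BPSK-mapped bit x ∈ {+1, −1} is L_A = (σ_A²/2)·x + n with n ~ N(0, σ_A²). A component decoder can then be trained in isolation by feeding it synthetic L_A instead of the other component's output. The following is a minimal sketch under that assumption only. The helper names `gaussian_a_priori_llrs` and `component_training_batch`, and the choice to draw σ_A uniformly per codeword, are hypothetical illustrations and not the paper's method.

```python
import random


def gaussian_a_priori_llrs(bits, sigma_a, rng):
    """Hypothetical helper: draw a priori LLRs under the consistent-Gaussian
    model L_A = (sigma_a^2 / 2) * x + n, with n ~ N(0, sigma_a^2)."""
    llrs = []
    for b in bits:
        x = 1.0 - 2.0 * b  # BPSK mapping: bit 0 -> +1, bit 1 -> -1
        mean = 0.5 * sigma_a ** 2 * x  # consistency: mean = variance / 2
        llrs.append(mean + rng.gauss(0.0, sigma_a))
    return llrs


def component_training_batch(batch_size, block_len, sigma_max, rng):
    """Hypothetical: random info bits paired with Gaussian a priori LLRs.
    sigma_a is drawn uniformly from [0, sigma_max] per codeword (an assumption)
    so the component decoder sees a range of a priori reliabilities."""
    batch = []
    for _ in range(batch_size):
        bits = [rng.randint(0, 1) for _ in range(block_len)]
        sigma_a = rng.uniform(0.0, sigma_max)
        batch.append((bits, gaussian_a_priori_llrs(bits, sigma_a, rng)))
    return batch


# Usage: a small batch for training one component decoder on its own.
rng = random.Random(0)
batch = component_training_batch(batch_size=4, block_len=8, sigma_max=4.0, rng=rng)
```

With σ_A = 0 the a priori input carries no information, and as σ_A grows the LLRs become increasingly reliable. Sampling across this range during training is one way to expose a component to the conditions it would meet inside iterative decoding.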
