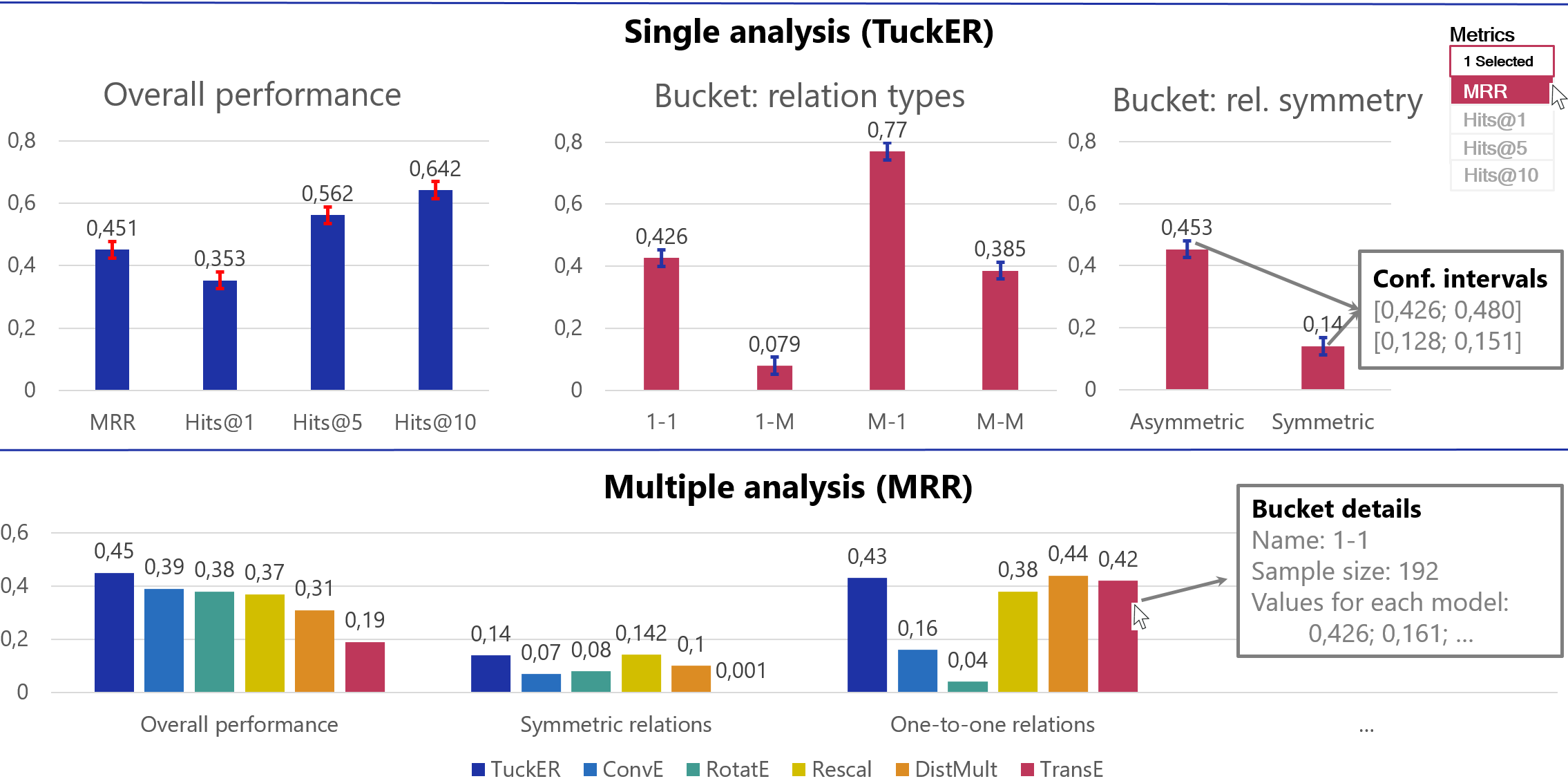

Knowledge Graphs (KGs) store information in the form of (head, predicate, tail) triples. To augment KGs with new knowledge, researchers have proposed models for KG Completion (KGC) tasks such as link prediction, i.e., answering (h, p, ?) or (?, p, t) queries. Such models are usually evaluated with averaged metrics on a held-out test set. While useful for tracking progress, averaged single-score metrics cannot reveal what exactly a model has learned, or failed to learn. To address this issue, we propose KGxBoard: an interactive framework for performing fine-grained evaluation on meaningful subsets of the data, each of which tests an individual and interpretable capability of a KGC model. In our experiments, we highlight findings discovered with KGxBoard that would have been impossible to detect with standard averaged single-score metrics.
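
The contrast between a single averaged score and per-subset scores can be illustrated with a minimal Python sketch. This is not KGxBoard's implementation or API. It assumes each test query (h, p, ?) has already been scored, so the rank of the correct tail is known, and it computes mean reciprocal rank (MRR) and Hits@k. The abstract does not name these metrics, so treat them as an assumption. The `RankedQuery` record, the `sliced_report` helper, grouping by predicate, and the toy ranks are all hypothetical and purely illustrative.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, List, NamedTuple


class RankedQuery(NamedTuple):
    """Hypothetical record for one (h, p, ?) test query."""
    head: str
    predicate: str
    rank: int  # rank of the true tail among all candidates (1 = best)


def mrr(ranks: List[int]) -> float:
    """Mean reciprocal rank over a non-empty list of ranks."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at_k(ranks: List[int], k: int) -> float:
    """Fraction of queries whose true answer is ranked within the top k."""
    return sum(r <= k for r in ranks) / len(ranks)


def sliced_report(
    queries: List[RankedQuery],
    key: Callable[[RankedQuery], Hashable],
    k: int = 10,
) -> Dict[Hashable, dict]:
    """Group queries by a slicing function and compute metrics per subset."""
    groups: Dict[Hashable, List[int]] = defaultdict(list)
    for q in queries:
        groups[key(q)].append(q.rank)
    return {
        name: {"n": len(ranks), "mrr": mrr(ranks), f"hits@{k}": hits_at_k(ranks, k)}
        for name, ranks in groups.items()
    }


# Toy, made-up ranks for illustration only (not experimental results).
TOY_QUERIES = [
    RankedQuery("Berlin", "capital_of", 1),
    RankedQuery("Paris", "capital_of", 1),
    RankedQuery("Alice", "born_in", 50),
    RankedQuery("Bob", "born_in", 20),
]

if __name__ == "__main__":
    overall = [q.rank for q in TOY_QUERIES]
    print(f"overall MRR: {mrr(overall):.3f}")
    for rel, stats in sliced_report(TOY_QUERIES, key=lambda q: q.predicate).items():
        print(rel, stats)
```

On the toy data, the overall MRR of roughly 0.52 hides the fact that every `capital_of` query is answered perfectly while no `born_in` query reaches the top 10. A per-slice view surfaces that split. Swapping the `key` function (e.g., slicing by head-entity degree) is one way to define other subsets under the same assumptions.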
