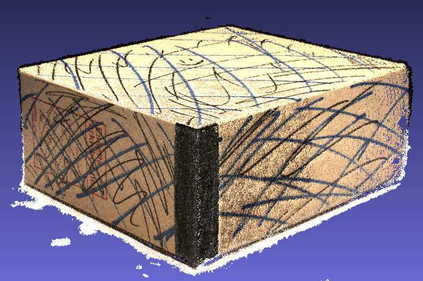

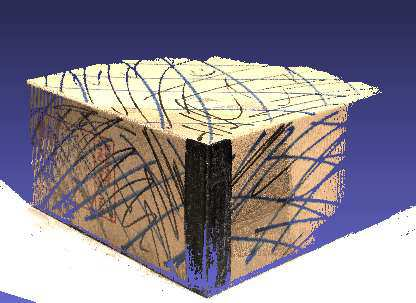

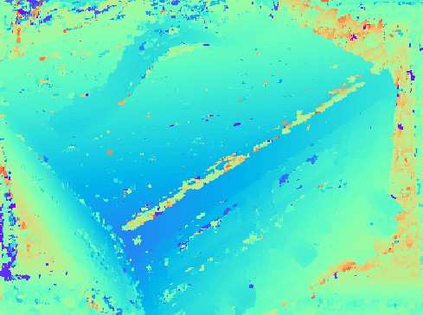

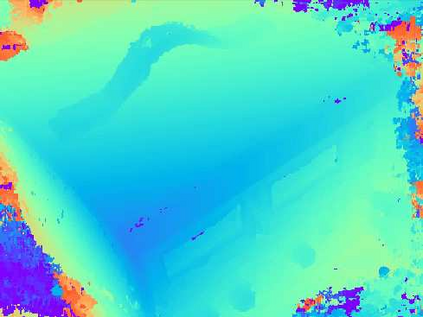

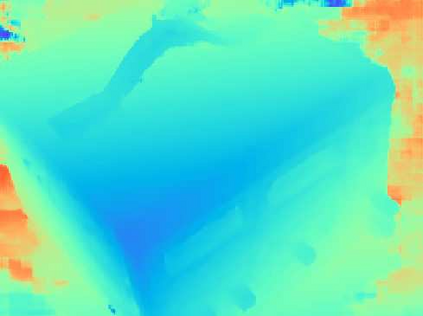

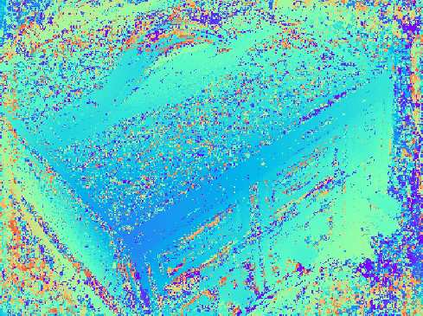

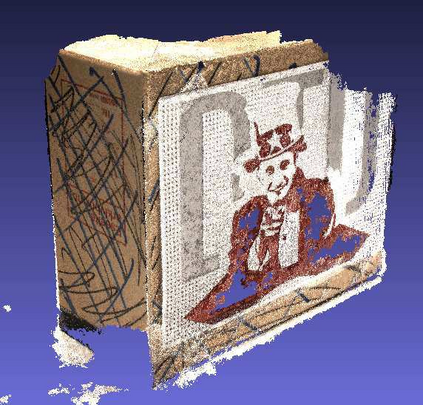

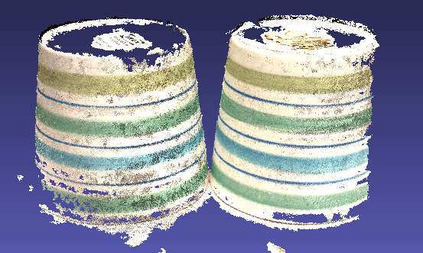

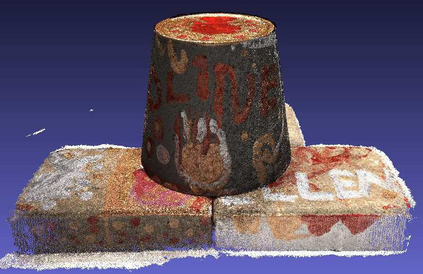

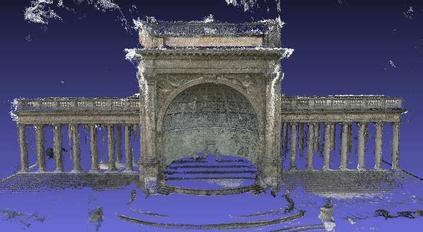

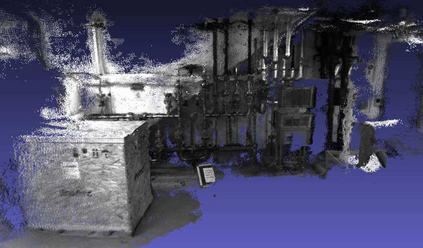

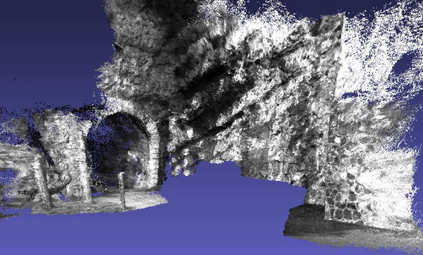

Deep learning has recently demonstrated excellent performance on multi-view stereo (MVS). However, a major limitation of current learned MVS approaches is scalability: memory-intensive cost volume regularization makes learned MVS hard to apply to high-resolution scenes. In this paper, we introduce a scalable multi-view stereo framework based on a recurrent neural network. Instead of regularizing the entire 3D cost volume in one go, the proposed Recurrent Multi-view Stereo Network (R-MVSNet) sequentially regularizes the 2D cost maps along the depth direction via a gated recurrent unit (GRU). This dramatically reduces memory consumption and makes high-resolution reconstruction feasible. We first show that the proposed R-MVSNet achieves state-of-the-art performance on recent MVS benchmarks. We then demonstrate the scalability of the method on several large-scale scenarios where previous learned approaches often fail due to memory constraints. Code is available at https://github.com/YoYo000/MVSNet.
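To make the memory argument concrete, the following is a minimal NumPy sketch of regularizing 2D cost maps one depth plane at a time with a GRU, rather than holding the whole 3D cost volume in memory. It is an illustration under stated assumptions, not the released R-MVSNet code: the class `PixelwiseGRU`, the function `regularize_sequentially`, the random weights, the per-pixel (1x1) gates standing in for learned convolutions, and the running arg-max used to pick a depth index are all hypothetical choices made for this example.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class PixelwiseGRU:
    """GRU cell applied independently at each pixel (a 1x1 'convolution').

    Assumption: a real network would use learned spatial convolutions;
    random weights and per-pixel gates keep this sketch short.
    """

    def __init__(self, in_ch, hid_ch, rng):
        shape = (in_ch + hid_ch, hid_ch)
        self.w_z = rng.normal(0.0, 0.1, shape)  # update gate weights
        self.w_r = rng.normal(0.0, 0.1, shape)  # reset gate weights
        self.w_h = rng.normal(0.0, 0.1, shape)  # candidate state weights
        self.w_out = rng.normal(0.0, 0.1, (hid_ch,))  # hidden -> scalar score

    def step(self, x, h):
        """x: (H, W, in_ch) cost map, h: (H, W, hid_ch) previous state."""
        xh = np.concatenate([x, h], axis=-1)
        z = sigmoid(xh @ self.w_z)
        r = sigmoid(xh @ self.w_r)
        h_cand = np.tanh(np.concatenate([x, r * h], axis=-1) @ self.w_h)
        # Blend the previous state and the candidate with the update gate.
        return (1.0 - z) * h + z * h_cand


def regularize_sequentially(cost_maps, cell, hid_ch):
    """Consume cost maps one depth plane at a time.

    cost_maps: iterable of (H, W, C) arrays, one per depth hypothesis.
    Only the hidden state and a running best score/index are kept, so
    memory does not grow with the number of depth planes.
    """
    h = best_score = best_index = None
    for d, cost in enumerate(cost_maps):
        if h is None:
            hw = cost.shape[:2]
            h = np.zeros(hw + (hid_ch,))
            best_score = np.full(hw, -np.inf)
            best_index = np.zeros(hw, dtype=np.int64)
        h = cell.step(cost, h)
        score = h @ cell.w_out  # (H, W) regularized score for plane d
        better = score > best_score
        best_score = np.where(better, score, best_score)
        best_index = np.where(better, d, best_index)
    return best_index


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cell = PixelwiseGRU(in_ch=4, hid_ch=8, rng=rng)
    # A generator yields one plane at a time; the volume is never stored.
    planes = (rng.normal(size=(5, 6, 4)) for _ in range(10))
    depth_index = regularize_sequentially(planes, cell, hid_ch=8)
    print(depth_index.shape)
```

In this sketch the state carried between depth planes has size H x W x hidden channels, independent of the number of depth hypotheses D, whereas regularizing the full volume at once would need all D planes resident simultaneously.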
