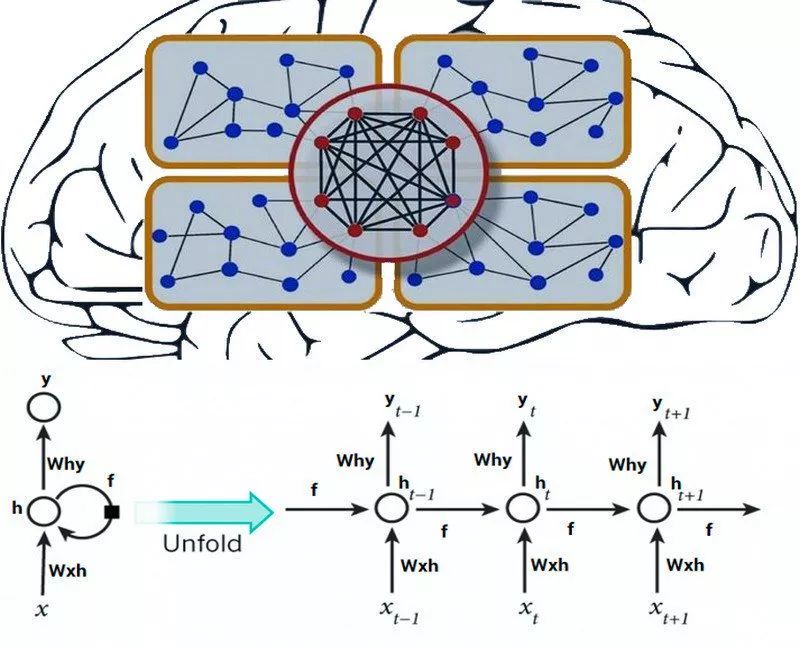

LibRec Picks: Building an RNN from Scratch

The brave are not those who never shed tears, but those who are willing to keep running with tears in their eyes.

[DL Basics Tutorial] Building an RNN from Scratch (Python)

Link: https://www.analyticsvidhya.com/blog/2019/01/fundamentals-deep-learning-recurrent-neural-networks-scratch-python/

Recent Hot Papers

1. Misspelling Oblivious Word Embeddings

Bora Edizel, Aleksandra Piktus, Piotr Bojanowski, Rui Ferreira, Edouard Grave, Fabrizio Silvestri

https://arxiv.org/abs/1905.09755v1

In this paper we present a method to learn word embeddings that are resilient to misspellings. Existing word embeddings have limited applicability to malformed texts, which contain a non-negligible amount of out-of-vocabulary words. In our method, misspellings of each word are embedded close to their correct variants.

2. Interpreting and improving natural-language processing (in machines) with natural language-processing (in the brain)

Mariya Toneva, Leila Wehbe

https://arxiv.org/abs/1905.11833v1

Despite much work, it is still unclear what the representations learned by neural NLP models correspond to. We propose here a novel approach for interpreting neural networks that relies on the only processing system we have that does understand language: the human brain. We use brain imaging recordings of subjects reading complex natural text to interpret word and sequence embeddings from four recent NLP models: ELMo, USE, BERT and Transformer-XL.

3. MatchZoo: A Learning, Practicing, and Developing System for Neural Text Matching

Jiafeng Guo, Yixing Fan, Xiang Ji, Xueqi Cheng

https://arxiv.org/abs/1905.10289v1

Recently, deep learning technology has been widely adopted for text matching, making neural text matching a new and active research domain. With a large number of neural matching models emerging rapidly, it is becoming more and more difficult for researchers, especially newcomers, to learn and understand these new models. In this paper, therefore, we present a novel system, namely MatchZoo, to facilitate the learning, practicing and designing of neural text matching models.

4. QuesNet: A Unified Representation for Heterogeneous Test Questions

Yu Yin, Qi Liu, Zhenya Huang, Enhong Chen, Wei Tong, Shijin Wang, Yu Su

https://arxiv.org/abs/1905.10949v1

Understanding test questions is a crucial issue in online learning systems, and it can benefit many applications in the education domain. Specifically, we first design a unified framework to aggregate question information with its heterogeneous inputs into a comprehensive vector. Then we propose a two-level hierarchical pre-training algorithm to learn a better understanding of test questions in an unsupervised way.

5. Compositional pre-training for neural semantic parsing

Amir Ziai

https://arxiv.org/abs/1905.11531v1

Semantic parsing is the process of translating natural language utterances into logical forms, which has many important applications such as question answering and instruction following. Prior work has used frameworks for inducing grammars over the training examples, which capture conditional independence properties that the model can leverage. In addition, since the pre-training stage is separate from the training on the main task, we also expand the universe of possible augmentations without causing catastrophic inference.