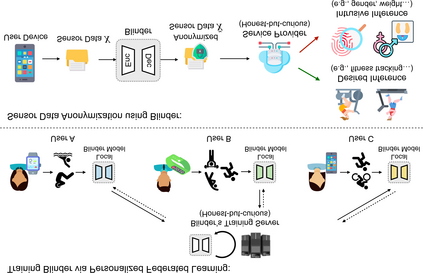

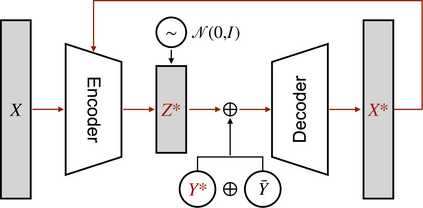

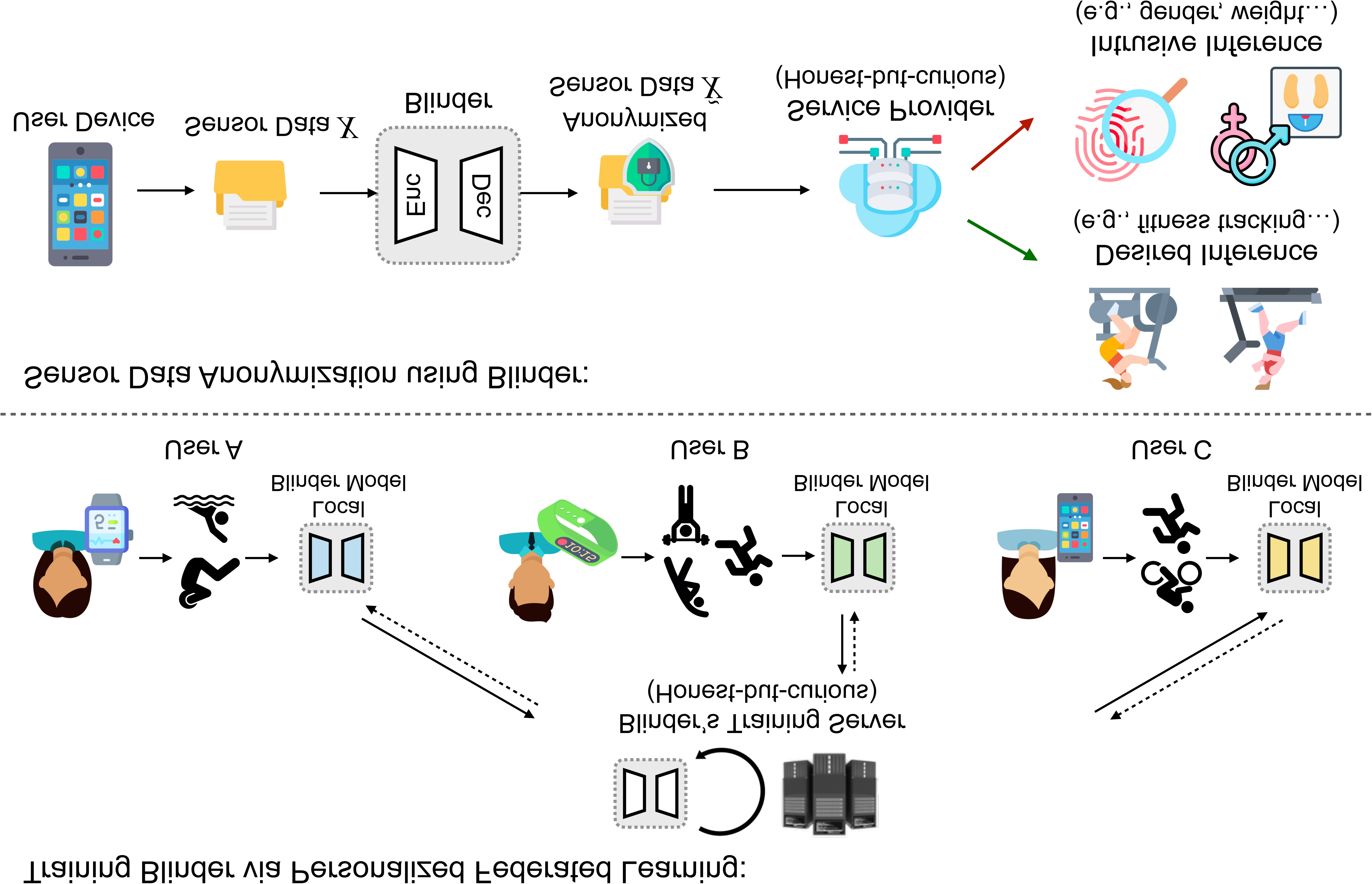

This paper proposes a sensor data anonymization model that is trained on decentralized data and strikes a desirable trade-off between data utility and privacy, even in heterogeneous settings where the collected sensor data have different underlying distributions. Our anonymization model, dubbed Blinder, is based on a variational autoencoder and discriminator networks trained in an adversarial fashion. We use the model-agnostic meta-learning framework to adapt the anonymization model trained via federated learning to each user's data distribution. We evaluate Blinder under different settings and show that it provides end-to-end privacy protection at the cost of increasing privacy loss by up to 4.00% and decreasing data utility by up to 4.24%, compared to the state-of-the-art anonymization model trained on centralized data. Our experiments confirm that Blinder can obscure multiple private attributes at once, and has sufficiently low power consumption and computational overhead for it to be deployed on edge devices and smartphones to perform real-time anonymization of sensor data.
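
The combination of federated training and per-user meta-learned adaptation described above can be illustrated with a toy sketch. The code below is not Blinder itself: it replaces the variational autoencoder and discriminators with a hypothetical one-parameter regression model, and it uses a first-order approximation of model-agnostic meta-learning rather than the full second-order update. All function names, learning rates, and the synthetic client data are assumptions made for illustration only.

```python
# Toy sketch (assumption: NOT the Blinder architecture). Each "user" holds
# data from a different distribution y = slope * x, mimicking heterogeneity.

def mse(w, data):
    """Mean squared error of the 1-D model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def mse_grad(w, data):
    """Gradient of the mean squared error with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in data) / len(data)

def make_client(slope):
    """Hypothetical client with a support set (adaptation) and a query set."""
    support = [(x, slope * x) for x in (-1.0, 0.5)]
    query = [(x, slope * x) for x in (-0.5, 1.0)]
    return support, query

def local_meta_gradient(w, support, query, inner_lr):
    """Client side: adapt on support data, then take the query gradient at
    the adapted weights (first-order approximation of the meta-gradient)."""
    w_adapted = w - inner_lr * mse_grad(w, support)
    return mse_grad(w_adapted, query)

def federated_meta_train(clients, rounds=200, inner_lr=0.05, outer_lr=0.05):
    """Server side: average client meta-gradients; raw data never leaves a client."""
    w = 0.0
    for _ in range(rounds):
        grads = [local_meta_gradient(w, s, q, inner_lr) for s, q in clients]
        w -= outer_lr * sum(grads) / len(grads)
    return w

def personalize(w, support, inner_lr=0.05, steps=5):
    """On-device adaptation of the shared model to one user's distribution."""
    for _ in range(steps):
        w -= inner_lr * mse_grad(w, support)
    return w

clients = [make_client(slope) for slope in (1.0, 2.0, 3.0)]
w_shared = federated_meta_train(clients)

support, query = clients[2]              # the user whose slope is 3.0
w_user = personalize(w_shared, support)  # a few local gradient steps
print("shared:", w_shared, "personalized:", w_user)
print("query loss before/after:", mse(w_shared, query), mse(w_user, query))
```

In this sketch only gradients are sent to the server, and a handful of local gradient steps moves the shared parameter toward the individual user's distribution, which is the role the abstract assigns to meta-learning in heterogeneous settings.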
