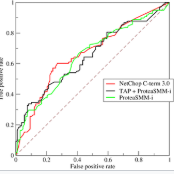

Human tissue and its constituent cells form a microenvironment that is fundamentally three-dimensional (3D). However, the standard-of-care in pathologic diagnosis involves selecting a few two-dimensional (2D) sections for microscopic evaluation, risking sampling bias and misdiagnosis. Diverse methods for capturing 3D tissue morphologies have been developed, but they have yet had little translation to clinical practice; manual and computational evaluations of such large 3D data have so far been impractical and/or unable to provide patient-level clinical insights. Here we present Modality-Agnostic Multiple instance learning for volumetric Block Analysis (MAMBA), a deep-learning-based platform for processing 3D tissue images from diverse imaging modalities and predicting patient outcomes. Archived prostate cancer specimens were imaged with open-top light-sheet microscopy or microcomputed tomography and the resulting 3D datasets were used to train risk-stratification networks based on 5-year biochemical recurrence outcomes via MAMBA. With the 3D block-based approach, MAMBA achieves an area under the receiver operating characteristic curve (AUC) of 0.86 and 0.74, superior to 2D traditional single-slice-based prognostication (AUC of 0.79 and 0.57), suggesting superior prognostication with 3D morphological features. Further analyses reveal that the incorporation of greater tissue volume improves prognostic performance and mitigates risk prediction variability from sampling bias, suggesting the value of capturing larger extents of heterogeneous 3D morphology. With the rapid growth and adoption of 3D spatial biology and pathology techniques by researchers and clinicians, MAMBA provides a general and efficient framework for 3D weakly supervised learning for clinical decision support and can help to reveal novel 3D morphological biomarkers for prognosis and therapeutic response.

翻译:暂无翻译