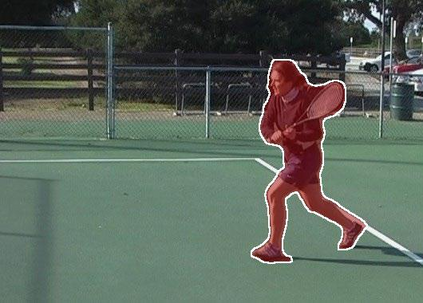

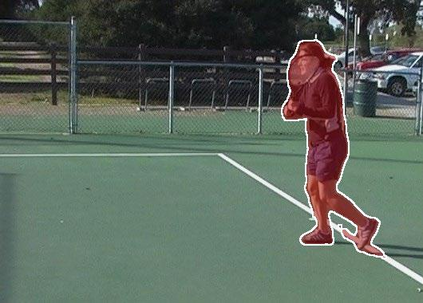

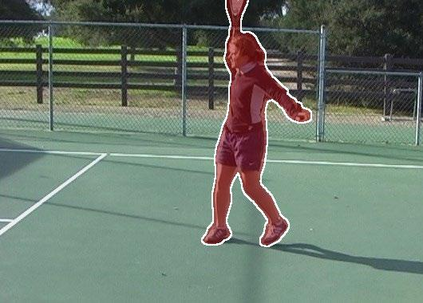

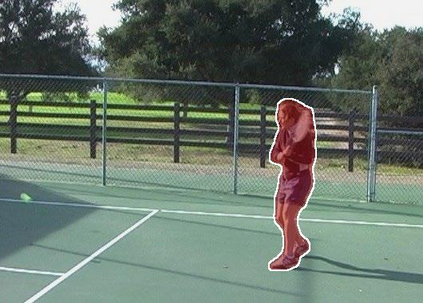

Appearance and motion are two important sources of information in video object segmentation (VOS). Previous methods mainly focus on using simplex solutions, lowering the upper bound of feature collaboration among and across these two cues. In this paper, we study a novel framework, termed the FSNet (Full-duplex Strategy Network), which designs a relational cross-attention module (RCAM) to achieve the bidirectional message propagation across embedding subspaces. Furthermore, the bidirectional purification module (BPM) is introduced to update the inconsistent features between the spatial-temporal embeddings, effectively improving the model robustness. By considering the mutual restraint within the full-duplex strategy, our FSNet performs the cross-modal feature-passing (i.e., transmission and receiving) simultaneously before the fusion and decoding stage, making it robust to various challenging scenarios (e.g., motion blur, occlusion) in VOS. Extensive experiments on five popular benchmarks (i.e., DAVIS$_{16}$, FBMS, MCL, SegTrack-V2, and DAVSOD$_{19}$) show that our FSNet outperforms other state-of-the-arts for both the VOS and video salient object detection tasks.

翻译:显示和移动是视频对象分割(VOS)中两个重要信息来源。 以往的方法主要侧重于使用简单化解决方案,降低这两个提示之间和跨这两个提示之间特征合作的上限。 在本文中,我们研究一个叫FSNet(FSNet(FUNet)的新型框架,即FSNet(Full-duplex战略网络),它设计了一个关联交叉关注模块(RCAM),以便在嵌入的子空间中实现双向信息双向传播。此外,双向净化模块(BPM)被引入了双向净化模块,以更新空间-时空嵌入之间的不一致特征,有效改进模型的稳健性。我们FSNet(FSNet)在全双轨战略中相互约束,在聚变和解码阶段之前同时执行跨模式的特征通行(即传输和接收),使其对VOS系统的各种挑战性情景(如运动模糊、分解)进行强力。 在五种流行基准(即DAVIS$+16}、FBMS、SETRC、 SegTracrack-FS-st VS) 以及DSFSFSFSFSFS- 显示其他状态探测任务和DADSFSFS- 和DFSFSFSFSFSFSFDFSFDFDFDFDFS- T)

相关内容

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from a third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem