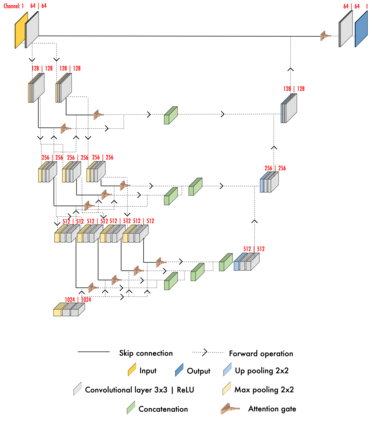

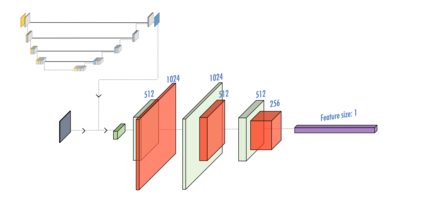

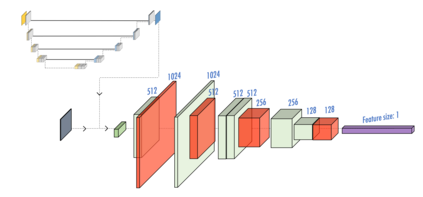

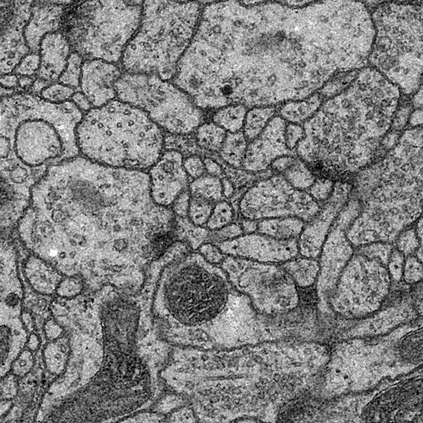

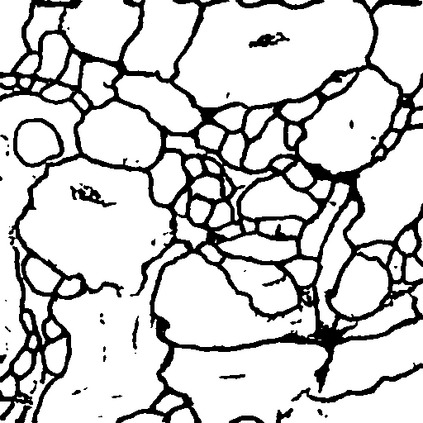

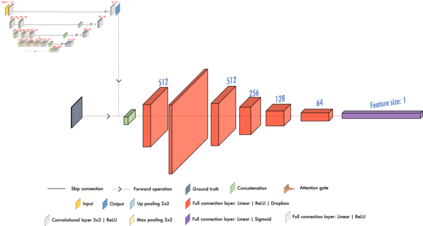

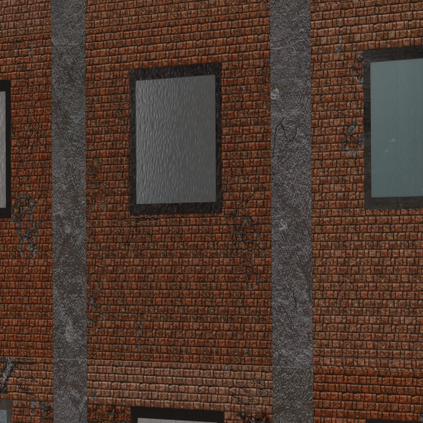

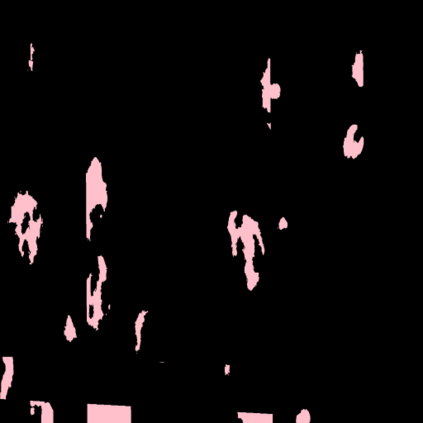

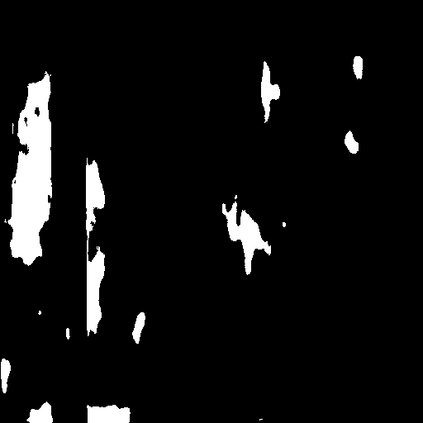

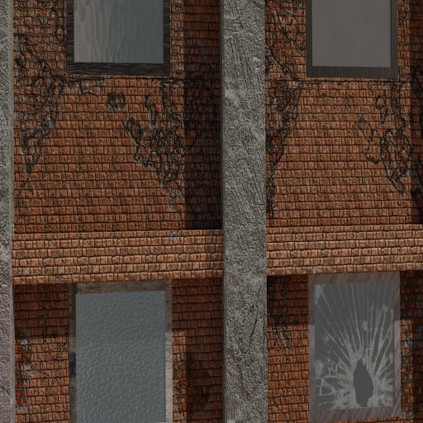

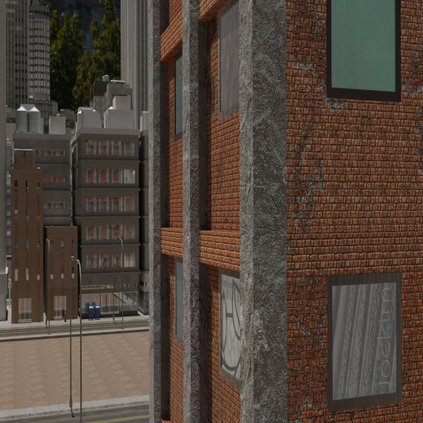

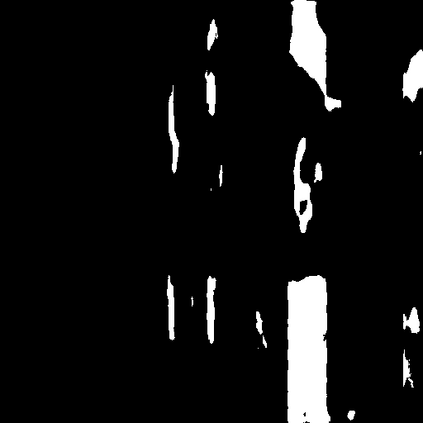

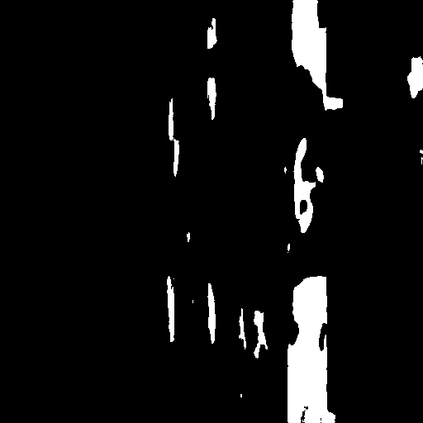

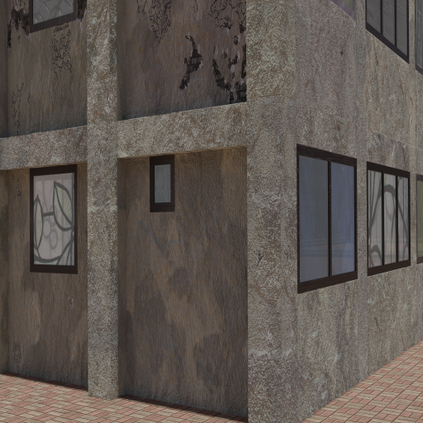

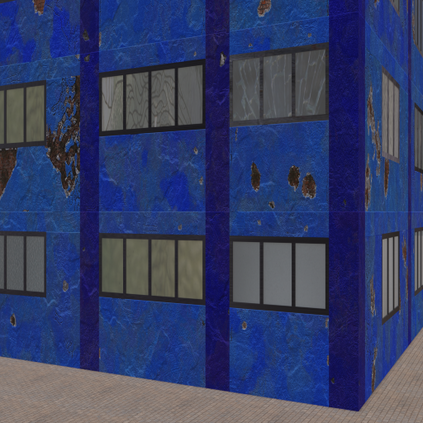

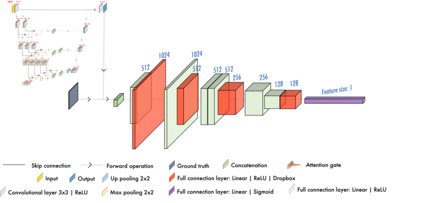

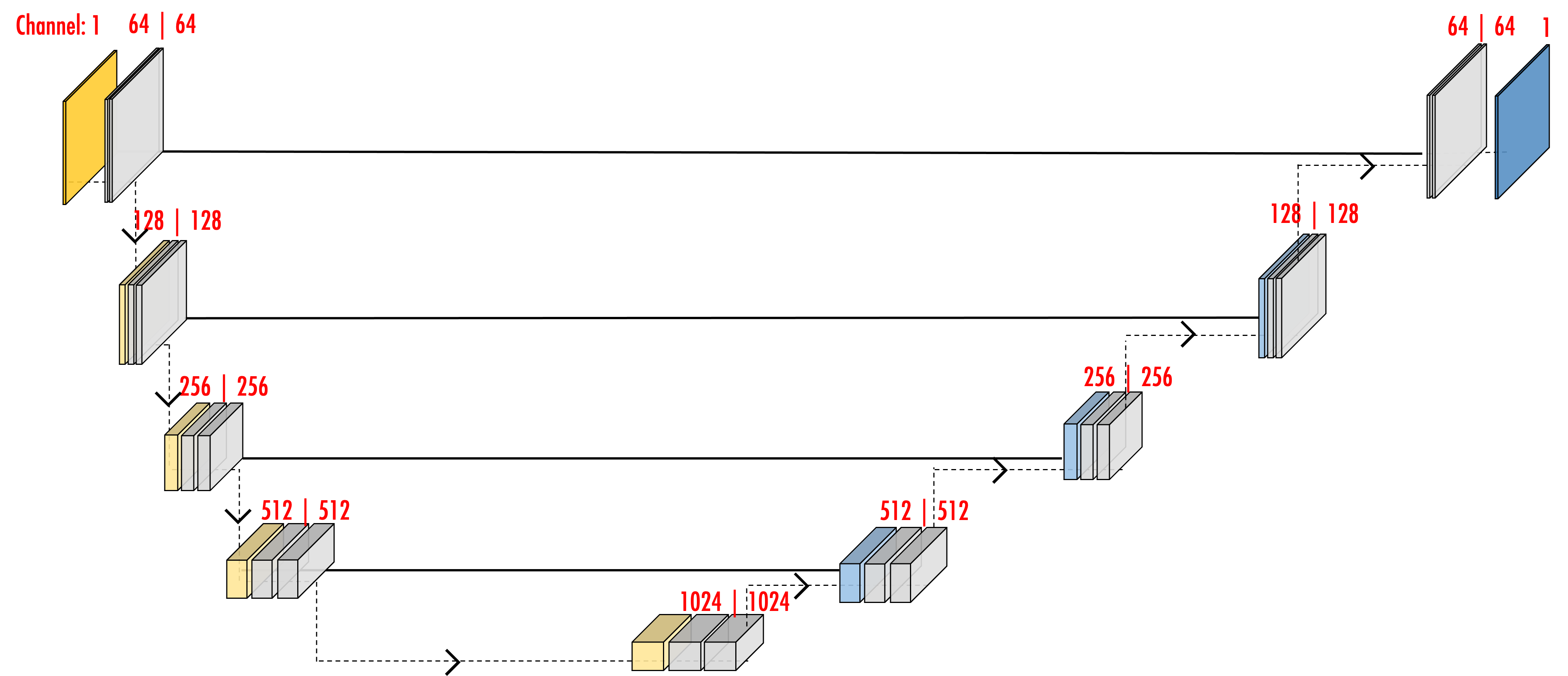

Semantic segmentation benefits from deep learning and has shown its potential for handling image data from on-site inspections, so it should be able to detect visual damage in facade images. Attention mechanisms and generative adversarial networks are two of the most popular strategies for improving the quality of semantic segmentation. Focusing on these two strategies, this paper adopts U-net, a representative convolutional neural network, as the primary network and presents a two-step comparative study. First, cell images are used to determine the most effective network among the U-nets with attention mechanisms and, separately, among the U-nets with generative adversarial networks. Subsequently, the networks selected in the first test, together with their combination, are applied to facade damage segmentation to investigate their performance. In addition, the combined effect of the attention mechanism and the generative adversarial network is identified and discussed.
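
The abstract does not say which attention mechanism the U-net variants use. As one illustration only, the sketch below assumes the additive attention gate popularized by Attention U-Net: the encoder skip features x and a gating signal g from a coarser decoder level are projected by 1×1 convolutions (modeled here as per-pixel linear maps), summed, passed through ReLU, and reduced to a per-pixel coefficient α in (0, 1) that rescales x before it is concatenated in the decoder. It is written in plain Python to stay self-contained; the names `attention_gate`, `w_x`, `w_g`, and `psi` are hypothetical, and g is assumed to be already resampled to the spatial size of x.

```python
import math
from typing import List, Tuple

Vector = List[float]
FeatureMap = List[List[Vector]]  # indexed [row][col][channel]


def linear(weights: List[Vector], v: Vector) -> Vector:
    """Per-pixel linear map, i.e. a 1x1 convolution without bias."""
    return [sum(w_i * v_i for w_i, v_i in zip(row, v)) for row in weights]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def attention_gate(x: FeatureMap, g: FeatureMap,
                   w_x: List[Vector], w_g: List[Vector],
                   psi: Vector, bias: float = 0.0
                   ) -> Tuple[FeatureMap, List[List[float]]]:
    """Hypothetical additive attention gate (Attention U-Net style).

    x: skip-connection features from the encoder.
    g: gating features, assumed already resampled to the size of x.
    Returns the gated skip features and the attention map alpha.
    """
    gated, alpha = [], []
    for x_row, g_row in zip(x, g):
        out_row, a_row = [], []
        for x_px, g_px in zip(x_row, g_row):
            # q = ReLU(W_x x + W_g g): joint intermediate representation
            q = [max(0.0, a + b)
                 for a, b in zip(linear(w_x, x_px), linear(w_g, g_px))]
            # alpha = sigmoid(psi^T q + bias): one coefficient per pixel
            a = sigmoid(sum(p * q_i for p, q_i in zip(psi, q)) + bias)
            out_row.append([a * c for c in x_px])  # rescale skip features
            a_row.append(a)
        gated.append(out_row)
        alpha.append(a_row)
    return gated, alpha


if __name__ == "__main__":
    # 1x2 single-channel maps with identity weights
    x = [[[1.0], [1.0]]]
    g = [[[2.0], [-2.0]]]
    _, alpha = attention_gate(x, g, [[1.0]], [[1.0]], [1.0])
    print([[round(a, 3) for a in row] for row in alpha])  # [[0.953, 0.5]]
```

In this toy input, the pixel where the gating signal reinforces the skip feature keeps a coefficient near 1, while the pixel where it cancels the skip feature drops to sigmoid(0) = 0.5, so the decoder relies relatively less on that region. In a real network the weights are learned and the gate operates on multi-channel tensors in a deep learning framework.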

翻译:通过深层学习获得的语义分解,并展示了处理现场视察的图形数据的可能性。结果,应发现表面图像的视觉损坏。注意机制和基因对抗网络是提高语义分解质量的最受欢迎的两种战略。本文以这两个战略为具体重点,将具有代表性的共生神经网络U-net(具有代表性的共生神经网络)作为主要网络,并分两个步骤进行比较研究。首先,利用细胞图像分别确定带有注意机制或基因对抗网络的U-net之间最有效的网络。随后,将第一次试验中选定的网络及其组合用于外形损害分解,以调查这些网络的性能。此外,还发现并讨论了注意机制和基因对抗网络的综合影响。