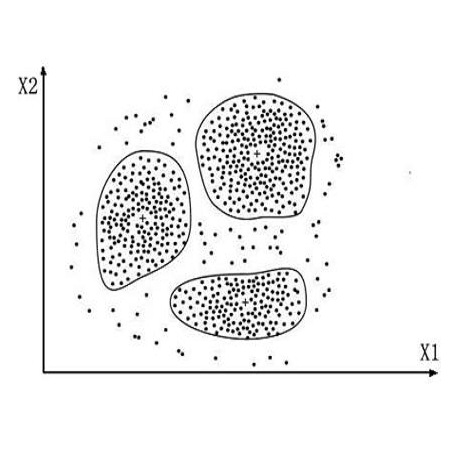

Contrastive learning has been frequently investigated to learn effective representations for text clustering tasks. While existing contrastive learning-based text clustering methods only focus on modeling instance-wise semantic similarity relationships, they ignore contextual information and underlying relationships among all instances that needs to be clustered. In this paper, we propose a novel text clustering approach called Subspace Contrastive Learning (SCL) which models cluster-wise relationships among instances. Specifically, the proposed SCL consists of two main modules: (1) a self-expressive module that constructs virtual positive samples and (2) a contrastive learning module that further learns a discriminative subspace to capture task-specific cluster-wise relationships among texts. Experimental results show that the proposed SCL method not only has achieved superior results on multiple task clustering datasets but also has less complexity in positive sample construction.

翻译:暂无翻译