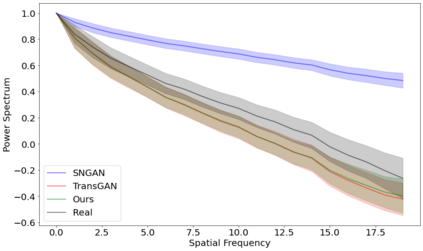

Transformer models have recently attracted much interest from computer vision researchers and have since been successfully employed for several problems traditionally addressed with convolutional neural networks. At the same time, image synthesis using generative adversarial networks (GANs) has drastically improved over the last few years. The recently proposed TransGAN is the first GAN using only transformer-based architectures and achieves competitive results when compared to convolutional GANs. However, since transformers are data-hungry architectures, TransGAN requires data augmentation, an auxiliary super-resolution task during training, and a masking prior to guide the self-attention mechanism. In this paper, we study the combination of a transformer-based generator and convolutional discriminator and successfully remove the need of the aforementioned required design choices. We evaluate our approach by conducting a benchmark of well-known CNN discriminators, ablate the size of the transformer-based generator, and show that combining both architectural elements into a hybrid model leads to better results. Furthermore, we investigate the frequency spectrum properties of generated images and observe that our model retains the benefits of an attention based generator.

翻译:最近提议的TranGAN是第一个仅使用以变压器为基础的结构的GAN,与以变压器为主的GAN相比,它取得了竞争性的结果。然而,由于变压器是数据饥饿结构,TranGAN需要数据增强,在培训期间需要辅助性超级分辨率任务,在引导自我注意机制之前需要遮罩。在本文中,我们研究以变压器为基础的发电机和变压器制导器的结合,成功地消除了上述设计选择的需要。我们评估了我们的方法,对著名的CNN有线电视歧视器进行了基准,扩大了变压器发电机的大小,并表明将两个建筑要素合并成混合模型可以取得更好的结果。此外,我们调查了生成图像的频谱特性,并观察了我们的模型保留了以注意为基础的发电机的好处。