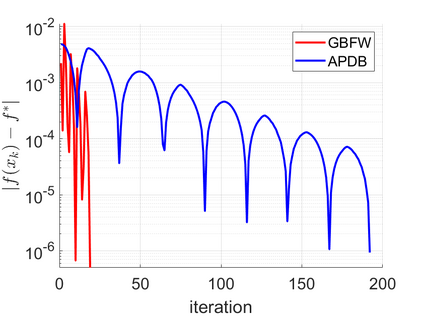

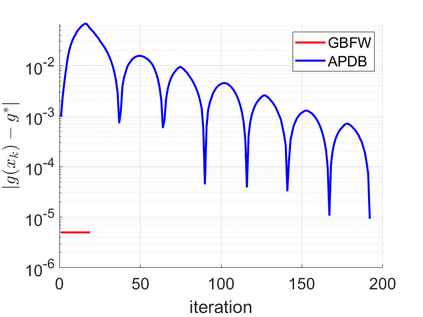

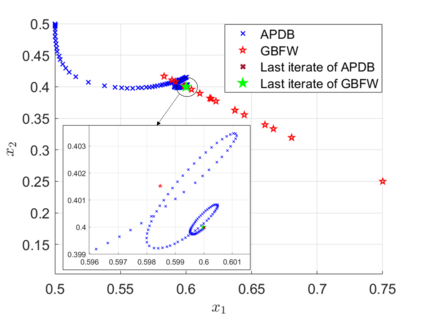

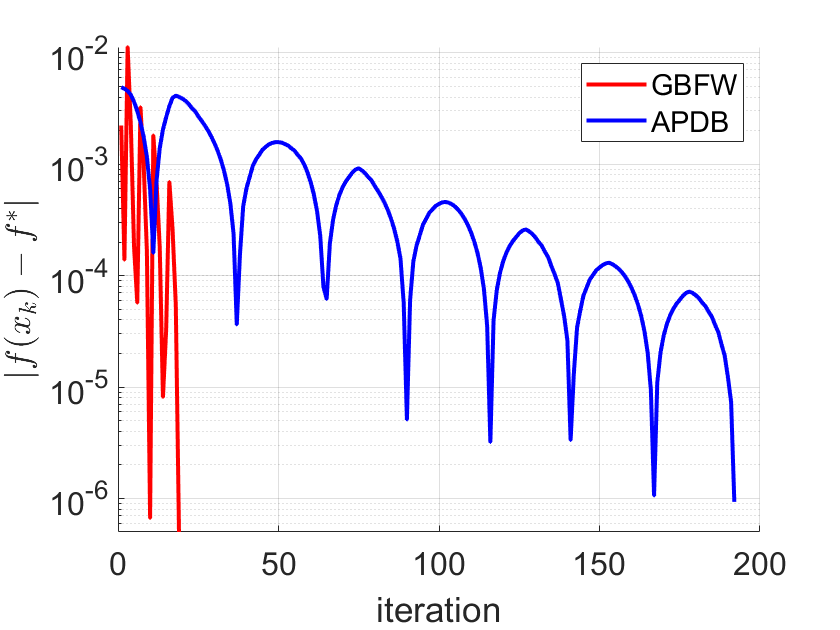

In this paper, we study a class of bilevel optimization problems, also known as simple bilevel optimization, in which we minimize a smooth objective function over the optimal solution set of another convex constrained optimization problem. Several iterative methods have been developed for this class of problems. Alas, their convergence guarantees are unsatisfactory: they are either only asymptotic for the upper-level objective, or their convergence rates are slow and sub-optimal. To address this issue, we introduce a generalization of the Frank-Wolfe (FW) method for the considered problem. The main idea of our method is to locally approximate the solution set of the lower-level problem via a cutting plane, and then run an FW-type update to decrease the upper-level objective. When the upper-level objective is convex, we show that our method requires ${\mathcal{O}}(\max\{1/\epsilon_f,1/\epsilon_g\})$ iterations to find a solution that is $\epsilon_f$-optimal for the upper-level objective and $\epsilon_g$-optimal for the lower-level objective. Moreover, when the upper-level objective is non-convex, our method requires ${\mathcal{O}}(\max\{1/\epsilon_f^2,1/(\epsilon_f\epsilon_g)\})$ iterations to find an $(\epsilon_f,\epsilon_g)$-optimal solution. We further prove stronger convergence guarantees under the Hölderian error bound assumption on the lower-level problem. To the best of our knowledge, our method achieves the best-known iteration complexity for the considered bilevel problem. We also present numerical experiments showing that our method outperforms state-of-the-art methods.
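As a rough illustration of the "cutting plane + FW update" idea, here is a minimal Python sketch. It is not the authors' implementation. The cut form $\langle \nabla g(x_k), s - x_k\rangle \le g(x_0) - g(x_k)$, the open-loop step size $\gamma_k = 2/(k+2)$, the choice of the probability simplex as the feasible set, the toy problem, and the function names `lmo_simplex_halfspace` and `cutting_plane_fw` are all assumptions made for this example. The simplex is used only because the linear subproblem over "simplex ∩ halfspace" can then be solved exactly by enumerating vertices, with no LP solver.

```python
import math


def lmo_simplex_halfspace(c, a, b, tol=1e-12):
    """Return argmin <c, s> over {s in the probability simplex : <a, s> <= b}.

    A linear function attains its minimum over a polytope at a vertex. The
    vertices of (simplex ∩ halfspace) are simplex vertices e_i that satisfy
    the cut, or points where the hyperplane <a, s> = b crosses an edge
    [e_i, e_j]; enumerating both is exact (O(n^2) candidates).
    """
    n = len(c)
    candidates = []
    for i in range(n):
        if a[i] <= b + tol:  # vertex e_i satisfies the cut
            candidates.append([1.0 if k == i else 0.0 for k in range(n)])
    for i in range(n):
        for j in range(i + 1, n):
            if abs(a[i] - a[j]) > tol:
                # s = t*e_i + (1 - t)*e_j lies on the hyperplane <a, s> = b
                t = (b - a[j]) / (a[i] - a[j])
                if 0.0 <= t <= 1.0:
                    s = [0.0] * n
                    s[i], s[j] = t, 1.0 - t
                    candidates.append(s)
    if not candidates:
        raise ValueError("cutting-plane set is empty")
    return min(candidates, key=lambda s: sum(ci * si for ci, si in zip(c, s)))


def cutting_plane_fw(grad_f, g, grad_g, x0, num_iters):
    """Hypothetical FW-type bilevel sketch over the probability simplex.

    The lower-level solution set is replaced at x_k by the (assumed) cut
    {s : <grad_g(x_k), s - x_k> <= g(x0) - g(x_k)}, and one FW step on the
    upper-level objective f is taken over simplex ∩ cut.
    """
    g0 = g(x0)
    x = list(x0)
    for k in range(num_iters):
        a = grad_g(x)
        # Rewrite the cut as <a, s> <= b.
        b = g0 - g(x) + sum(ai * xi for ai, xi in zip(a, x))
        s = lmo_simplex_halfspace(grad_f(x), a, b)
        gamma = 2.0 / (k + 2)  # standard open-loop FW step (assumed)
        x = [xi + gamma * (si - xi) for xi, si in zip(x, s)]
    return x


# Toy instance (hypothetical): lower level g(x) = 0.5 * x[2]**2 on the simplex,
# minimized on the edge {x[2] = 0}; upper level f(x) = 0.5 * ||x - p||^2.
P = [0.2, 0.8, 1.0]


def grad_f(x):
    return [xi - pi for xi, pi in zip(x, P)]


def g(x):
    return 0.5 * x[2] ** 2


def grad_g(x):
    return [0.0, 0.0, x[2]]


x = cutting_plane_fw(grad_f, g, grad_g, x0=[0.5, 0.5, 0.0], num_iters=2000)
print(x)  # should approach the bilevel solution (0.2, 0.8, 0.0)
```

By convexity of $g$, every lower-level minimizer $x^*$ satisfies $\langle \nabla g(x_k), x^* - x_k\rangle \le g^* - g(x_k) \le g(x_0) - g(x_k)$, so the cut never excludes the lower-level optimal set and the linear subproblem stays feasible. On this toy instance the cut forces $s_2 \le x_2/2$, so $x_2$ decays at an $O(1/k)$ rate while the first two coordinates settle near $(0.2, 0.8)$.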

翻译:在本文中, 我们研究一组双层优化问题, 也称为简单的双层优化, 即我们尽可能减少一个平滑的目标功能, 来应对另一个 convex 限制优化问题。 已经开发了几种迭代方法来应对这类问题。 可惜, 它们的趋同保障并不令人满意, 因为对于上层目标来说, 或趋同率是缓慢的, 或者是不尽如人意的。 为了解决这个问题, 本文中我们引入了一个通用的 Frank- Wol1/ 双层优化( FW) 方法来解决所考虑的问题。 我们方法的主要理念是通过切割平面来本地接近下层问题的解决方案集, 然后进行 FW 类型更新以降低上层目标 。 当上层目标为 convelment {( max) 时, 我们的方法需要$mathcal_ (max) /\\\\\\\\\\\\\\\\\\\\\\\\\\\\ disielfl_ liar_ ma ma ma distrate max max max max max max max max la la max max max max max max max max max max max max max max max max max max max max max) max max max max max max max max max max max max max max max max max max max max max max max) max mox mox mox mox max max max max max max max max max mox mox mox mod mo mod mod mo mo mo mo mo mo mox mox mox mox mox mox mox mox mo mox mox mo mo