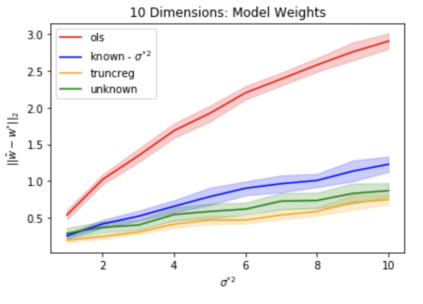

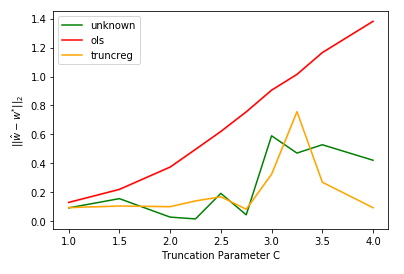

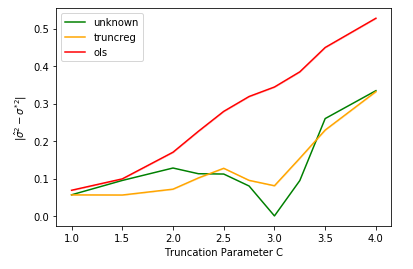

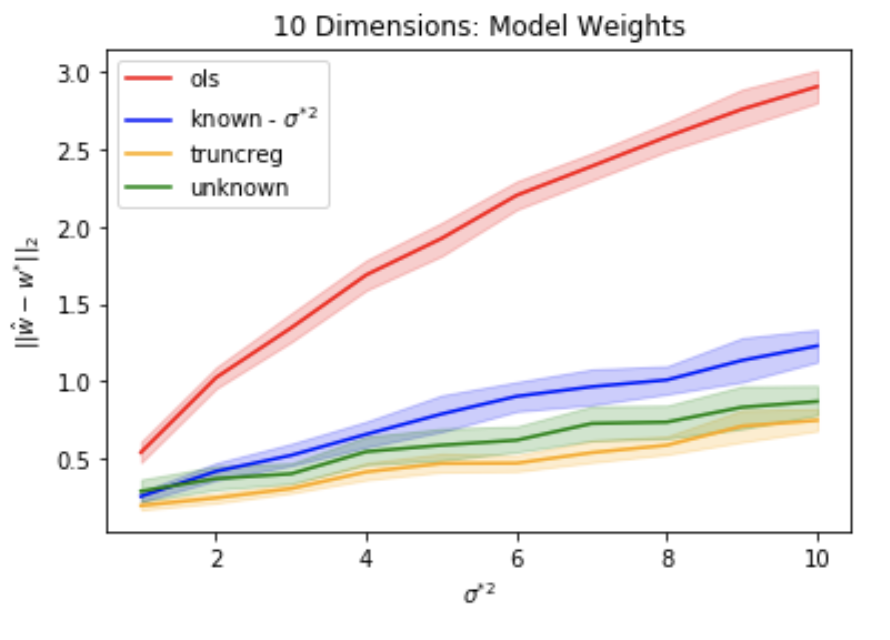

Truncated linear regression is a classical challenge in Statistics, wherein a label, $y = w^T x + \varepsilon$, and its corresponding feature vector, $x \in \mathbb{R}^k$, are only observed if the label falls in some subset $S \subseteq \mathbb{R}$; otherwise the existence of the pair $(x, y)$ is hidden from observation. Linear regression with truncated observations has remained a challenge, in its general form, since the early works of~\citet{tobin1958estimation,amemiya1973regression}. When the distribution of the error is normal with known variance, recent work of~\citet{daskalakis2019truncatedregression} provides computationally and statistically efficient estimators of the linear model, $w$. In this paper, we provide the first computationally and statistically efficient estimators for truncated linear regression when the noise variance is unknown, estimating both the linear model and the variance of the noise. Our estimator is based on an efficient implementation of Projected Stochastic Gradient Descent on the negative log-likelihood of the truncated sample. Importantly, we show that the error of our estimates is asymptotically normal, and we use this to provide explicit confidence regions for our estimates.
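The abstract does not spell out the estimator, so the sketch below is only a minimal illustration of projected SGD on the truncated negative log-likelihood. It is not the paper's implementation, and several of its choices are our own assumptions. First, it uses the reparametrization $v = w/\sigma^2$, $\lambda = 1/\sigma^2$. Under this reparametrization the per-sample loss $\lambda y^2/2 - y\, v^T x + \log \int_S e^{-\lambda z^2/2 + z\, v^T x}\,dz$ is convex. Second, it assumes only a membership oracle for $S$ and uses rejection sampling to draw $z \sim \mathcal{N}(v^T x/\lambda, 1/\lambda)$ conditioned on $z \in S$. With such a draw, $(z - y)\,x$ and $(y^2 - z^2)/2$ are unbiased gradient estimates for $v$ and $\lambda$. Third, the projection is deliberately simple: $\lambda$ is clipped to an interval and $v$ to a ball, which is not the projection set from the paper. The names `sample_truncated`, `truncated_psgd`, `in_S`, the truncation set $S = (0, \infty)$, and all hyperparameters are hypothetical.

```python
import math
import random


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def sample_truncated(w, sigma, in_S, n, rng):
    """Draw n observed pairs; (x, y) with y = w^T x + eps is kept only if y is in S."""
    data = []
    while len(data) < n:
        x = [rng.gauss(0.0, 1.0) for _ in w]
        y = dot(w, x) + rng.gauss(0.0, sigma)
        if in_S(y):  # pairs whose label misses S are never observed
            data.append((x, y))
    return data


def truncated_psgd(data, in_S, epochs=5, eta=0.01, lam_bounds=(0.05, 20.0),
                   v_radius=50.0, max_tries=10_000, seed=1):
    """Projected SGD on the truncated NLL in (v, lam) = (w / sigma^2, 1 / sigma^2).

    Returns (w_hat, sigma_hat) from iterates averaged over the second half of the run.
    """
    rng = random.Random(seed)
    k = len(data[0][0])
    v, lam = [0.0] * k, 1.0
    v_sum, lam_sum, count = [0.0] * k, 0.0, 0
    total_steps, step = epochs * len(data), 0
    for epoch in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)
        for i in order:
            x, y = data[i]
            step += 1
            # Rejection-sample z ~ N(v^T x / lam, 1 / lam) conditioned on z in S.
            mu, sd = dot(v, x) / lam, 1.0 / math.sqrt(lam)
            z = None
            for _ in range(max_tries):
                cand = rng.gauss(mu, sd)
                if in_S(cand):
                    z = cand
                    break
            if z is None:
                continue  # S has negligible mass under current parameters: skip step
            # Unbiased stochastic gradient step on the per-sample negative log-likelihood.
            v = [vj - eta * (z - y) * xj for vj, xj in zip(v, x)]
            lam -= eta * 0.5 * (y * y - z * z)
            # Illustrative projection: clip lam to an interval and v to a ball.
            lam = min(max(lam, lam_bounds[0]), lam_bounds[1])
            norm = math.sqrt(dot(v, v))
            if norm > v_radius:
                v = [vj * v_radius / norm for vj in v]
            if step > total_steps // 2:  # Polyak averaging over the second half
                v_sum = [s + vj for s, vj in zip(v_sum, v)]
                lam_sum += lam
                count += 1
    v_bar, lam_bar = [s / count for s in v_sum], lam_sum / count
    # Map back to the original parameters: w = v / lam, sigma = 1 / sqrt(lam).
    return [vj / lam_bar for vj in v_bar], 1.0 / math.sqrt(lam_bar)


def in_S(y):
    """Hypothetical truncation set S = (0, inf)."""
    return y > 0.0


data = sample_truncated([1.0, -0.5], 1.0, in_S, 2000, random.Random(0))
w_hat, sigma_hat = truncated_psgd(data, in_S)
print("w_hat =", w_hat, "sigma_hat =", sigma_hat)
```

Rejection sampling is cheap here because $S$ keeps a constant fraction of the mass under parameters near the truth. When $S$ has very small mass under the current iterate, the `max_tries` cap simply skips that step. The asymptotic-normality analysis and the confidence regions mentioned in the abstract are not reproduced by this sketch.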

翻译:线性线性回归是一个典型的统计挑战,其中标签($y = wQT x + varepsilon$)及其相应的特性矢量($x $x $ $) 只有在标签落到某个子集($S subseteq \ mathbb{R}$) 时才会观察到。 否则,双对(x, y) 的存在就隐藏在观察之外。 由细小观察得出的线性回归从总体上来说仍然是一项挑战。 自从早期的“citet{tobin1958estimation, amemiya1973 regrestition } 以来,当错误的分布与已知差异正常时,只有在标签落到某个子集时, $citet{dskalakis 2019tcrunate regresion} 最近的工作提供了计算和统计效率高的线性估计值, $w$。在本文中,我们提供了第一个计算和统计效率明确的线性缩缩缩缩缩缩缩缩图,当噪音的计算出正常模型和定序的正确性模型,这是我们用来显示的正常的模型和预测。