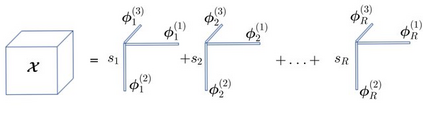

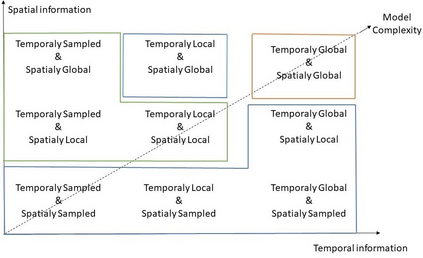

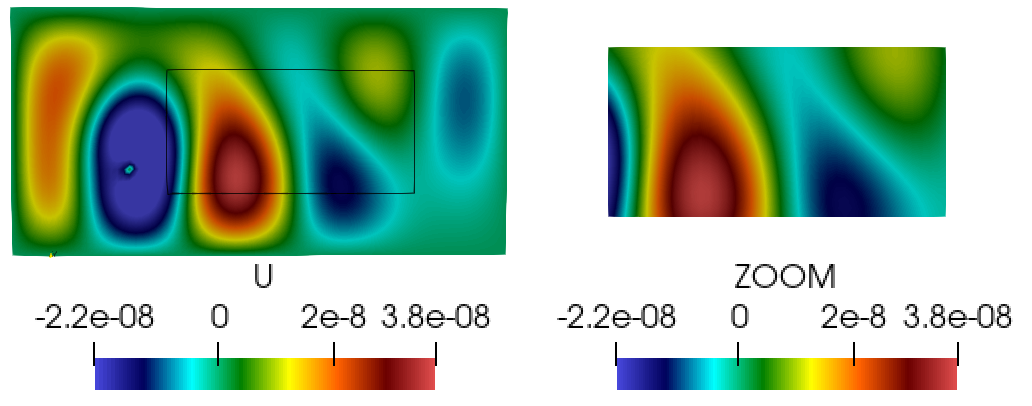

Convolutional neural networks (CNNs) are now widely used in a variety of fields, including image classification, facial and object recognition, and medical image analysis. In addition, some applications, such as physics-informed simulators, require accurate real-time forecasts with minimal lag. Current neural network designs include millions of parameters, which makes it difficult to deploy such complex models on devices with limited memory. Compression techniques may resolve these issues by reducing the number of parameters that contribute to model complexity, and hence the size of CNN models. We propose a compressed tensor format for the convolutional layer that is imposed a priori, before the neural network is trained: the 3-way or 2-way kernels in convolutional layers are replaced by one-way filters. Overfitting is also expected to be reduced. With fewer parameters to handle, the time needed for training and for prediction would be cut significantly compared with the original CNN model. In this paper we present a method for a priori compression of convolutional neural networks for finite element (FE) predictions of physical data. We then validate our a priori compressed models on physical data from an FE model solving a 2D wave equation. We show that the proposed convolutional compression technique achieves performance equivalent to that of classical convolutional layers with fewer trainable parameters and a lower memory footprint.
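To make the idea of replacing a 2-way kernel by one-way filters concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes the simplest possible case, a single rank-1 (separable) kernel written as the outer product of two 1D filters; the abstract does not specify the exact tensor format, so the rank-1 choice and the names `conv2d_valid`, `separable_conv2d_valid`, `u`, and `v` are illustrative assumptions. The sketch checks that applying the two 1D filters in sequence gives the same output as the full 2D kernel while storing 2k instead of k² weights.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Plain 2D cross-correlation with 'valid' padding (no kernel flip)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    # Accumulate one shifted, weighted copy of the image per kernel entry.
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * image[i:i + out_h, j:j + out_w]
    return out


def separable_conv2d_valid(image, col_filter, row_filter):
    """Apply a vertical 1D filter, then a horizontal 1D filter (hypothetical helper)."""
    tmp = conv2d_valid(image, col_filter[:, None])  # kernel of shape (k, 1)
    return conv2d_valid(tmp, row_filter[None, :])   # kernel of shape (1, k)


rng = np.random.default_rng(0)
k = 3
image = rng.standard_normal((16, 16))

# Assumption: the 2-way kernel is exactly rank-1, i.e. outer(u, v).
u = rng.standard_normal(k)  # one-way filter along the vertical axis
v = rng.standard_normal(k)  # one-way filter along the horizontal axis
full_kernel = np.outer(u, v)

dense = conv2d_valid(image, full_kernel)
separable = separable_conv2d_valid(image, u, v)

params_full = full_kernel.size         # k * k weights
params_separable = u.size + v.size     # 2 * k weights
max_abs_diff = float(np.max(np.abs(dense - separable)))

print("2-way kernel parameters:", params_full)
print("one-way filter parameters:", params_separable)
print("outputs match:", np.allclose(dense, separable))
```

A general 2D kernel is not rank-1, so in practice a compressed layer of this kind trades some expressiveness for the parameter savings; imposing the format before training, as the abstract describes, means the network learns the 1D filters directly rather than factorizing a trained kernel afterwards.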
