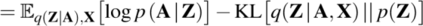

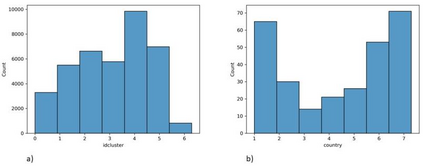

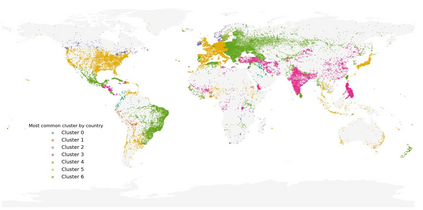

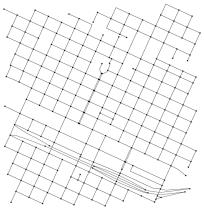

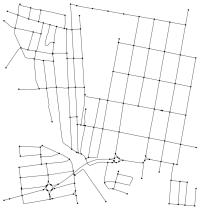

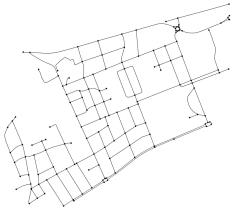

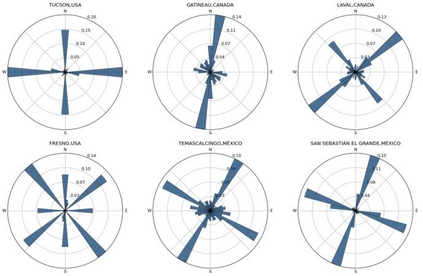

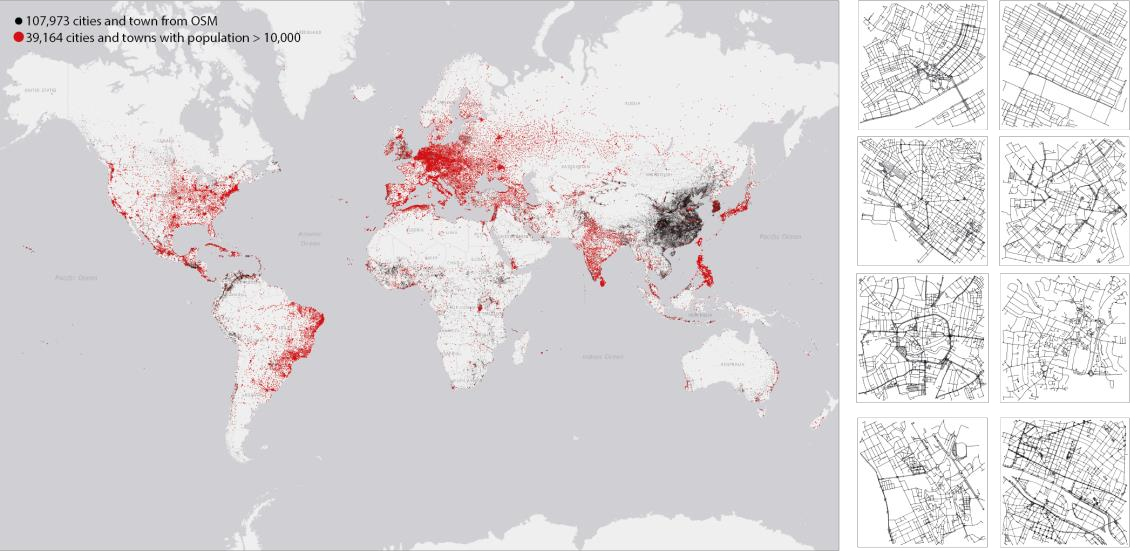

Street networks provide an invaluable source of information about the different temporal and spatial patterns emerging in our cities. These networks are often represented as graphs in which intersections are modelled as nodes and streets as links between them. Previous work has shown that raster representations of the original data can be created through a learning algorithm on low-dimensional representations of the street networks. In contrast, models that capture high-level urban network metrics can be trained through convolutional neural networks. However, the detailed topological data is lost through the rasterisation of the street network. The models cannot recover this information from the image alone and therefore fail to capture complex street network features. This paper proposes a model capable of inferring good representations directly from the street network. Specifically, we use a variational autoencoder with graph convolutional layers and a decoder that outputs a probabilistic fully-connected graph to learn latent representations that encode both local network structure and the spatial distribution of nodes. We train the model on thousands of street network segments and use the learnt representations to generate synthetic street configurations. Finally, we propose a possible application that classifies the urban morphology of different network segments by investigating their common characteristics in the learnt space.
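To make the architecture described above more concrete, the following is a minimal sketch of a single forward pass through a graph variational autoencoder, written in plain Python. It is not the authors' implementation. It assumes node coordinates as input features, two graph convolutional layers with symmetric adjacency normalisation, and an inner-product decoder standing in for the paper's probabilistic fully-connected graph decoder. The function names, the toy T-junction segment, and the random, untrained weights are all hypothetical and chosen only for illustration.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def normalised_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2, the propagation matrix of a graph convolution."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def graph_conv(a_norm, features, weights, relu=True):
    """One graph convolutional layer: activation(A_norm @ X @ W)."""
    out = matmul(matmul(a_norm, features), weights)
    if relu:
        out = [[max(0.0, v) for v in row] for row in out]
    return out


def random_weights(rows, cols, rng):
    """Untrained weights drawn from a Gaussian (illustrative only)."""
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def encode(adj, features, params):
    """Map each node to the mean and log-variance of its latent distribution."""
    a_norm = normalised_adjacency(adj)
    hidden = graph_conv(a_norm, features, params["w_hidden"])
    mu = graph_conv(a_norm, hidden, params["w_mu"], relu=False)
    log_var = graph_conv(a_norm, hidden, params["w_log_var"], relu=False)
    return mu, log_var


def sample_latent(mu, log_var, rng):
    """Reparameterisation trick: z = mu + sigma * eps with eps ~ N(0, 1)."""
    return [[m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(m_row, lv_row)]
            for m_row, lv_row in zip(mu, log_var)]


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def decode(z):
    """Inner-product decoder: an edge probability for every pair of nodes."""
    n = len(z)
    return [[sigmoid(sum(a * b for a, b in zip(z[i], z[j]))) for j in range(n)]
            for i in range(n)]


rng = random.Random(0)

# Hypothetical toy segment: a T-junction with node 0 at the centre.
adj = [[0, 1, 1, 1],
       [1, 0, 0, 0],
       [1, 0, 0, 0],
       [1, 0, 0, 0]]
coords = [[0.0, 0.0], [1.0, 0.0], [-1.0, 0.0], [0.0, 1.0]]  # node positions as features

params = {
    "w_hidden": random_weights(2, 4, rng),   # 2 input features -> 4 hidden units
    "w_mu": random_weights(4, 2, rng),       # 4 hidden units -> 2 latent dimensions
    "w_log_var": random_weights(4, 2, rng),
}

mu, log_var = encode(adj, coords, params)
z = sample_latent(mu, log_var, rng)
edge_probs = decode(z)  # dense 4 x 4 matrix of edge probabilities
```

In a trained model the weights would be fitted by maximising a reconstruction term on the adjacency matrix together with a KL penalty on the latent distribution. Sampling `z` and thresholding or sampling from `edge_probs` is then one plausible way to produce synthetic street configurations of the kind the abstract mentions.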
