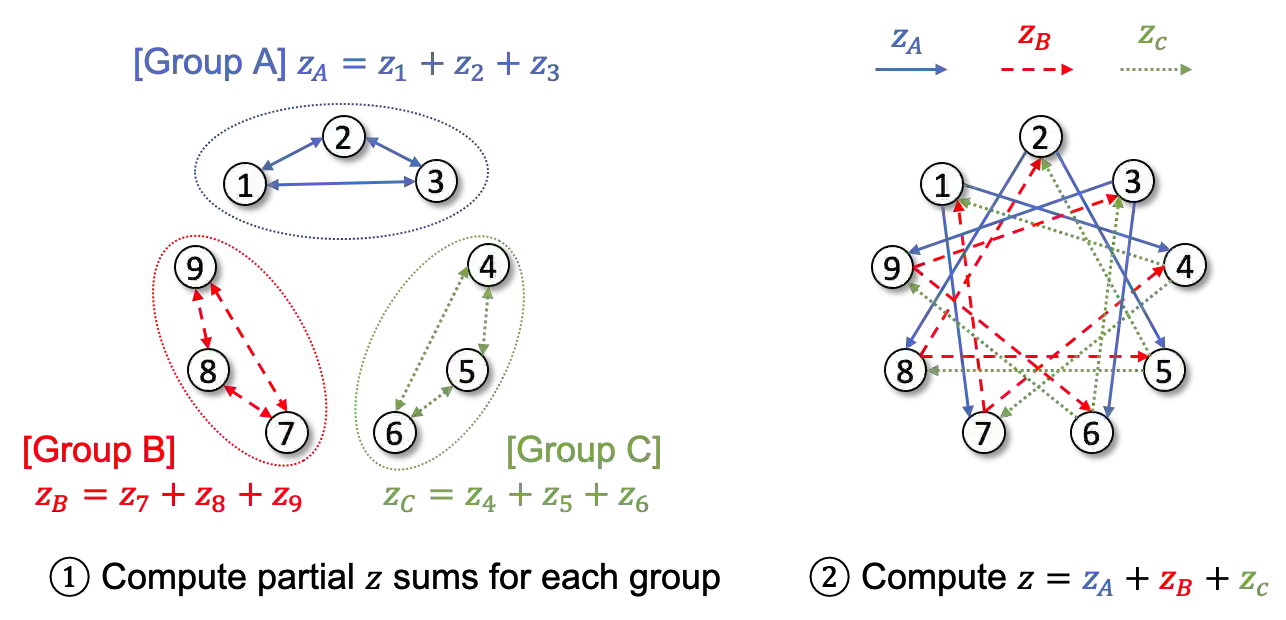

In this paper, we develop SecureD-FL, a privacy-preserving decentralized federated learning algorithm, i.e., one that operates without the traditional centralized aggregation server. For decentralized aggregation, we employ the Alternating Direction Method of Multipliers (ADMM) and examine its privacy weaknesses. To address this privacy risk, we introduce a communication pattern inspired by combinatorial block design theory and establish its theoretical privacy guarantee. We also propose an efficient algorithm to construct such a communication pattern. We evaluate our method on image classification and next-word prediction applications using federated benchmark datasets with nine and fifteen distributed sites hosting the training data. While preserving privacy, SecureD-FL performs comparably to the standard centralized federated learning method, with a drop in test accuracy of at most 0.73%.
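The abstract does not spell out which block design SecureD-FL uses or how blocks map to communication rounds. Purely as a hypothetical illustration of the underlying combinatorial object, the sketch below builds the affine plane of order 3, a 2-(9, 3, 1) design: nine sites are grouped into twelve blocks of three so that every pair of sites appears together in exactly one block. The function names `affine_plane_blocks` and `pair_coverage` are illustrative assumptions, not part of the paper.

```python
from collections import Counter
from itertools import combinations


def affine_plane_blocks(q=3):
    """Hypothetical helper: lines of the affine plane AG(2, q) for prime q.

    Point (x, y) in Z_q x Z_q is labeled x*q + y, giving q*q sites.
    Every pair of distinct points lies on exactly one line (block).
    """
    blocks = []
    # Non-vertical lines y = m*x + c (mod q), one per slope m and intercept c.
    for m in range(q):
        for c in range(q):
            blocks.append(tuple(sorted(x * q + (m * x + c) % q for x in range(q))))
    # Vertical lines x = c.
    for c in range(q):
        blocks.append(tuple(c * q + y for y in range(q)))
    return blocks


def pair_coverage(blocks):
    """Count how many blocks contain each unordered pair of sites."""
    counts = Counter()
    for block in blocks:
        for pair in combinations(block, 2):
            counts[pair] += 1
    return counts


if __name__ == "__main__":
    blocks = affine_plane_blocks(3)
    cover = pair_coverage(blocks)
    print(len(blocks))  # 12
    print(all(cover[p] == 1 for p in combinations(range(9), 2)))  # True
```

This construction only yields q*q sites for prime q (e.g., nine), so it does not cover the fifteen-site setting; another design, such as a Steiner triple system on fifteen points, would be needed there.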
