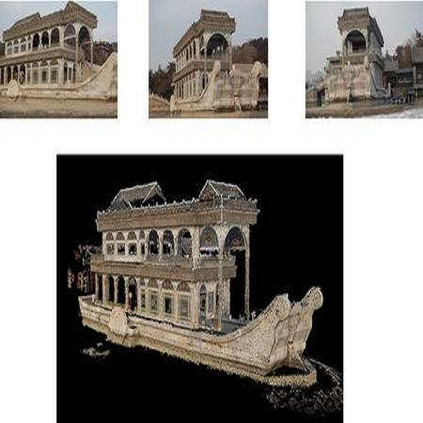

We propose a new approach to determine correspondences between image pairs in the wild under large changes in illumination, viewpoint, context, and material. While other approaches find correspondences between pairs of images by treating the images independently, we instead condition on both images to implicitly account for the differences between them. To achieve this, we introduce (i) a spatial attention mechanism (a co-attention module, CoAM) for conditioning the learned features on both images, and (ii) a distinctiveness score used to choose the best matches at test time. CoAM can be added to standard architectures and trained using self-supervision or supervised data, and achieves a significant performance improvement under hard conditions, e.g. large viewpoint changes. We demonstrate that models using CoAM achieve state-of-the-art or competitive results on a wide range of tasks: local matching, camera localization, 3D reconstruction, and image stylization.
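To make the idea of conditioning one image's features on the other more concrete, the following is a minimal sketch of a generic dot-product co-attention step in NumPy. It is not the CoAM implementation: the function name `co_attend`, the scaled dot-product similarity, the softmax normalization, and the channel-wise concatenation are all assumptions chosen for illustration, and the distinctiveness score is not modeled here.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def co_attend(feat_a, feat_b):
    """Condition features of image A on features of image B (illustrative only).

    feat_a: array of shape (C, Ha, Wa)
    feat_b: array of shape (C, Hb, Wb)
    Returns the conditioned features of shape (2C, Ha, Wa) and the
    attention map of shape (Ha*Wa, Hb*Wb).
    """
    c, h, w = feat_a.shape
    a = feat_a.reshape(c, -1).T  # (Ha*Wa, C): one row per location in A
    b = feat_b.reshape(c, -1).T  # (Hb*Wb, C): one row per location in B

    # Similarity of every location in A to every location in B,
    # normalized so each row is a distribution over B's locations.
    attn = softmax(a @ b.T / np.sqrt(c), axis=1)

    # For each location in A, an attention-weighted summary of B's features.
    attended = attn @ b  # (Ha*Wa, C)

    # Assumed fusion: concatenate A's own features with the summary of B.
    cond = np.concatenate([a, attended], axis=1)  # (Ha*Wa, 2C)
    return cond.T.reshape(2 * c, h, w), attn


# Random stand-ins for feature maps from a backbone network.
rng = np.random.default_rng(0)
feat_a = rng.standard_normal((8, 4, 5))
feat_b = rng.standard_normal((8, 3, 6))
cond_a, attn = co_attend(feat_a, feat_b)
```

Calling `co_attend(feat_b, feat_a)` gives the symmetric result for the second image, so each image's descriptors depend on the content of the other. In this sketch the first `C` channels of the output are the original features, left unchanged.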
