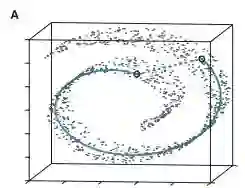

Manifold learning algorithms are valuable tools for the analysis of high-dimensional data, many of which include a step where nearest neighbors of all observations are found. This can present a computational bottleneck when the number of observations is large or when the observations lie in more general metric spaces, such as statistical manifolds, which require all pairwise distances between observations to be computed. We resolve this problem by using a broad range of approximate nearest neighbor algorithms within manifold learning algorithms and evaluating their impact on embedding accuracy. We use approximate nearest neighbors for statistical manifolds by exploiting the connection between Hellinger/Total variation distance for discrete distributions and the L2/L1 norm. Via a thorough empirical investigation based on the benchmark MNIST dataset, it is shown that approximate nearest neighbors lead to substantial improvements in computational time with little to no loss in the accuracy of the embedding produced by a manifold learning algorithm. This result is robust to the use of different manifold learning algorithms, to the use of different approximate nearest neighbor algorithms, and to the use of different measures of embedding accuracy. The proposed method is applied to learning statistical manifolds data on distributions of electricity usage. This application demonstrates how the proposed methods can be used to visualize and identify anomalies and uncover underlying structure within high-dimensional data in a way that is scalable to large datasets.

翻译:曼氏学习算法是分析高维数据的宝贵工具, 其中许多方法包括发现所有观测的近邻相距最近的一个步骤。 这可以显示当观测数量巨大或观测位于更一般的衡量空间时的计算瓶颈, 例如统计元体, 需要计算观测之间所有对称距离。 我们通过在多种学习算法中使用一系列近近近邻的近邻算法, 并评价其对嵌入准确性的影响来解决这个问题。 我们利用离散分布和L2/L1规范的海灵格/多端差异距离之间的连接, 使用近邻相近的统计元件。 通过基于基准的MNIST数据集进行彻底的经验调查, 显示近邻在计算时会大大改进计算时间, 且不至不损及多元学习算法所生成的嵌入准确性。 这与不同的多元学习算法、 使用不同的近邻算法, 以及使用不同测量嵌入准确度的尺度, 我们建议的方法用于学习统计元数据分布的大型数据, 从而显示在高维度数据应用中如何利用。 这个方法可以测量高维度数据。