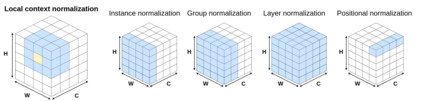

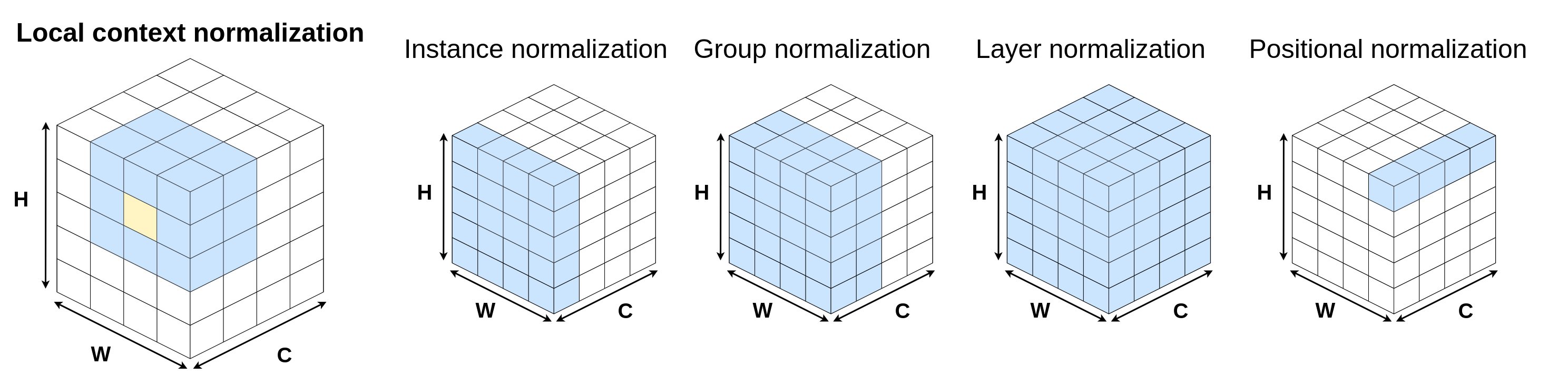

Normalization layers have been shown to improve convergence in deep neural networks. In many vision applications the local spatial context of the features is important, but most common normalization schemes, including Group Normalization (GN), Instance Normalization (IN), and Layer Normalization (LN), normalize over the entire spatial extent of a feature. This can wash out important signals and degrade performance. For example, in applications that use satellite imagery, input images can be arbitrarily large; consequently, it is nonsensical to normalize over the entire area. Positional Normalization (PN), on the other hand, only normalizes over a single spatial position at a time. A natural compromise is to normalize features by local context while also taking group-level information into account. In this paper, we propose Local Context Normalization (LCN): a normalization layer in which every feature is normalized based on a window around it and the filters in its group. We propose an algorithmic solution that makes LCN efficient for arbitrary window sizes, even if every point in the image has a unique window. LCN outperforms its Batch Normalization (BN), GN, IN, and LN counterparts for object detection, semantic segmentation, and instance segmentation on several benchmark datasets, while keeping performance independent of the batch size and facilitating transfer learning.
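To make the idea concrete, the following is a minimal NumPy sketch of a local-context normalization for a single feature map of shape `(C, H, W)`. It is an illustration under stated assumptions, not the authors' implementation: the function name `local_context_norm`, its parameters, the use of an integral image (summed-area table) for the window sums, the requirement of odd window sizes, and the border handling (counting only positions inside the image) are all choices made here for the example. Learned scale and shift parameters are omitted.

```python
import numpy as np

def local_context_norm(x, groups=2, window=(3, 3), eps=1e-5):
    """Hypothetical sketch: normalize each feature in x (C, H, W) by the mean
    and variance of a spatial window around it, pooled over its channel group."""
    C, H, W = x.shape
    kh, kw = window
    assert C % groups == 0, "channels must divide evenly into groups"
    assert kh % 2 == 1 and kw % 2 == 1, "this sketch assumes odd window sizes"
    ph, pw = kh // 2, kw // 2

    def box_sum(a):
        # Window sums over the last two axes via an integral image,
        # so the cost per position does not depend on the window size.
        lead = [(0, 0)] * (a.ndim - 2)
        p = np.pad(a, lead + [(ph, ph), (pw, pw)])          # zero padding
        ii = p.cumsum(axis=-2).cumsum(axis=-1)
        ii = np.pad(ii, lead + [(1, 0), (1, 0)])            # leading zero row/col
        return (ii[..., kh:, kw:] - ii[..., :-kh, kw:]
                - ii[..., kh:, :-kw] + ii[..., :-kh, :-kw])

    xg = x.reshape(groups, C // groups, H, W)
    s1 = xg.sum(axis=1)                 # per-group sum over channels, (G, H, W)
    s2 = (xg ** 2).sum(axis=1)          # per-group sum of squares

    # Number of real (non-padded) values contributing to each window.
    count = box_sum(np.ones((H, W))) * (C // groups)

    mean = box_sum(s1) / count
    var = np.maximum(box_sum(s2) / count - mean ** 2, 0.0)

    out = (xg - mean[:, None]) / np.sqrt(var[:, None] + eps)
    return out.reshape(C, H, W)
```

With a window large enough to cover the whole image from every position, this sketch reduces to Group Normalization on a single sample, which is a convenient sanity check; with small windows, each position is normalized only by its neighborhood within the group.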
