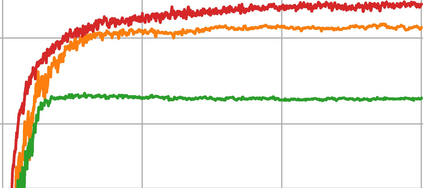

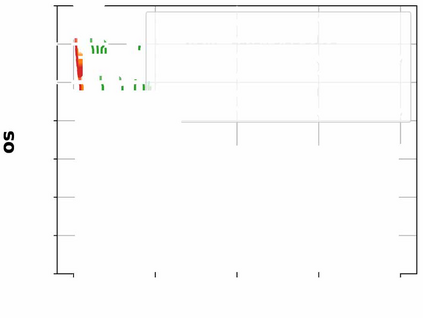

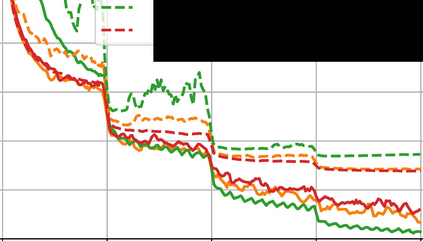

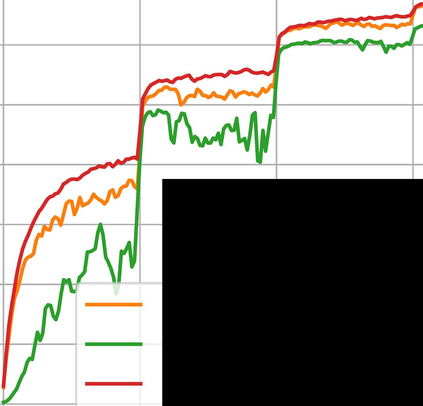

Batch Normalization (BN) improves both convergence and generalization when training neural networks. This work seeks to understand these phenomena theoretically. We analyze BN using a basic building block of neural networks, consisting of a kernel layer, a BN layer, and a nonlinear activation function. This basic network helps us understand the impact of BN in three respects. First, by viewing BN as an implicit regularizer, we show that BN can be decomposed into population normalization (PN) and gamma decay, which acts as an explicit regularizer. Second, the learning dynamics of BN and of this regularization show that training converges with large maximum and effective learning rates. Third, the generalization of BN is explored using statistical mechanics. Experiments demonstrate that BN in convolutional neural networks shares the same regularization traits as those found in the above analyses.
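To make the basic block concrete, the following is a minimal sketch of a single unit built from a kernel layer, a normalization step, and a nonlinear activation. It is not the authors' code. ReLU as the activation, the Gaussian toy data, and all names (`bn_block`, `pn_block`, `mean_var`) are assumptions chosen for illustration. The sketch contrasts BN, which normalizes with mini-batch statistics, against PN, which normalizes with statistics of the whole dataset; the gamma decay term is not modeled here.

```python
import math
import random


def kernel(x, w):
    """Kernel layer for one unit: the inner product w^T x."""
    return sum(xi * wi for xi, wi in zip(x, w))


def mean_var(values):
    """Mean and (biased) variance of a list of scalars."""
    m = sum(values) / len(values)
    v = sum((a - m) ** 2 for a in values) / len(values)
    return m, v


def normalize_and_activate(h, mean, var, gamma, beta, eps=1e-5):
    """Normalize h, apply scale gamma and shift beta, then ReLU (assumed)."""
    h_hat = (h - mean) / math.sqrt(var + eps)
    return max(gamma * h_hat + beta, 0.0)


def bn_block(batch, w, gamma=1.0, beta=0.0):
    """Batch normalization: statistics come from the current mini-batch."""
    hs = [kernel(x, w) for x in batch]
    m, v = mean_var(hs)
    return [normalize_and_activate(h, m, v, gamma, beta) for h in hs]


def pn_block(batch, w, pop_mean, pop_var, gamma=1.0, beta=0.0):
    """Population normalization: statistics come from the whole dataset."""
    hs = [kernel(x, w) for x in batch]
    return [normalize_and_activate(h, pop_mean, pop_var, gamma, beta) for h in hs]


# Hypothetical toy data: 5000 samples of dimension 8.
random.seed(0)
dim = 8
data = [[random.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(5000)]
w = [random.gauss(0.0, 1.0) for _ in range(dim)]

# Population statistics of the pre-activation over the full dataset.
pop_mean, pop_var = mean_var([kernel(x, w) for x in data])

# On a small mini-batch, BN and PN outputs differ because the batch
# statistics are noisy estimates of the population statistics.
batch = data[:32]
y_bn = bn_block(batch, w)
y_pn = pn_block(batch, w, pop_mean, pop_var)
gap = sum(abs(a - b) for a, b in zip(y_bn, y_pn)) / len(batch)
print("mean |BN - PN| on a batch of 32:", gap)
```

When the mini-batch is the entire dataset, the two blocks coincide by construction, so any difference between them on smaller batches comes from the randomness of the batch statistics.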
