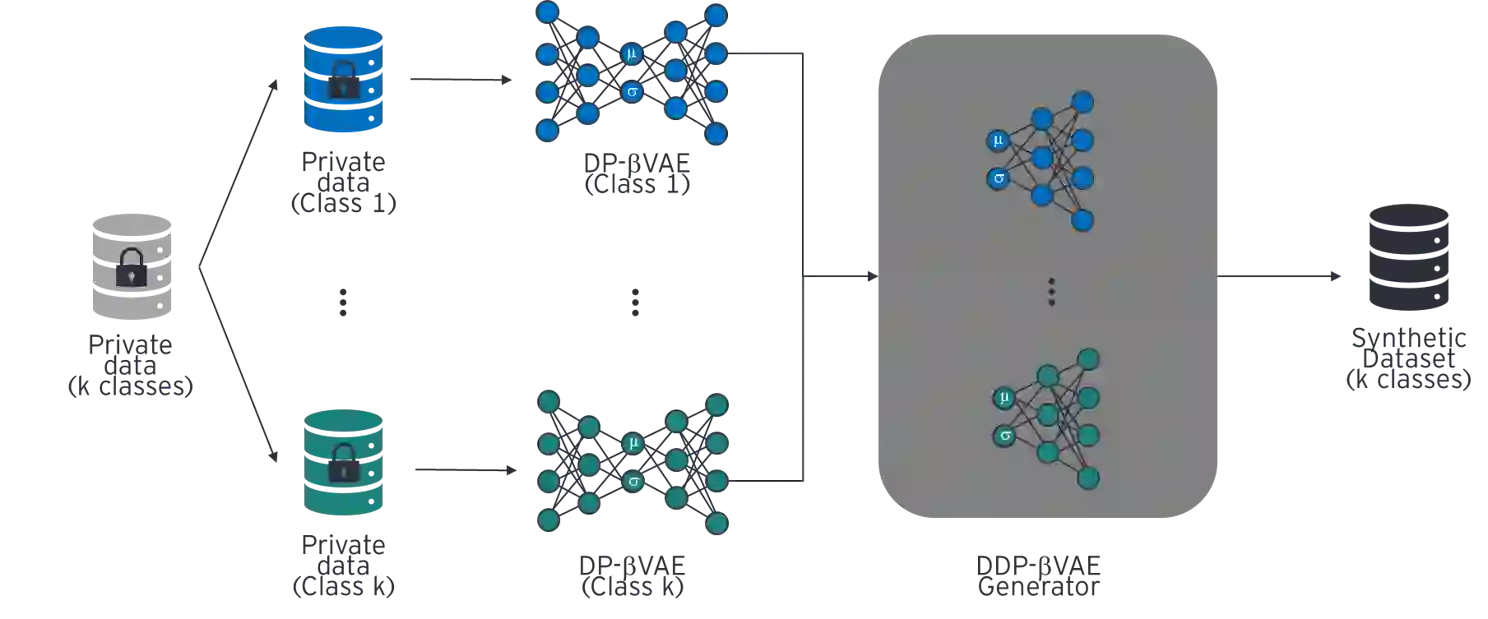

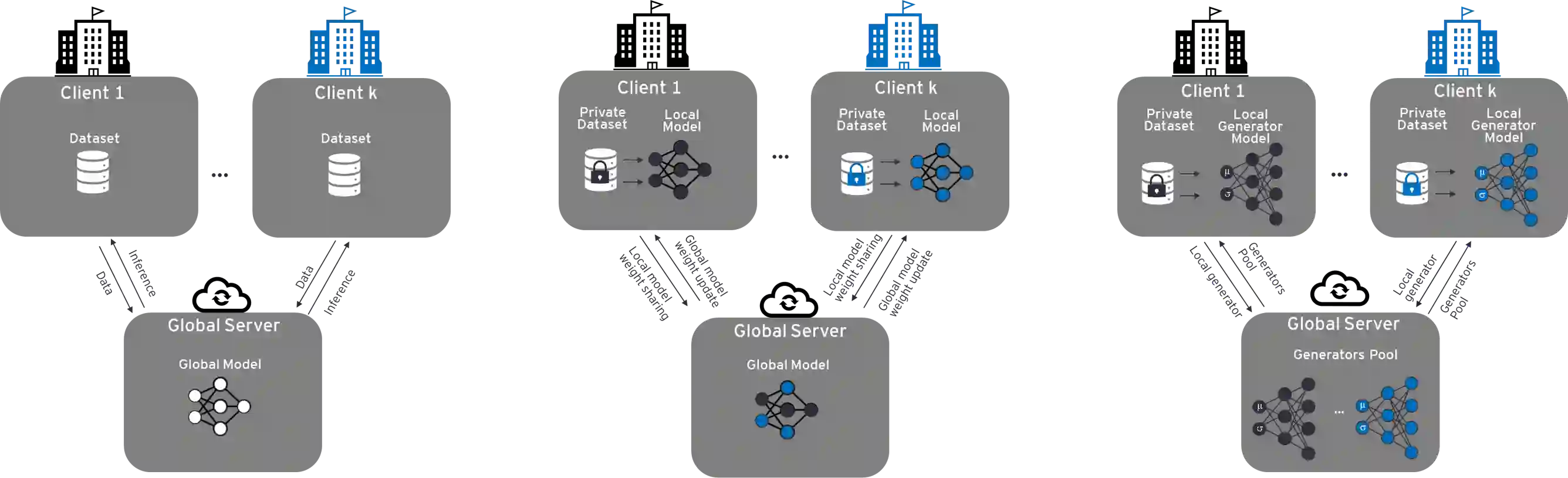

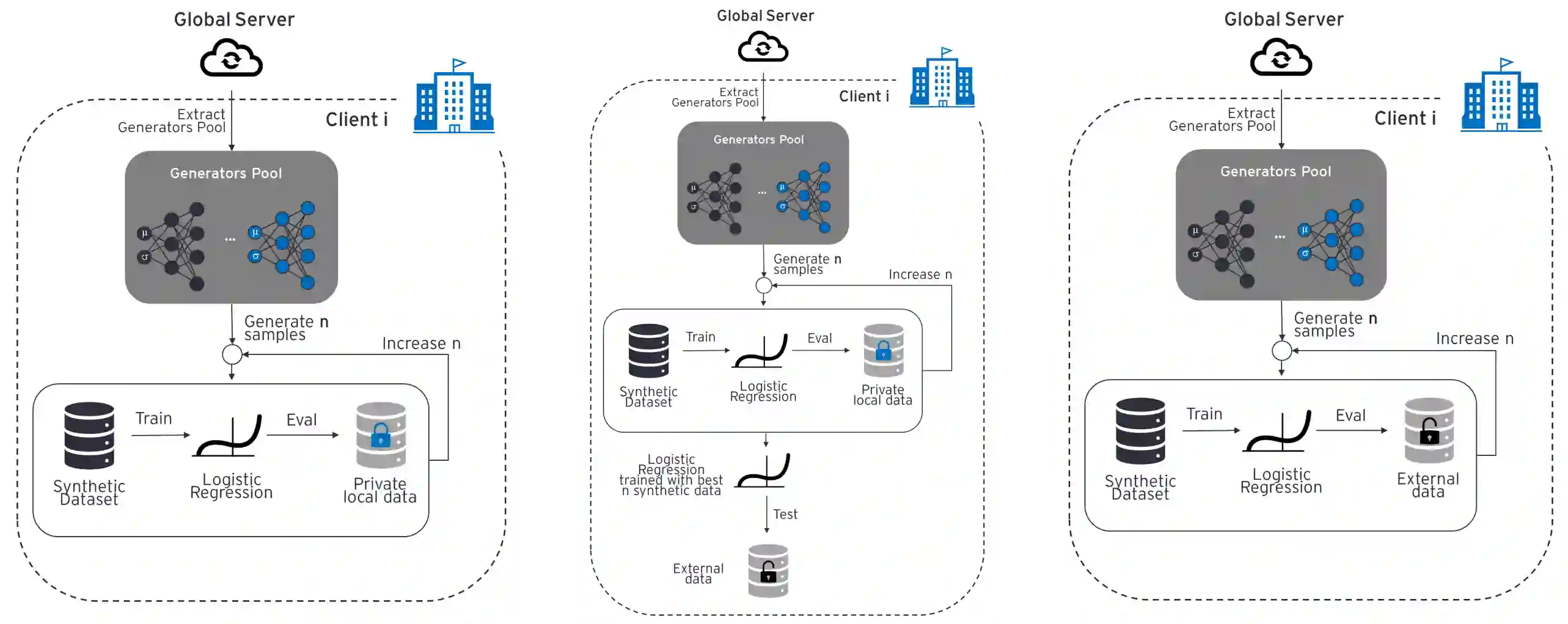

In machine learning, differential privacy and federated learning are becoming increasingly important in an ever more interconnected world. The former refers to sharing private data under strict security rules that protect individual privacy; the latter refers to distributed learning techniques in which a central server exchanges information with multiple clients for machine learning purposes. In recent years, many studies have shown that the privacy protections of these systems can be bypassed and that vulnerabilities in machine learning models can be exploited to make them leak the data on which they were trained. In this work, we present the 3DGL framework, an alternative to current federated learning paradigms. Its goal is to share generative models with high levels of $\varepsilon$-differential privacy. In addition, we propose DDP-$\beta$VAE, a deep generative model capable of generating synthetic data with high levels of utility and of safety for the individual. We evaluate the 3DGL framework based on DDP-$\beta$VAE, showing that the overall system is resilient to the principal attacks on federated learning and improves the performance of distributed learning algorithms.
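
To make the role of $\varepsilon$ concrete: under the standard definition, a randomized mechanism $M$ is $\varepsilon$-differentially private if $\Pr[M(D) \in S] \le e^{\varepsilon} \Pr[M(D') \in S]$ for all datasets $D, D'$ differing in one record and all output sets $S$. The sketch below illustrates this with the textbook Laplace mechanism on a counting query. It is not the DDP-$\beta$VAE method or any part of 3DGL; the function name `laplace_mechanism`, the toy `ages` data, and the chosen `epsilon` are assumptions made only for illustration.

```python
import random


def laplace_mechanism(true_value, sensitivity, epsilon, rng):
    """Return true_value plus Laplace(0, sensitivity / epsilon) noise.

    Hypothetical helper for illustration; smaller epsilon means more noise
    and therefore stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = sensitivity / epsilon
    # The difference of two i.i.d. exponentials with mean `scale`
    # follows a Laplace distribution with location 0 and that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_value + noise


rng = random.Random(0)          # fixed seed so the sketch is repeatable
ages = [23, 35, 41, 29, 52]     # toy data, assumed for this example

# Counting query: adding or removing one person changes the count by at
# most 1, so the sensitivity is 1.
true_count = sum(1 for a in ages if a > 30)
noisy_count = laplace_mechanism(true_count, sensitivity=1.0, epsilon=0.5, rng=rng)
print(true_count, noisy_count)
```

Only `noisy_count` would be released. Lowering `epsilon` widens the noise distribution, which is the usual privacy-versus-utility trade-off that the abstract refers to when it speaks of synthetic data with both utility and safety.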
