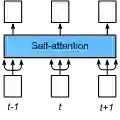

Despite the success in large-scale text-to-image generation and text-conditioned image editing, existing methods still struggle to produce consistent generation and editing results. For example, generation approaches usually fail to synthesize multiple images of the same objects/characters but with different views or poses. Meanwhile, existing editing methods either fail to achieve effective complex non-rigid editing while maintaining the overall textures and identity, or require time-consuming fine-tuning to capture the image-specific appearance. In this paper, we develop MasaCtrl, a tuning-free method to achieve consistent image generation and complex non-rigid image editing simultaneously. Specifically, MasaCtrl converts existing self-attention in diffusion models into mutual self-attention, so that it can query correlated local contents and textures from source images for consistency. To further alleviate the query confusion between foreground and background, we propose a mask-guided mutual self-attention strategy, where the mask can be easily extracted from the cross-attention maps. Extensive experiments show that the proposed MasaCtrl can produce impressive results in both consistent image generation and complex non-rigid real image editing.

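To make the core idea in the abstract concrete, here is a minimal NumPy sketch of mutual self-attention: queries come from the image being generated or edited, while keys and values are taken from the source image. The abstract does not spell out how the mask is applied, so the mask-guided variant below is only one plausible reading (foreground queries attend to source foreground, background queries to source background). All function names, argument names, and the flattened `(tokens, channels)` layout are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np


def softmax(x):
    # Numerically stable softmax over the last axis.
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def attention(q, k, v, key_mask=None):
    """Scaled dot-product attention.

    q: (n_q, d), k: (n_k, d), v: (n_k, d_v)
    key_mask: optional (n_k,) boolean array; False entries are excluded.
    """
    scores = q @ k.T / np.sqrt(q.shape[-1])
    if key_mask is not None:
        # Push masked-out keys to a very negative score so their weight is ~0.
        scores = np.where(key_mask[None, :], scores, -1e9)
    return softmax(scores) @ v


def mutual_self_attention(q_tgt, k_src, v_src):
    # Target queries look up content in the SOURCE image's keys and values,
    # instead of the target's own keys and values as in plain self-attention.
    return attention(q_tgt, k_src, v_src)


def masked_mutual_self_attention(q_tgt, k_src, v_src, fg_src, fg_tgt):
    # Assumed scheme: query source foreground and background separately,
    # then pick per target token according to the target foreground mask.
    out_fg = attention(q_tgt, k_src, v_src, key_mask=fg_src)
    out_bg = attention(q_tgt, k_src, v_src, key_mask=~fg_src)
    return np.where(fg_tgt[:, None], out_fg, out_bg)
```

In a real diffusion model these tensors would come from the self-attention layers of the denoising network, and the foreground masks would be derived from cross-attention maps as the abstract describes; the sketch only shows the attention arithmetic on already-flattened features.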