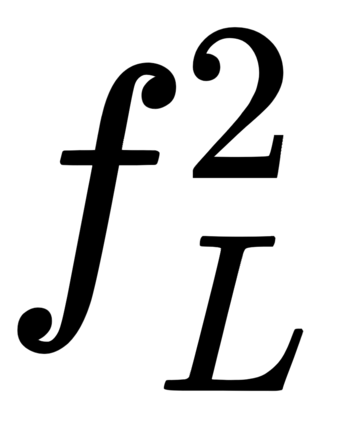

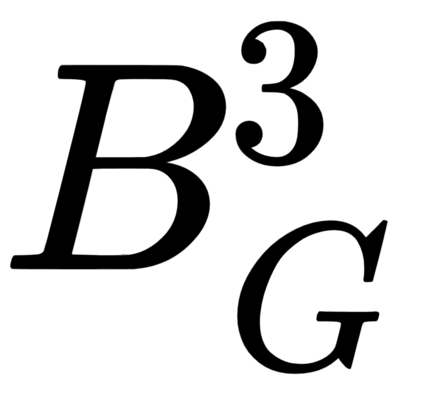

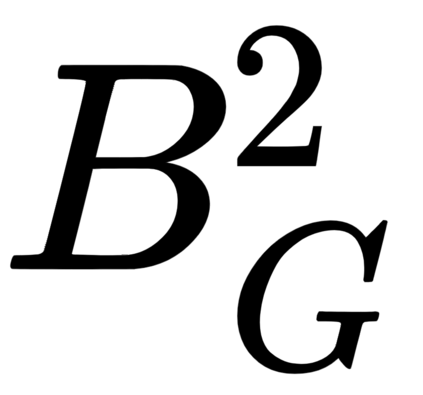

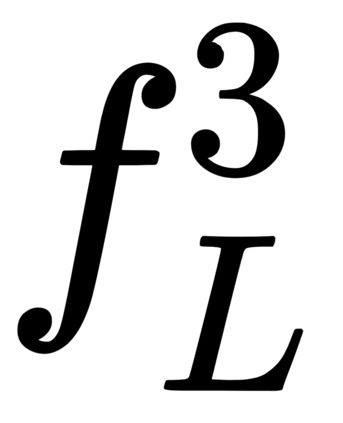

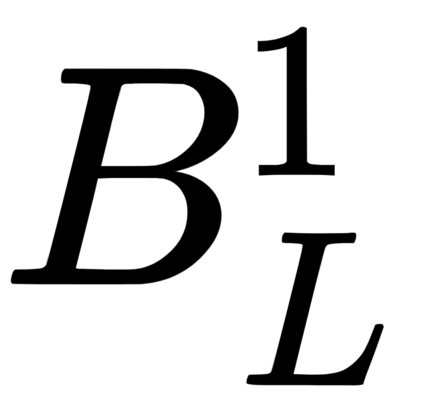

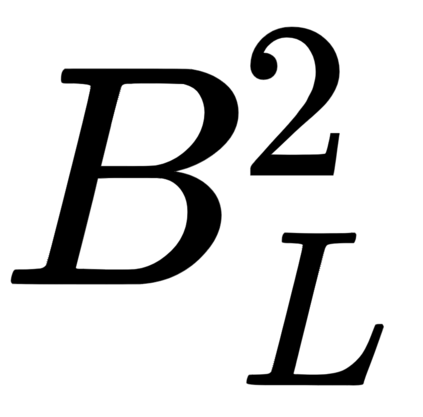

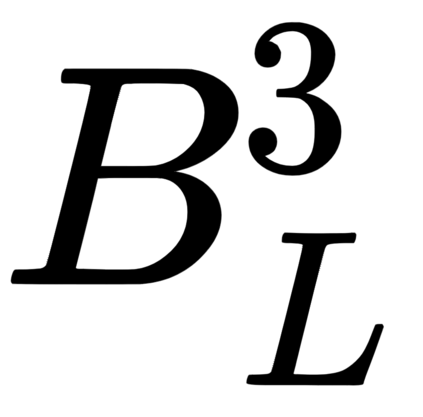

A critical challenge of federated learning is data heterogeneity and imbalance across clients, which lead to inconsistency between local networks and unstable convergence of the global model. To alleviate these limitations, we propose a novel architectural regularization technique. It constructs multiple auxiliary branches in each local model by grafting local and global subnetworks at several different levels. It then uses online knowledge distillation to make the representations of the main pathway in the local model congruent with those of the auxiliary hybrid pathways. The proposed technique effectively robustifies the global model even in the non-i.i.d. setting and can be conveniently applied to various federated learning frameworks without incurring extra communication costs. We perform comprehensive empirical studies and demonstrate remarkable gains in both accuracy and efficiency compared to existing methods. The source code is available at our project page.
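The grafting and distillation described above can be illustrated by computing a client's local training objective in a forward pass. This is a minimal NumPy sketch under stated assumptions, not the authors' implementation. Each "block" is assumed to be a single weight matrix followed by ReLU, and one hybrid branch is created at every block boundary. The auxiliary cross-entropy and distillation terms are assumed to be averaged over branches. The weights `lambda_ce` and `lambda_kd`, the temperature `tau`, and all function names are hypothetical. In real training, the global blocks would be frozen and the hybrid (teacher) outputs detached from the gradient. NumPy has no autograd, so only the loss value is computed here.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z, tau=1.0):
    """Row-wise softmax with temperature tau (max-subtracted for stability)."""
    z = z / tau
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cross_entropy(logits, labels):
    """Mean cross-entropy of integer labels under softmax(logits)."""
    p = softmax(logits)
    return float(-np.mean(np.log(p[np.arange(len(labels)), labels] + 1e-12)))


def kl_div(p_teacher, p_student):
    """KL(teacher || student), summed over classes and averaged over the batch."""
    log_ratio = np.log(p_teacher + 1e-12) - np.log(p_student + 1e-12)
    return float(np.mean(np.sum(p_teacher * log_ratio, axis=1)))


def run(blocks, x):
    """Apply weight matrices in order, with ReLU between blocks (not after the last)."""
    for i, w in enumerate(blocks):
        x = x @ w
        if i < len(blocks) - 1:
            x = relu(x)
    return x


def multi_level_branched_loss(local_blocks, global_blocks, x, y,
                              lambda_ce=1.0, lambda_kd=1.0, tau=1.0):
    """Hypothetical local objective: main-path CE + averaged hybrid-branch CE + KD."""
    n_blocks = len(local_blocks)
    main_logits = run(local_blocks, x)
    loss = cross_entropy(main_logits, y)

    ce_aux, kd_aux = 0.0, 0.0
    for k in range(1, n_blocks):
        # Hybrid pathway: the first k local blocks grafted onto the remaining
        # global blocks (in training, the global blocks would stay frozen).
        hybrid_logits = run(local_blocks[:k] + global_blocks[k:], x)
        ce_aux += cross_entropy(hybrid_logits, y)
        # Online distillation: the hybrid output is the teacher and the main
        # pathway is the student being pulled toward it.
        kd_aux += kl_div(softmax(hybrid_logits, tau), softmax(main_logits, tau))

    n_branches = n_blocks - 1
    return loss + lambda_ce * ce_aux / n_branches + lambda_kd * kd_aux / n_branches


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dims = [8, 16, 16, 4]  # hypothetical widths: 3 blocks, 4 classes
    local = [rng.normal(scale=0.3, size=(dims[i], dims[i + 1])) for i in range(3)]
    glob = [rng.normal(scale=0.3, size=(dims[i], dims[i + 1])) for i in range(3)]
    x = rng.normal(size=(5, 8))
    y = rng.integers(0, 4, size=5)
    print(multi_level_branched_loss(local, glob, x, y))
```

When the local and global weights coincide, every hybrid pathway reproduces the main pathway, so the distillation term vanishes. In this sketch, the regularizer only changes the objective once local training drifts away from the global model. Because the client already receives the global weights each round, building the hybrid branches requires no extra communication.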
