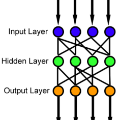

Neural networks have become popular for a wide variety of uses, but have seen limited application in safety-critical domains such as robots operating near humans, because training a neural network to obey safety constraints remains an open challenge. Most existing safety-related methods only verify that an already-trained network obeys constraints, which requires alternating between training and verification. This work instead proposes a constrained training method that simultaneously trains and verifies a feedforward neural network with rectified linear unit (ReLU) nonlinearities. Constraints are enforced by computing the network's output-space reachable set and ensuring that it does not intersect any unsafe set; training uses a novel collision-check loss function between the reachable set and the unsafe portions of the output space. The reachable and unsafe sets are represented as constrained zonotopes, a convex polytope representation that enables differentiable collision checking. The proposed method is demonstrated successfully on a network with one nonlinearity layer and approximately 50 parameters.
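The idea of pushing an input set through the network and penalizing overlap with an unsafe set can be illustrated with a much simpler stand-in. The sketch below is not the constrained-zonotope method described above: it assumes axis-aligned boxes (interval bounds) for both the reachable and unsafe sets, which over-approximates the true reachable set, and it uses a hypothetical overlap-depth penalty in place of the paper's collision-check loss. All names (`affine_bounds`, `relu_bounds`, `output_box`, `collision_loss`) and the example weights are illustrative assumptions.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Box = Tuple[Vector, Vector]  # (lower corner, upper corner)


def affine_bounds(W: Matrix, b: Vector, lo: Vector, hi: Vector) -> Box:
    """Tightest box containing the image of the box [lo, hi] under x -> W x + b."""
    out_lo, out_hi = [], []
    for row, bias in zip(W, b):
        l, u = bias, bias
        for w, a, c in zip(row, lo, hi):
            # A positive weight maps the lower input bound to the lower output
            # bound; a negative weight swaps the roles.
            if w >= 0:
                l += w * a
                u += w * c
            else:
                l += w * c
                u += w * a
        out_lo.append(l)
        out_hi.append(u)
    return out_lo, out_hi


def relu_bounds(lo: Vector, hi: Vector) -> Box:
    """ReLU is monotone, so it can be applied to each bound directly."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]


def output_box(params, lo: Vector, hi: Vector) -> Box:
    """Box over-approximation of the output set of a one-hidden-layer ReLU network."""
    W1, b1, W2, b2 = params
    lo, hi = affine_bounds(W1, b1, lo, hi)
    lo, hi = relu_bounds(lo, hi)
    return affine_bounds(W2, b2, lo, hi)


def collision_loss(reach: Box, unsafe: Box) -> float:
    """Hypothetical penalty: overlap depth along the shallowest axis.

    Returns 0.0 when the boxes are disjoint or only touch, and a positive
    value when they overlap on every axis.
    """
    (r_lo, r_hi), (u_lo, u_hi) = reach, unsafe
    overlaps = [
        min(rh, uh) - max(rl, ul)
        for rl, rh, ul, uh in zip(r_lo, r_hi, u_lo, u_hi)
    ]
    return max(0.0, min(overlaps))


# Illustrative 2-2-2 network (weights chosen by hand, not trained).
W1 = [[1.0, 0.0], [0.0, 1.0]]
b1 = [0.0, 0.0]
W2 = [[1.0, -1.0], [1.0, 1.0]]
b2 = [0.0, 0.0]

reach = output_box((W1, b1, W2, b2), [-1.0, -1.0], [1.0, 1.0])
print(reach)                                            # ([-1.0, 0.0], [1.0, 2.0])
print(collision_loss(reach, ([0.5, 1.5], [2.0, 3.0])))  # 0.5 (overlap)
print(collision_loss(reach, ([1.5, 0.0], [2.0, 1.0])))  # 0.0 (disjoint)
```

In a training loop, a penalty of this kind would be added to the task loss and differentiated with respect to the weights, so that gradient steps shrink the overlap. The constrained-zonotope representation serves the same purpose while describing the reachable set more tightly than a box can.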
