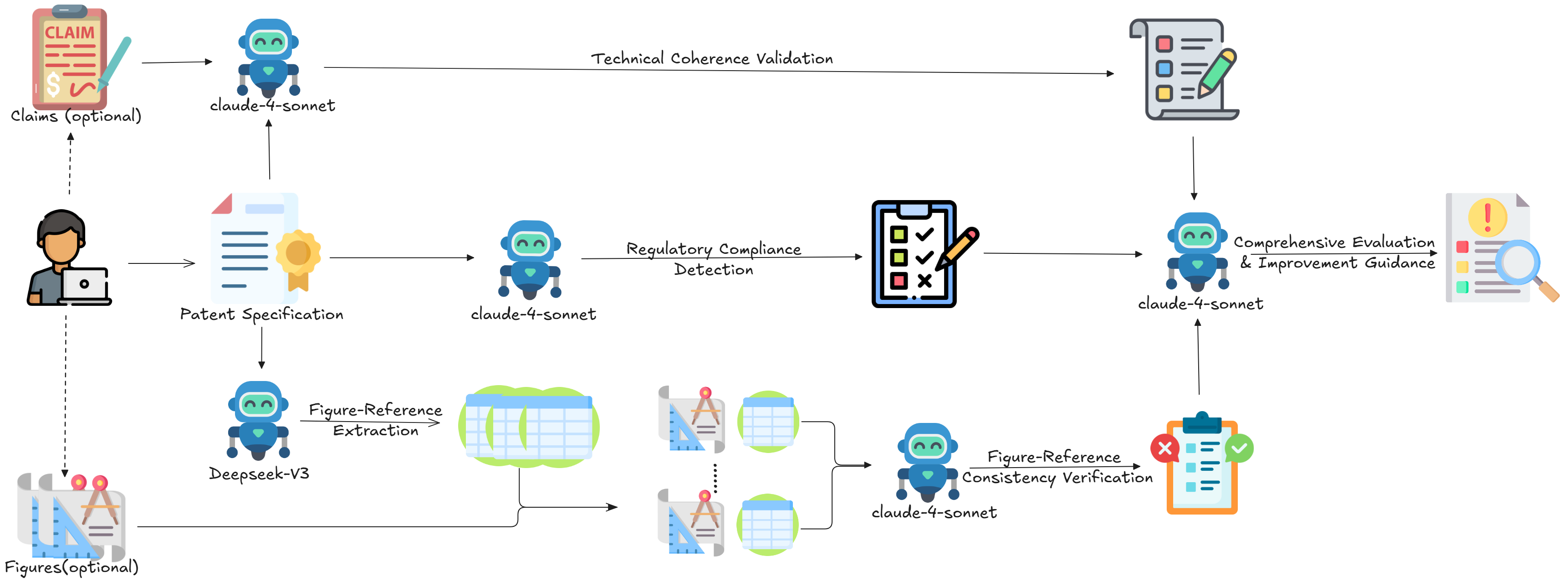

Although AI drafting tools have gained prominence in patent writing, the systematic evaluation of AI-generated patent content quality remains a significant research gap. To address this gap, we propose a framework that evaluates patents with three detection modules (regulatory compliance, technical coherence, and figure-reference consistency) and then generates improvement suggestions via an integration module. The framework is validated on a dataset of 80 human-authored patents and 80 AI-generated patents from two patent drafting tools. The three detection modules are evaluated on 10,841 total sentences, 8,924 non-template sentences, and 554 patent figures, respectively, achieving balanced accuracies of 99.74%, 82.12%, and 91.2% against expert annotations. We further analyze defect distributions across patent sections, technical domains, and authoring sources. The section-based analysis indicates that figure-text consistency and technical detail precision require particular attention. Patents in Mechanical Engineering and Construction show more claim-specification inconsistencies due to complex technical documentation requirements. AI-generated patents show a significant quality gap compared to human-authored ones: while human-authored patents primarily contain surface-level errors such as typos, AI-generated patents exhibit more structural defects in figure-text alignment and cross-references.
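The module results above are reported as balanced accuracy, which matters when defective items are much rarer than clean ones. The following is a minimal sketch of that metric, assuming the standard definition (the mean of per-class recall); the abstract does not state the exact formula used. The function name `balanced_accuracy` and the toy labels are hypothetical and are not data from the study.

```python
from typing import Sequence


def balanced_accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Mean of per-class recall, computed over the classes present in y_true.

    Assumption: this is the standard definition; the abstract does not
    specify which variant was used.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    classes = sorted(set(y_true))
    if not classes:
        raise ValueError("no labels given")

    recalls = []
    for c in classes:
        # Items whose expert label is class c.
        total = sum(1 for t in y_true if t == c)
        # Of those, items the detector also assigned to class c.
        hits = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        recalls.append(hits / total)
    return sum(recalls) / len(recalls)


# Toy labels invented for illustration: 1 = defective, 0 = clean.
expert = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
module = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

plain_accuracy = sum(t == p for t, p in zip(expert, module)) / len(expert)
print(plain_accuracy)                     # 9 of 10 labels match
print(balanced_accuracy(expert, module))  # mean of recall 0.5 and recall 1.0
```

In this toy case the detector misses one of two defective items, so plain accuracy stays high while balanced accuracy drops, which is why the latter is the more informative figure under class imbalance.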
