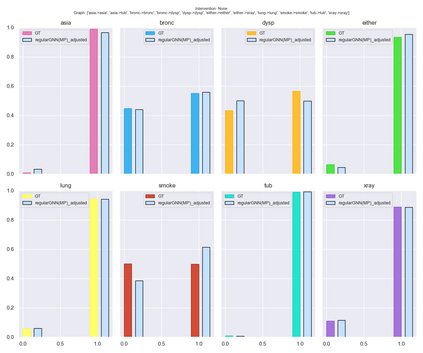

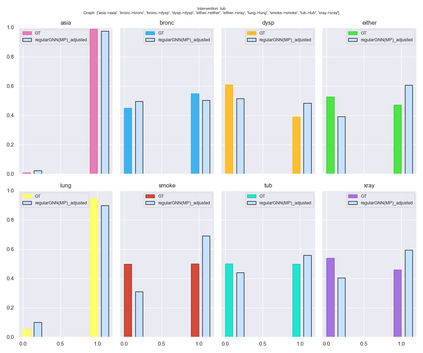

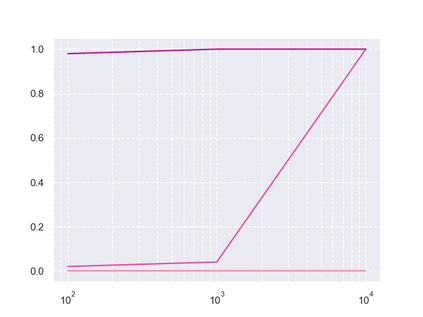

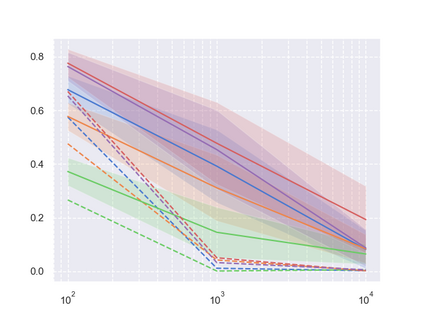

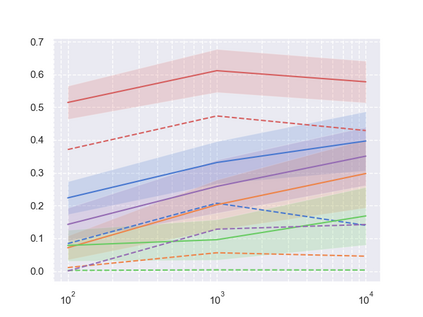

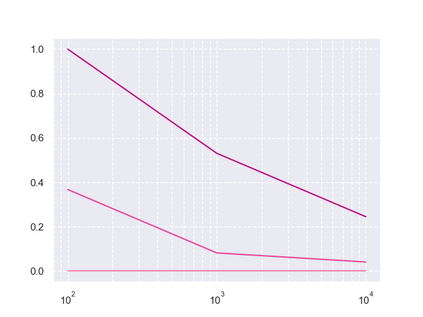

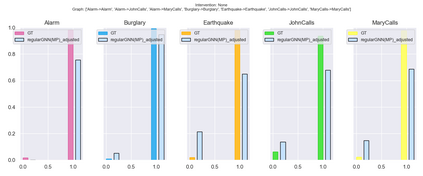

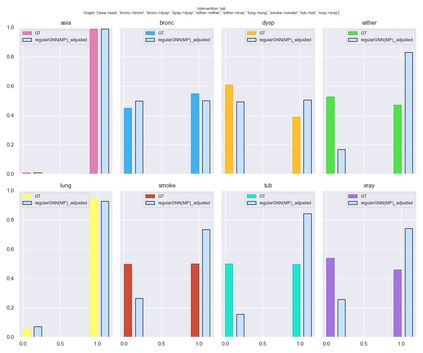

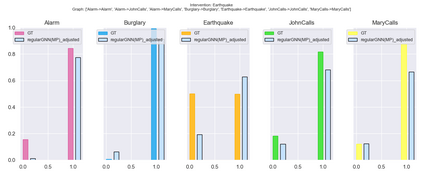

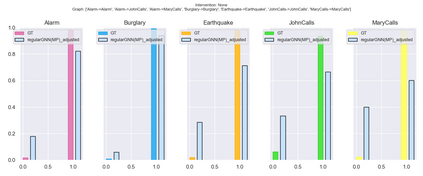

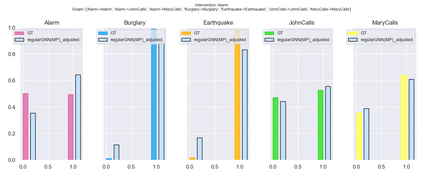

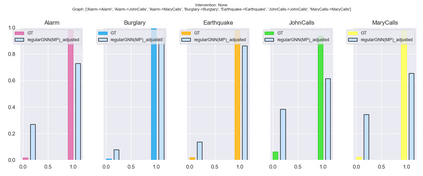

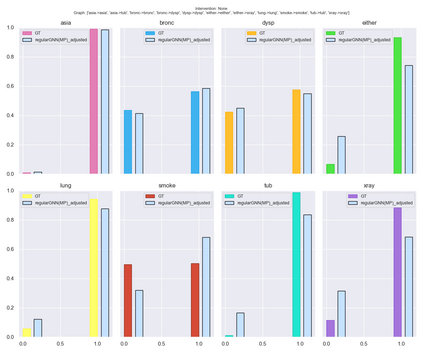

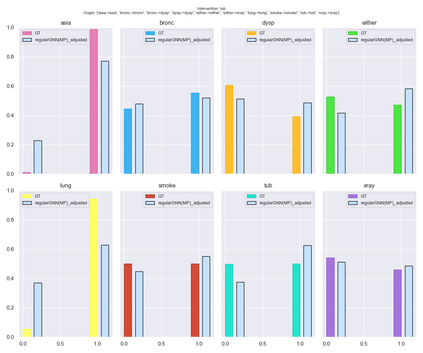

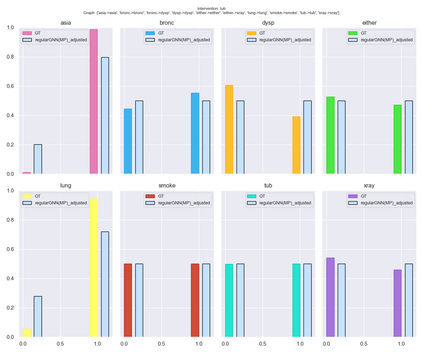

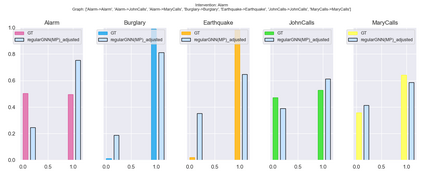

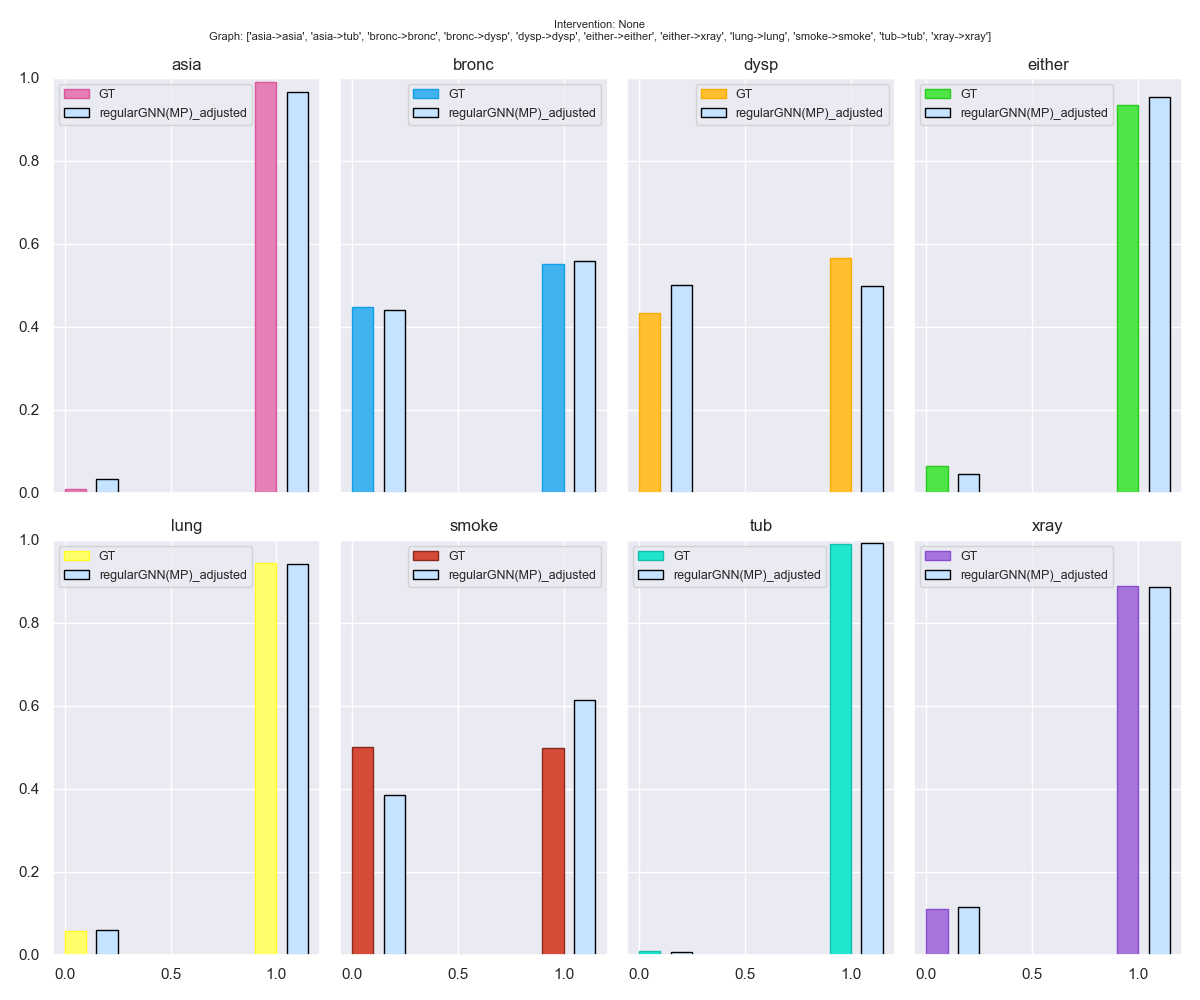

Causality can be described in terms of a structural causal model (SCM) that carries information on the variables of interest and their mechanistic relations. For most processes of interest, the underlying SCM is only partially observable, so causal inference seeks to leverage whatever information is exposed. Graph neural networks (GNNs), as universal approximators on structured input, are a viable candidate for causal learning, which suggests a tighter integration with SCMs. To this end, we present a theoretical analysis from first principles that establishes a novel connection between GNNs and SCMs while providing an extended view of general neural-causal models. We then establish a new model class for GNN-based causal inference that is necessary and sufficient for causal effect identification. Our empirical illustration on simulations and standard benchmarks validates our theoretical proofs.
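To make the notion of an SCM concrete, here is a minimal, hypothetical sketch in Python (standard library only). Each variable is computed from its parents plus an exogenous noise term, evaluated in topological order, and an intervention do(X=x) replaces X's mechanism with a constant. The three-variable model, its coefficients, and the names `SCM`, `mean_y`, and `observational_slope` are illustrative assumptions; this is not the GNN-based model class from the paper.

```python
import random
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Optional

# A mechanism maps (values of the node's parents, one exogenous noise draw) to the node's value.
Mechanism = Callable[[Dict[str, float], float], float]


class SCM:
    """Toy structural causal model: a DAG plus one mechanism per variable.

    Hypothetical illustration only; not the GNN-based model class from the paper.
    """

    def __init__(self, parents: Dict[str, List[str]], mechanisms: Dict[str, Mechanism]):
        self.parents = parents
        self.mechanisms = mechanisms
        # graphlib expects {node: predecessors}, which is exactly a parent map.
        self.order = list(TopologicalSorter(parents).static_order())

    def sample(self, rng: random.Random, do: Optional[Dict[str, float]] = None) -> Dict[str, float]:
        """Draw one joint sample; `do` fixes variables and ignores their mechanisms."""
        do = do or {}
        values: Dict[str, float] = {}
        for node in self.order:  # parents are always evaluated before their children
            if node in do:
                values[node] = do[node]  # intervention: cut incoming edges, set the value
                continue
            parent_vals = {p: values[p] for p in self.parents[node]}
            noise = rng.gauss(0.0, 1.0)  # exogenous variable U_node ~ N(0, 1)
            values[node] = self.mechanisms[node](parent_vals, noise)
        return values


# Hypothetical confounded model: Z -> X, Z -> Y, X -> Y.
scm = SCM(
    parents={"Z": [], "X": ["Z"], "Y": ["X", "Z"]},
    mechanisms={
        "Z": lambda pa, u: u,
        "X": lambda pa, u: pa["Z"] + u,
        "Y": lambda pa, u: 2.0 * pa["X"] + 3.0 * pa["Z"] + u,
    },
)


def mean_y(do: Optional[Dict[str, float]], n: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of E[Y], optionally under an intervention."""
    rng = random.Random(seed)
    return sum(scm.sample(rng, do)["Y"] for _ in range(n)) / n


def observational_slope(n: int = 20_000, seed: int = 1) -> float:
    """Least-squares slope of Y on X from purely observational samples."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        s = scm.sample(rng)
        xs.append(s["X"])
        ys.append(s["Y"])
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    return cov / var


causal_effect = mean_y({"X": 1.0}) - mean_y({"X": 0.0})
print(f"E[Y|do(X=1)] - E[Y|do(X=0)] ~ {causal_effect:.2f}")  # close to 2.0
print(f"observational slope of Y on X ~ {observational_slope():.2f}")  # close to 3.5
```

In this toy model the confounder Z pushes the observational slope (about 3.5) above the interventional effect (2.0), which illustrates why causal effects cannot simply be read off observed correlations when the SCM is only partially observed. Evaluating every parent before its children is loosely reminiscent of message passing along a graph, but the paper's precise GNN-SCM correspondence is not reproduced here.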
