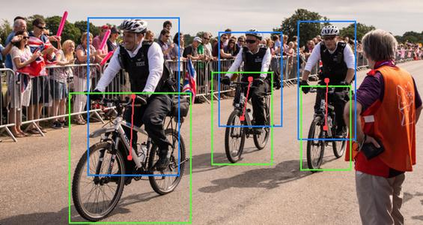

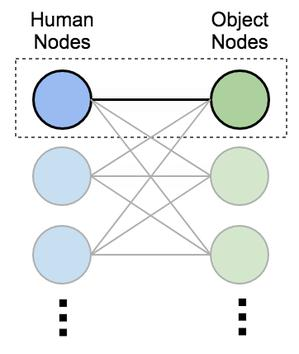

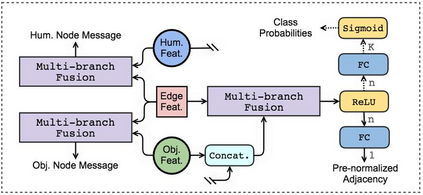

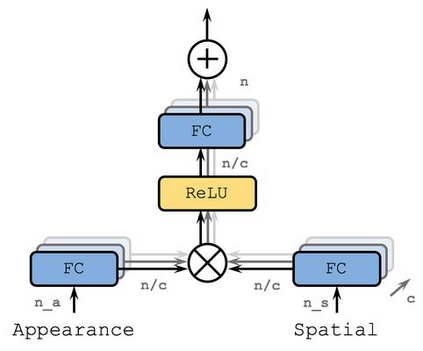

We address the problem of detecting human-object interactions in images using graph neural networks. Our network constructs a bipartite graph whose nodes represent detected humans and objects, and the messages passed between nodes encode relative spatial and appearance information. Unlike existing approaches that treat appearance and spatial features separately, our method fuses these two cues within a single graphical model, so that information conditioned on both modalities influences the prediction of interactions with neighboring nodes. Through extensive experimentation, we demonstrate the advantages of fusing relative spatial information with appearance features in the computation of the adjacency structure, the message passing, and the final refined graph features. On the popular HICO-DET benchmark dataset, our model outperforms the state of the art with an mAP of 27.18, a 10% relative improvement.
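To make the idea of spatially conditioned messages on a bipartite graph concrete, the following is a minimal sketch in plain Python. It is not the paper's implementation: the spatial encoding, the `gate` heuristic, the unlearned dot-product adjacency score, and the names `spatial_encoding` and `refine` are all assumptions chosen for illustration. A real model would replace them with learned layers.

```python
import math


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def spatial_encoding(r_box, s_box):
    """Hypothetical relative encoding: center offset, log size ratio, IoU.

    Assumes both boxes have strictly positive width and height.
    """
    rw, rh = r_box[2] - r_box[0], r_box[3] - r_box[1]
    sw, sh = s_box[2] - s_box[0], s_box[3] - s_box[1]
    dx = ((s_box[0] + s_box[2]) - (r_box[0] + r_box[2])) / (2 * rw)
    dy = ((s_box[1] + s_box[3]) - (r_box[1] + r_box[3])) / (2 * rh)
    return [dx, dy, math.log(sw / rw), math.log(sh / rh), iou(r_box, s_box)]


def softmax(xs):
    m = max(xs)
    es = [math.exp(x - m) for x in xs]
    total = sum(es)
    return [e / total for e in es]


def refine(receivers, senders):
    """One message-passing step from `senders` to `receivers`.

    Both the adjacency weights and the messages depend on appearance
    features AND on the relative box geometry (the assumed fusion).
    Returns (updated receiver features, adjacency weights).
    """
    updated, adjacency = [], []
    for r in receivers:
        scores, messages = [], []
        for s in senders:
            enc = spatial_encoding(r["box"], s["box"])
            # Assumed gate: nearby, overlapping boxes pass more signal.
            gate = math.exp(-math.hypot(enc[0], enc[1])) * (1.0 + enc[4])
            appearance = sum(x * y for x, y in zip(r["feat"], s["feat"]))
            scores.append(appearance * gate)
            messages.append([gate * f for f in s["feat"]])
        weights = softmax(scores)
        agg = [sum(w * m[k] for w, m in zip(weights, messages))
               for k in range(len(r["feat"]))]
        updated.append([f + a for f, a in zip(r["feat"], agg)])
        adjacency.append(weights)
    return updated, adjacency


# Toy example: one human, one overlapping object, one distant object.
humans = [{"box": (0, 0, 10, 10), "feat": [1.0, 0.0]}]
objects = [
    {"box": (0, 0, 10, 10), "feat": [1.0, 0.0]},
    {"box": (100, 100, 110, 110), "feat": [1.0, 0.0]},
]
human_feats, human_adj = refine(humans, objects)   # objects -> humans
object_feats, object_adj = refine(objects, humans)  # humans -> objects
```

Calling `refine` in both directions reflects the bipartite structure: humans receive messages only from objects, and objects only from humans. In the toy example, the overlapping object receives a larger adjacency weight than the distant one even though the two have identical appearance features, which is the effect that conditioning on spatial information is meant to produce.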
