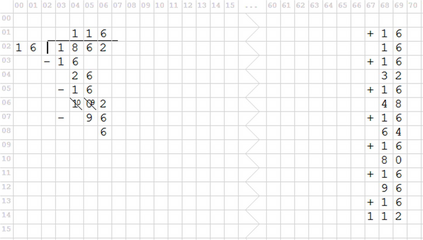

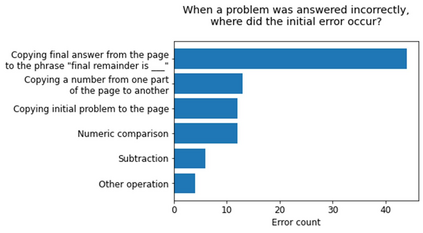

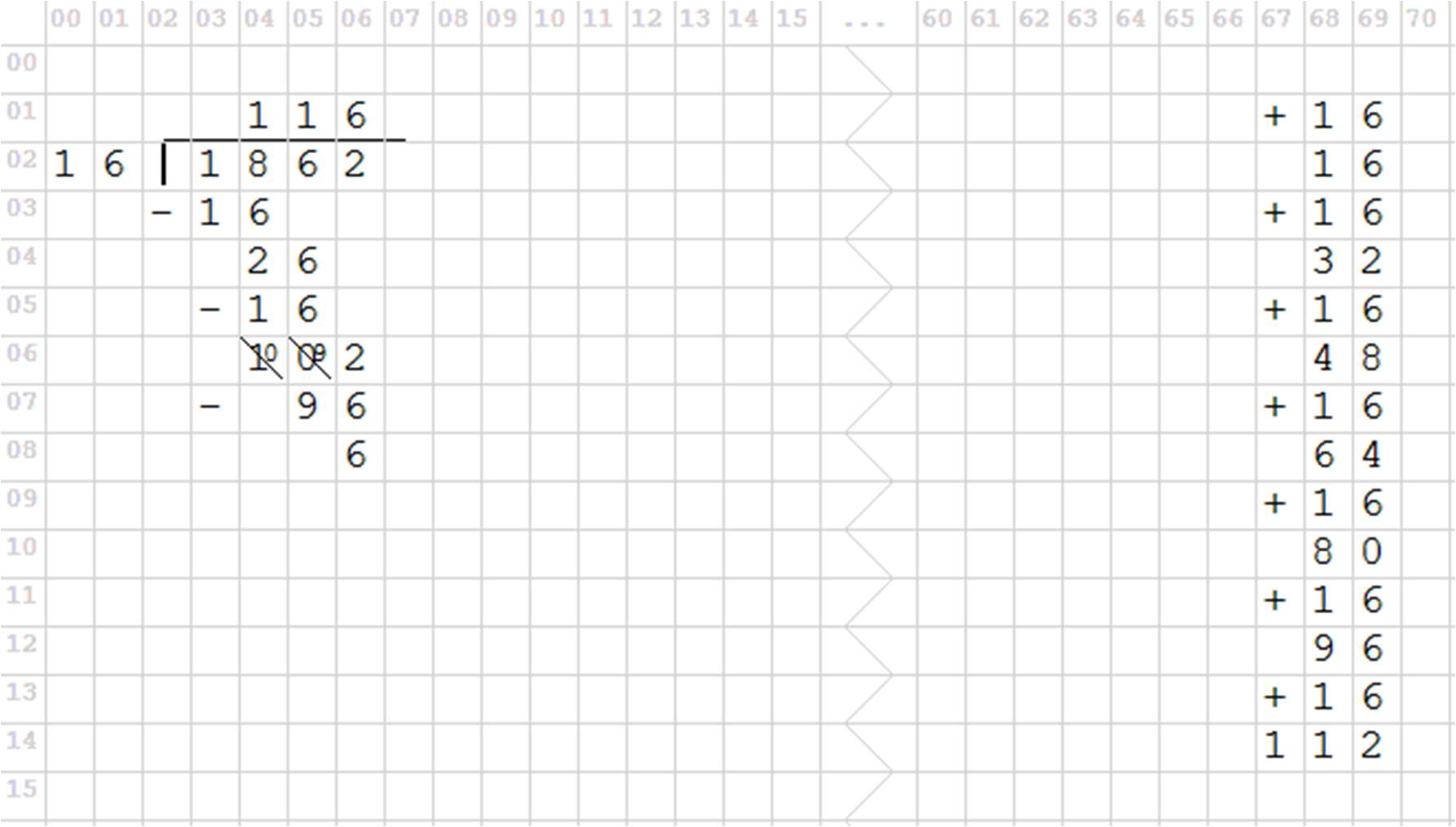

This paper demonstrates that by fine-tuning an autoregressive language model (GPT-Neo) on appropriately structured step-by-step demonstrations, it is possible to teach it to execute a mathematical task that has previously proved difficult for Transformers - longhand modulo operations - with a relatively small number of examples. Specifically, we fine-tune GPT-Neo to solve the numbers__div_remainder task from the DeepMind Mathematics Dataset; Saxton et al. (arXiv:1904.01557) reported below 40% accuracy on this task with 2 million training examples. We show that after fine-tuning on 200 appropriately structured demonstrations of solving long division problems and reporting the remainders, the smallest available GPT-Neo model achieves over 80% accuracy. This is achieved by constructing an appropriate dataset for fine-tuning, with no changes to the learning algorithm. These results suggest that fine-tuning autoregressive language models on small sets of well-crafted demonstrations may be a useful paradigm for enabling individuals without training in machine learning to coax such models to perform some kinds of complex multi-step tasks.

翻译:本文表明,通过对结构适当的逐步演示的自动递减语言模型(GPT-Neo)进行微调,可以教它执行以前证明对变形者来说难以完成的数学任务 -- -- 长手模子操作 -- -- 其实例数量相对较少。具体地说,我们微调GPT-Neo,以解决深磁数学数据集中的数字__div_remainder任务;Saxton 等人(arXiv:1904.057)报告这项工作的精确度低于40%,并举了200万个培训实例。我们表明,在对200个结构适当的解决长期分裂问题和报告其余问题的演示进行微调后,最小的现有GPT-Neo模型的精确度超过80%。这是通过为微调建立一个适当的数据集,对学习算法不作任何改动来实现的。这些结果表明,微调关于小规模精心设计的演示的自动递增语言模型可能是一种有用的范例,使没有接受机器学习培训的个人能够完成某些复杂的多步任务。